[AINews] SpaceXai Grok Imagine API - the #1 Video Model, Best Pricing and Latency

xAI cements its position as a frontier lab and prepares to merge with SpaceX

AI News for 1/28/2026-1/29/2026. We checked 12 subreddits, 544 Twitters and 24 Discords (253 channels, and 7278 messages) for you. Estimated reading time saved (at 200wpm): 605 minutes. AINews’ website lets you search all past issues. As a reminder, AINews is now a section of Latent Space. You can opt in/out of email frequencies!

It looks like OpenAI (fundraising at ~$800B), Anthropic (valued at $350B), and now SpaceX + xAI ($1.1T? following their $20B Series E three weeks ago) are in a dead heat racing to IPO by year end. Google made an EXTREMELY strong play today by launching Genie 3 (previously reported) to Ultra subscribers, and though it is technically impressive, today’s headline story rightfully belongs to Grok: xAI now has the SOTA image/video generation and editing model, released in an API you can use today.

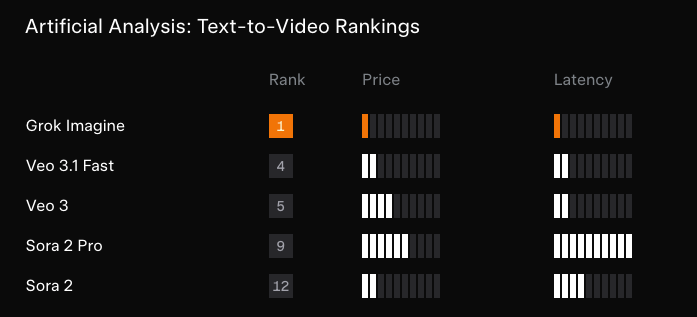

Artificial Analysis’ rankings say it all:

There’s not much else to say here, apart from this: look at the list of small video model labs and wonder which of them just got bitter-lessoned…

---

AI Twitter Recap

World Models & Interactive Simulation: Google DeepMind’s Project Genie (Genie 3) vs. Open-Source “World Simulators”

Project Genie rollout (Genie 3 + Nano Banana Pro + Gemini): Google/DeepMind launched Project Genie, a prototype that lets users create and explore interactive, real-time generated worlds from text or image prompts, with remixing and a gallery. Availability is currently gated to Google AI Ultra subscribers in the U.S. (18+), and the product is explicit about prototype limitations (e.g., ~60s generation limits, control latency, imperfect physics adherence) (DeepMind announcement, how it works, rollout details, Demis, Sundar, Google thread, Google limitations). Early-access testers highlight promptability, character/world customization, and “remixing” as key UX hooks (venturetwins, Josh Woodward demo thread).

Open-source push: LingBot-World: A parallel thread frames world models as distinct from “video dreamers,” arguing for interactivity, object permanence, and causal consistency. LingBot-World is repeatedly described as an open-source real-time interactive world model built on Wan2.2 with <1s latency at 16 FPS and minute-level coherence (claims include VBench improvements and landmark persistence after long occlusion) (paper-summary thread, HuggingPapers mention, reaction clip). The meta-narrative: proprietary systems (Genie) are shipping consumer prototypes while open systems race to close capability gaps on coherence + control.

Video Generation & Creative Tooling: xAI Grok Imagine, Runway Gen-4.5, and fal’s “Day-0” Platforms

xAI Grok Imagine (video + audio) lands near/at the top of leaderboards: Multiple sources report Grok Imagine’s strong debut in video rankings and emphasize native audio, 15s duration, and aggressive pricing ($4.20/min including audio) relative to Veo/Sora (Arena launch ranking, Artificial Analysis #1 claim + pricing context, follow-up #1 I2V leaderboard, xAI team announcement, Elon). fal positioned itself as day-0 platform partner with API endpoints for text-to-image, editing, text-to-video, image-to-video, and video editing (fal partnership, fal links tweet).
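To put the quoted $4.20/min rate (audio included) in per-clip terms, here is a back-of-the-envelope sketch. It assumes billing is strictly proportional to clip length, which the announcements cited above do not spell out, and the $100 budget is a hypothetical figure.

```python
def clip_cost(price_per_minute: float, clip_seconds: float) -> float:
    """Cost of one clip, assuming billing is linear in duration (an assumption)."""
    return price_per_minute * clip_seconds / 60.0


# A maximum-length 15 s clip at the quoted $4.20/min, audio included
per_clip = clip_cost(4.20, 15)
print(f"${per_clip:.2f} per 15 s clip")

# Number of maximum-length clips a hypothetical $100 budget covers
print(int(100 // per_clip))
```

Under that assumption a full 15-second clip costs about $1.05, so a $100 budget covers roughly 95 of them.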

Runway Gen-4.5 shifts toward “animation engine” workflows: Creators describe Gen-4.5 as increasingly controllable for animation-style work (c_valenzuelab). Runway shipped Motion Sketch (annotate camera/motion on a start frame) and Character Swap as built-in apps—more evidence that vendors are packaging controllability primitives rather than only pushing base quality (feature thread). Runway also markets “photo → story clip” flows as a mainstream onramp (Runway example).

3D generation joins the same API distribution layer: fal also added Hunyuan 3D 3.1 Pro/Rapid (text/image-to-3D, topology/part generation), showing the same “model-as-a-service + workflow endpoints” pattern spreading from image/video into 3D pipelines (fal drop).

Open Models & Benchmarks: Kimi K2.5 momentum, Qwen3-ASR release, and Trinity Large architecture details

Kimi K2.5 as the “#1 open model” across multiple eval surfaces: Moonshot promoted K2.5’s rank on VoxelBench (Moonshot), and later Kimi updates focus on productization: Kimi Code is now powered by K2.5, switching from request limits to token-based billing, plus a limited-time 3× quota/no throttling event (Kimi Code billing update, billing rationale). Arena messaging amplifies K2.5 as a leading open model with forthcoming Code Arena scores (Arena deep dive, Code Arena prompt); Arena also claims Kimi K2.5 Thinking as #1 open model in Vision Arena and the only open model in the top 15 (Vision Arena claim). Commentary frames K2.5 as “V3-generation architecture pushed with more continued training,” with next-gen competition expected from K3/GLM-5 etc. (teortaxes).

Alibaba Qwen3-ASR: production-grade open speech stack with vLLM day-0 support: Qwen released Qwen3-ASR + Qwen3-ForcedAligner emphasizing messy real-world audio, 52 languages/dialects, long audio (up to 20 minutes/pass), and timestamps; models are Apache 2.0 and include an open inference/finetuning stack. vLLM immediately announced day-0 support and performance notes (e.g., “2000× throughput on 0.6B” in their tweet) (Qwen release, ForcedAligner, vLLM support, Adina Yakup summary, native streaming claim, Qwen thanks vLLM). Net: open-source speech is increasingly “full-stack,” not just weights.
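Because a single pass is capped at roughly 20 minutes of audio, longer recordings have to be split before transcription. Below is a minimal sketch of overlapping window planning. The 20-minute cap comes from the release notes quoted above. The 5-second overlap and the `plan_windows` helper are hypothetical choices and are not part of the Qwen3-ASR or vLLM APIs.

```python
from typing import List, Tuple

MAX_PASS_SECONDS = 20 * 60  # per-pass limit reported for Qwen3-ASR
OVERLAP_SECONDS = 5         # hypothetical overlap so boundary speech is heard twice


def plan_windows(total_seconds: float,
                 max_len: float = MAX_PASS_SECONDS,
                 overlap: float = OVERLAP_SECONDS) -> List[Tuple[float, float]]:
    """Split [0, total_seconds) into windows no longer than max_len.

    Consecutive windows share `overlap` seconds, so speech near a boundary
    appears in both neighbouring windows and can be de-duplicated later.
    """
    if overlap >= max_len:
        raise ValueError("overlap must be shorter than the window")
    windows = []
    start = 0.0
    while start < total_seconds:
        end = min(start + max_len, total_seconds)
        windows.append((start, end))
        if end >= total_seconds:
            break
        start = end - overlap  # step back to create the overlap
    return windows


# A 45-minute recording needs three passes
for start, end in plan_windows(45 * 60):
    print(f"{start:7.1f}s -> {end:7.1f}s")
```

The timestamps the aligner returns for each window would then need an offset equal to the window start before the transcripts are merged.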

Arcee AI Trinity Large (400B MoE) enters the architecture discourse: Multiple threads summarize Trinity Large as 400B MoE with ~13B active, tuned for throughput via sparse expert selection, and featuring a grab bag of modern stability/throughput techniques (router tricks, load balancing, attention patterns, normalization variants). Sebastian Raschka’s architecture recap is the most concrete single reference point (rasbt); additional MoE/router stability notes appear in a separate technical summary (cwolferesearch). Arcee notes multiple variants trending on Hugging Face (arcee_ai).
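The “400B total, ~13B active” split comes from sparse expert selection. A router scores every expert for each token, but only the top-k experts actually run. The sketch below shows generic top-k softmax gating in plain Python. The expert count, k, and logits are made-up toy values, and Trinity Large’s actual router (including the stability tricks mentioned above) is not reproduced here.

```python
import math
from typing import List, Tuple


def top_k_gate(logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Generic top-k gating: keep the k highest-scoring experts and
    renormalise their softmax weights so they sum to 1."""
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in ranked)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - m) for i in ranked}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in ranked]


def active_fraction(active_params_b: float, total_params_b: float) -> float:
    """Share of weights used per token in a sparse MoE."""
    return active_params_b / total_params_b


# Toy router output for one token over 8 experts, routing to the top 2
gates = top_k_gate([0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.3, 0.2], k=2)
print(gates)  # experts 1 and 4, with weights summing to 1

# Trinity Large as reported: ~13B active out of 400B total
print(f"{active_fraction(13, 400):.2%} of parameters active per token")
```

With the reported figures, each token touches about 3.25% of the weights. That fraction is why a model of this size can be tuned for throughput.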

Agents in Practice: “Agentic Engineering,” Multi-Agent Coordination, and Enterprise Sandboxes

From vibe coding to agentic engineering: A high-engagement meme-like anchor tweet argues for “Agentic Engineering > Vibe Coding” and frames professionalism around repeatable workflows rather than vibes (bekacru). Several threads reinforce the same theme operationally: context prep, evaluations, and sandboxing as the hard parts.

Primer: repo instructions + lightweight evals + PR automation: Primer proposes a workflow for “AI-enabling” repos: agentic repo introspection → generate an instruction file → run a with/without eval harness → scale via batch PRs across org repos (Primer launch, local run, eval framework, org scaling).
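The “with/without” step is essentially an A/B comparison. The same task set runs once with the generated instruction file in the agent’s context and once without it, and the two pass rates are compared. Below is a minimal, hypothetical harness. `run_agent` stands in for whatever agent invocation Primer actually uses, and the toy agent exists only to make the sketch runnable.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Task:
    prompt: str
    check: Callable[[str], bool]  # True if the agent's output passes


# Hypothetical signature: (task prompt, optional instruction-file text) -> agent output
AgentFn = Callable[[str, Optional[str]], str]


def pass_rate(run_agent: AgentFn, tasks: List[Task], instructions: Optional[str]) -> float:
    passed = sum(t.check(run_agent(t.prompt, instructions)) for t in tasks)
    return passed / len(tasks)


def with_without(run_agent: AgentFn, tasks: List[Task], instructions: str) -> dict:
    """Compare pass rates with and without the generated instruction file."""
    with_rate = pass_rate(run_agent, tasks, instructions)
    without_rate = pass_rate(run_agent, tasks, None)
    return {"with": with_rate, "without": without_rate, "delta": with_rate - without_rate}


# Toy agent: it only knows the repo's test command if the instruction file says so
def toy_agent(prompt: str, instructions: Optional[str]) -> str:
    if instructions and "pnpm test" in instructions:
        return "run: pnpm test"
    return "run: npm test"


tasks = [Task("How do I run the tests?", lambda out: "pnpm test" in out),
         Task("What runs CI locally?", lambda out: "pnpm" in out)]
print(with_without(toy_agent, tasks, "Use `pnpm test` to run the suite."))
```

A positive `delta` is the signal that the instruction file is worth committing. Scaling across an org then amounts to running this harness per repo before opening the batch PRs.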

Agent sandboxes + traceability as infra primitives: Multiple tweets point to “agent sandboxes” (isolated execution environments) as an emerging January trend (dejavucoder). Cursor proposed an open standard to trace agent conversations to generated code, explicitly positioning it as interoperable across agents/interfaces (Cursor). This pairs with broader ecosystem pressure: agents need auditability and reliable grounding when they can take actions.
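To make the tracing idea concrete, here is a hypothetical record type that links a span of generated code back to the agent conversation that produced it, plus a lookup function. The field names are invented for illustration and are not taken from Cursor’s proposed standard (agent-trace.dev). Consult that spec directly for its actual schema.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class TraceRecord:
    # All field names are hypothetical, not from any published spec
    conversation_id: str
    agent: str          # which agent/interface produced the edit
    file_path: str
    start_line: int     # inclusive, 1-indexed
    end_line: int       # inclusive


def who_wrote(records: List[TraceRecord], path: str, line: int) -> Optional[TraceRecord]:
    """Return the most recent record covering path:line, if any.

    Assumes `records` is in chronological order, so later edits win.
    """
    for rec in reversed(records):
        if rec.file_path == path and rec.start_line <= line <= rec.end_line:
            return rec
    return None


log = [
    TraceRecord("conv-1", "agent-a", "src/app.py", 1, 40),
    TraceRecord("conv-2", "agent-b", "src/app.py", 10, 20),
]
print(who_wrote(log, "src/app.py", 15).conversation_id)  # conv-2
print(who_wrote(log, "src/app.py", 30).conversation_id)  # conv-1
```

Being interoperable across agents and interfaces mostly means agreeing on a record like this. Once the fields are shared, any tool can answer the “which conversation wrote this line?” query.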

Multi-agent coordination beats “bigger brain” framing: A popular summary claims a system that uses a controller trained by RL to route between large/small models can beat a single large agent on HLE with lower cost/latency—reinforcing that orchestration policies are becoming first-class artifacts (LiorOnAI). In the same direction, an Amazon “Insight Agents” paper summary argues for pragmatic manager-worker designs with lightweight OOD detection and routing (autoencoder + fine-tuned BERT) instead of LLM-only classifiers for latency/precision reasons (omarsar0).
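The RL-trained controller isn’t described in enough detail to reproduce. The shape of its decision can still be sketched as a simple threshold cascade: try the small model first, and escalate to the large one only when a confidence signal is low. This sketch swaps the learned policy for a hand-set threshold. The models, confidence scores, and costs are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Model:
    name: str
    cost: float                                 # hypothetical cost units per call
    answer: Callable[[str], Tuple[str, float]]  # returns (answer, confidence in [0, 1])


def cascade(query: str, small: Model, large: Model, threshold: float = 0.7):
    """Route to the small model first and escalate if its confidence is below threshold.

    A learned controller would replace this fixed threshold with a policy.
    """
    answer, conf = small.answer(query)
    spent = small.cost
    if conf >= threshold:
        return answer, small.name, spent
    answer, _ = large.answer(query)
    return answer, large.name, spent + large.cost


small = Model("small", 1.0, lambda q: ("4", 0.95) if q == "2+2" else ("?", 0.2))
large = Model("large", 20.0, lambda q: ("a careful answer", 0.9))

print(cascade("2+2", small, large))            # ('4', 'small', 1.0)
print(cascade("hard question", small, large))  # ('a careful answer', 'large', 21.0)
```

The cost savings come from the easy queries that never reach the large model. Training the routing policy is how a system gets those savings without losing accuracy on the hard queries.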

Kimi’s “Agent Swarm” philosophy: A long-form repost from ZhihuFrontier describes K2.5’s agent mode as a response to “text-only helpfulness” and tool-call hallucinations, emphasizing planning→execution bridging, dynamic tool-based context, and multi-viewpoint planning via swarms (ZhihuFrontier).

Moltbot/Clawdbot safety trilemma: Community discussion frames “Useful vs Autonomous vs Safe” as a tri-constraint until prompt injection is solved (fabianstelzer). Another take argues capability (trust) bottlenecks dominate: users won’t grant high-stakes autonomy (e.g., finance) until agents are reliably competent (Yuchenj_UW).

Model UX, DevTools, and Serving: Gemini Agentic Vision, OpenAI’s in-house data agent, vLLM fixes, and local LLM apps

Gemini 3 Flash “Agentic Vision”: Google positions Agentic Vision as a structured image-analysis pipeline: planning steps, zooming, annotating, and optionally running Python for plotting—essentially turning “vision” into an agentic workflow rather than a single forward pass (GeminiApp intro, capabilities, rollout note).

OpenAI’s in-house data agent at massive scale: OpenAI described an internal “AI data agent” reasoning over 600+ PB and 70k datasets, using Codex-powered table knowledge and careful context management (OpenAIDevs). This is a rare concrete peek at “deep research/data agent” architecture constraints: retrieval + schema/table priors + org context.

Serving bugs are still real (vLLM + stateful models): AI21 shared a debugging story where scheduler token allocation caused misclassification between prefill vs decode, now fixed in vLLM v0.14.0—a reminder that infrastructure correctness matters, especially for stateful architectures like Mamba (AI21Labs thread).
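Some background on the distinction: a request is in prefill while the engine is still consuming prompt tokens, and in decode once it is generating new ones. The sketch below is a hypothetical illustration of how such a misclassification can arise. It is not AI21’s actual bug or vLLM’s scheduler code. A shortcut that treats “one scheduled token” as decode mislabels the last step of a chunked prefill. That matters for stateful models like Mamba, whose prefill and decode typically run through different kernels.

```python
def phase_by_progress(num_prompt_tokens: int, num_computed_tokens: int) -> str:
    """Classify by how much of the prompt has been processed before this step."""
    return "prefill" if num_computed_tokens < num_prompt_tokens else "decode"


def phase_by_step_size(num_scheduled_tokens: int) -> str:
    """Hypothetical shortcut: assume any single-token step is a decode step."""
    return "decode" if num_scheduled_tokens == 1 else "prefill"


# A 9-token prompt prefilled in chunks under a token budget: steps of 4, 4, 1
prompt_len, computed = 9, 0
for scheduled in (4, 4, 1):
    exact = phase_by_progress(prompt_len, computed)
    naive = phase_by_step_size(scheduled)
    flag = "" if exact == naive else "  <-- mismatch"
    print(f"computed={computed} scheduled={scheduled}: {exact} vs {naive}{flag}")
    computed += scheduled
```

The third step still belongs to the prompt, but the shortcut labels it decode. For a stateful layer, that would send the state through the wrong update path.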

Local LLM UX continues to improve: Georgi Gerganov shipped LlamaBarn, a tiny macOS menu bar app built on llama.cpp to run local models (ggerganov). Separate comments suggest agentic coding performance may improve by disabling “thinking” modes for specific models (GLM-4.7-Flash) via llama.cpp templates (ggerganov config note).

Top tweets (by engagement)

Grok Imagine hype & distribution: @elonmusk, @fal, @ArtificialAnlys

DeepMind/Google world models: @GoogleDeepMind, @demishassabis, @sundarpichai

AI4Science: @demishassabis on AlphaGenome

Speech open-source release: @Alibaba_Qwen Qwen3-ASR

Agents + developer workflow: @bekacru “Agentic Engineering > Vibe Coding”, @cursor_ai agent-trace.dev

Anthropic workplace study: @AnthropicAI AI-assisted coding and mastery

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Kimi K2.5 Model Discussions and Releases

AMA With Kimi, The Open-source Frontier Lab Behind Kimi K2.5 Model (Activity: 686): Kimi is the research lab behind the open-source Kimi K2.5 model, engaging in an AMA to discuss their work. The discussion highlights a focus on large-scale models, with inquiries about the development of smaller models like 8B, 32B, and 70B for better intelligence density. There is also interest in smaller Mixture of Experts (MoE) models, such as ~100B total with ~3B active (A3B), optimized for local or prosumer use. The team is questioned on their stance regarding the notion that Scaling Laws have hit a wall, a topic of current debate in AI research. Commenters express a desire for smaller, more efficient models, suggesting that these could offer better performance for specific use cases. The debate on scaling laws reflects a broader concern in the AI community about the limits of current model scaling strategies.

The discussion around model sizes highlights a preference for smaller models like 8B, 32B, and 70B due to their ‘intelligence density.’ These sizes are considered optimal for balancing performance and resource efficiency, suggesting a demand for models that can operate effectively on limited hardware while still providing robust capabilities.

The inquiry into smaller Mixture of Experts (MoE) models, such as ~100B total with ~3B active (A3B), indicates interest in models optimized for local or prosumer use. This reflects a trend towards developing models that are not only powerful but also accessible for individual users or small enterprises, emphasizing the need for efficient resource utilization without sacrificing performance.

The challenge of maintaining non-coding abilities like creative writing and emotional intelligence in models like Kimi K2.5 is significant, especially as coding benchmarks become more prominent. The team is tasked with ensuring these softer skills do not regress, which involves balancing the training focus between technical and creative capabilities to meet diverse user needs.

Run Kimi K2.5 Locally (Activity: 553): The image provides a guide for running the Kimi-K2.5 model locally, emphasizing its state-of-the-art (SOTA) performance in vision, coding, agentic, and chat tasks. The model, a 1-trillion-parameter hybrid reasoning model, requires 600GB of disk space, but the quantized Unsloth Dynamic 1.8-bit version reduces this requirement to 240GB, a 60% reduction. The guide includes instructions for using llama.cpp to load models and demonstrates generating HTML code for a simple game. The model is available on Hugging Face, and further documentation can be found on Unsloth’s official site. Commenters discuss the feasibility of running the model on high-end hardware, with one user questioning its performance on a Strix Halo setup and another highlighting the substantial VRAM requirements, suggesting that only a few users can realistically run it locally.

Daniel_H212 is inquiring about the performance of the Kimi K2.5 model on the Strix Halo hardware, specifically asking for the token generation speed in seconds per token. This suggests a focus on benchmarking the model’s efficiency on high-end hardware setups.
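The reported sizes line up with simple bits-per-weight arithmetic. The sketch below is a rough lower bound. It ignores file metadata and the higher-precision layers that dynamic quants typically keep, which is why the real 1.8-bit download (240GB) is larger than the 225GB it computes.

```python
def weights_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Lower-bound storage for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


def reduction(original_gb: float, quantized_gb: float) -> float:
    """Fractional size reduction from original to quantized."""
    return 1 - quantized_gb / original_gb


params = 1e12  # "1 trillion parameters", as reported
print(f"{weights_size_gb(params, 1.8):.0f} GB at an average 1.8 bits/weight")  # 225 GB
print(f"{reduction(600, 240):.0%} smaller than the 600GB release")             # 60%
```

The same formula explains the VRAM concern in the comments. Even at under 2 bits per weight, a 1T-parameter model needs a couple of hundred gigabytes of fast memory before any KV cache is counted.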

Marksta provides feedback on the quantized version of the Kimi K2.5 model, specifically the Q2_K_XL variant. They note that the model maintains high coherence and adheres strictly to prompts, which is characteristic of Kimi-K2’s style. However, they also mention that while the model’s creative capabilities have improved, it still struggles with execution in creative scenarios, often delivering logical but poorly written responses.

MikeRoz questions the utility of higher quantization levels like Q5 and Q6 (e.g., UD-Q5_K_XL, Q6_K) when most of the model’s experts are already int4. This highlights a debate on the trade-offs between model size, performance, and precision in quantization strategies.

Kimi K2.5 is the best open model for coding (Activity: 1119): The image highlights Kimi K2.5 as the leading open model for coding on the LMARENA.AI leaderboard, ranked #7 overall. This model is noted for its superior performance in coding tasks compared to other open models. The leaderboard provides a comparative analysis of various AI models, showcasing their ranks, scores, and confidence intervals, emphasizing Kimi K2.5’s achievements in the coding domain. One commenter compared Kimi K2.5’s performance to other models, noting it is on par with Sonnet 4.5 in accuracy but not as advanced as Opus in agentic function. Another comment criticized LMArena for not reflecting a model’s multi-turn or long-context capabilities.

A user compared Kimi K2.5 to other models, noting that it performs on par with Sonnet 4.5 in terms of accuracy for React projects, but not at the level of Opus in terms of agentic function. They also mentioned that Kimi K2.5 surpasses GLM 4.7, which was their previous choice, and expressed curiosity about the upcoming GLM-5 from z.ai.

Another commenter criticized LMArena, stating that it fails to provide insights into a model’s multi-turn, long context, or agentic capabilities, implying that such benchmarks are insufficient for evaluating comprehensive model performance.

A user highlighted the cost-effectiveness of Kimi K2.5, stating it feels as competent as Opus 4.5 while being significantly cheaper, approximately 1/5th the cost, and even less expensive than Haiku. This suggests a strong performance-to-cost ratio for Kimi K2.5.

Finally We have the best agentic AI at home (Activity: 464): The image is a performance comparison chart of various AI models, including Kimi K2.5, GPT-5.2 (xhigh), Claude Opus 4.5, and Gemini 3 Pro. Kimi K2.5 is highlighted as the top-performing model across multiple categories such as agents, coding, image, and video tasks, indicating its superior capabilities in multimodal applications. The post suggests excitement about integrating this model with a ‘clawdbot’, hinting at potential applications in robotics or automation. A comment humorously suggests that hosting the 1T+ parameter Kimi K2.5 model at home implies having a large home, pointing to the model’s high computational requirements. Another comment sarcastically mentions handling it with a 16GB VRAM card, implying skepticism about the feasibility of running such a model on typical consumer hardware.

2. Open Source Model Innovations

LingBot-World outperforms Genie 3 in dynamic simulation and is fully Open Source (Activity: 230): The open-source framework LingBot-World surpasses the proprietary Genie 3 in dynamic simulation capabilities, achieving 16 FPS and maintaining object consistency for 60 seconds outside the field of view. This model, available on Hugging Face, offers enhanced handling of complex physics and scene transitions, challenging the monopoly of proprietary systems by providing full access to its code and model weights. Commenters question the hardware requirements for running LingBot-World and express skepticism about the comparison with Genie 3, suggesting a lack of empirical evidence or direct access to Genie 3 for a fair comparison.

A user questioned the hardware requirements for running LingBot-World, highlighting the importance of specifying computational needs for practical implementation. This is crucial for users to understand the feasibility of deploying the model in various environments.

Another commenter raised concerns about the lack of a direct comparison with Genie 3, suggesting that without empirical data or benchmarks, claims of LingBot-World’s superiority might be unsubstantiated. This points to the need for transparent and rigorous benchmarking to validate performance claims.

A suggestion was made to integrate a smaller version of LingBot-World into a global illumination stack, indicating potential applications in graphics and rendering. This could leverage the model’s capabilities in dynamic simulation to enhance visual computing tasks.

API pricing is in freefall. What’s the actual case for running local now beyond privacy? (Activity: 1053): The post discusses the rapidly decreasing costs of API access for AI models, with examples like K2.5 priced at 10% of Opus and DeepSeek being nearly free. Gemini also provides a substantial free tier. This trend is contrasted with the challenges of running large models locally, such as the need for expensive GPUs or dealing with quantization tradeoffs, which result in slow generation speeds (15 tok/s) on consumer hardware. The author questions the viability of local setups given these API pricing trends, noting that while privacy and latency control are valid reasons, the cost-effectiveness of local setups is diminishing. Commenters highlight concerns about the sustainability of low API prices, suggesting they may rise once market dominance is achieved, similar to past trends in other industries. Others emphasize the importance of offline capabilities and the ability to audit and trust local models, which ensures consistent behavior without unexpected changes from vendors.

Minimum-Vanilla949 highlights the importance of offline capabilities for those who travel frequently, emphasizing the risk of API companies altering terms of service or increasing prices once they dominate the market. This underscores the value of local models for ensuring consistent access and cost control.

05032-MendicantBias discusses the unsustainable nature of current API pricing, which is often subsidized by venture capital. They argue that once a monopoly is achieved, prices will likely increase, making local setups and open-source tools a strategic defense against such business models.

IactaAleaEst2021 points out the importance of repeatability and trust in using local models. By downloading and auditing a model, users can ensure consistent behavior, unlike APIs where vendors might change model behavior over time, potentially reducing its utility for specific tasks.
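On the cost side alone, the local-vs-API question comes down to a break-even calculation. The sketch below uses placeholder numbers throughout. The hardware price, electricity cost, API rate, and monthly token volume are hypothetical inputs, not figures from the thread. It also ignores depreciation, resale value, and the throughput gap (15 tok/s locally) mentioned in the post.

```python
def breakeven_months(hardware_cost: float,
                     local_monthly_power: float,
                     api_price_per_mtok: float,
                     mtok_per_month: float) -> float:
    """Months until buying hardware beats paying per token.

    Returns infinity if the API costs less per month than the local power bill.
    """
    api_monthly = api_price_per_mtok * mtok_per_month
    monthly_saving = api_monthly - local_monthly_power
    if monthly_saving <= 0:
        return float("inf")
    return hardware_cost / monthly_saving


# Hypothetical inputs: $8,000 rig, $40/month electricity, $2 per million tokens
for volume in (10, 100, 1000):  # millions of tokens per month
    months = breakeven_months(8000, 40, 2.0, volume)
    print(f"{volume:>5} Mtok/month -> {months:.1f} months")
```

At low volumes the API never loses on cost. The commenters’ other arguments (offline use, future price increases, reproducibility) fall outside this arithmetic.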

3. Trends in AI Agent Frameworks

GitHub trending this week: half the repos are agent frameworks. 90% will be dead in 1 week. (Activity: 538): The image highlights a trend on GitHub where many of the trending repositories are related to AI agent frameworks, suggesting a surge in interest in these tools. However, the post’s title and comments express skepticism about the sustainability of this trend, comparing it to the rapid rise and fall of JavaScript frameworks. The repositories are mostly written in Python and include a mix of agent frameworks, RAG tooling, and model-related projects like NanoGPT and Grok. The discussion reflects a concern that many of these projects may not maintain their popularity or relevance over time. One comment challenges the claim that half of the trending repositories are agent frameworks, noting that only one is an agent framework by Microsoft, while others are related to RAG tooling and model development. Another comment appreciates the utility of certain projects, like IPTV, for educational purposes.

gscjj points out that the claim about ‘half the repos being agent frameworks’ is inaccurate. They note that the list includes a variety of projects such as Microsoft’s agent framework, RAG tooling, and models like NanoGPT and Grok, as well as Kimi’s coding CLI and a browser API. This suggests a diverse range of trending repositories rather than a dominance of agent frameworks.

Mistral CEO Arthur Mensch: “If you treat intelligence as electricity, then you just want to make sure that your access to intelligence cannot be throttled.” (Activity: 357): Arthur Mensch, CEO of Mistral, advocates for open-weight models, likening intelligence to electricity, emphasizing the importance of unrestricted access to AI capabilities. This approach supports the deployment of models on local devices, reducing costs as models are quantized for lower compute environments, contrasting with closed models that are often large and monetized through paywalls. Mistral aims to balance corporate interests with open access, potentially leading to significant breakthroughs in AI deployment. Commenters appreciate Mistral’s approach to open models, noting the potential for reduced costs and increased accessibility. There is a consensus that open models could democratize AI usage, contrasting with the restrictive nature of closed models.

RoyalCities highlights the cost dynamics of model deployment, noting that open models, especially when quantized, reduce costs as they can be run on local devices. This contrasts with closed models that are often large and require significant infrastructure, thus being monetized through paywalls. This reflects a broader industry trend where open models aim to democratize access by lowering hardware requirements.

HugoCortell points out the hardware bottleneck in deploying open models effectively. While open-source models can rival closed-source ones in performance, the lack of affordable, high-performance hardware limits their accessibility. This is compounded by large companies making high-quality local hardware increasingly expensive, suggesting a need for a company capable of producing and distributing its own hardware to truly democratize AI access.

tarruda expresses anticipation for the next open Mistral model, specifically the “8x22”. This indicates a community interest in the technical specifications and potential performance improvements of upcoming models, reflecting the importance of open model development in advancing AI capabilities.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI and AGI Investments

Nearly half of the Mag 7 are reportedly betting big on OpenAI’s path to AGI (Activity: 1153): NVIDIA, Microsoft, and Amazon are reportedly in discussions to invest a combined total of up to $60 billion into OpenAI, with SoftBank considering an additional $30 billion. This potential investment could value OpenAI at approximately $730 billion pre-money (roughly $820 billion post-money if the full $90 billion were raised), in line with recent valuation discussions in the $750 billion to $850 billion+ range. This would mark one of the largest private capital raises in history, highlighting the significant financial commitment from major tech companies towards the development of artificial general intelligence (AGI). Commenters note the strategic alignment of these investments, with one pointing out that companies like Microsoft and NVIDIA are unlikely to invest in competitors like Google. Another comment reflects on the evolving landscape of large language models (LLMs) and the shifting focus of tech giants.

CoolStructure6012 highlights the strategic alignment between Microsoft (MSFT) and NVIDIA (NVDA) with OpenAI, suggesting that their investments are logical given their competitive stance against Google. This reflects the broader industry trend where tech giants are aligning with AI leaders to bolster their AI capabilities and market positions.

drewc717 reflects on the evolution of AI models, noting a significant productivity boost with OpenAI’s 4.1 Pro mode. However, they report a decline in their workflow efficiency after switching to Gemini, indicating that not all LLMs provide the same level of user experience or productivity, which is crucial for developers relying on these tools.

EmbarrassedRing7806 questions the lack of attention on Anthropic despite its widespread use in coding through its Claude model, as opposed to OpenAI’s Codex. This suggests a potential underestimation of Anthropic’s impact in the AI coding space, where Claude might be offering competitive or superior capabilities.

2. DeepMind’s AlphaGenome Launch

Google DeepMind launches AlphaGenome, an AI model that analyzes up to 1 million DNA bases to predict genomic regulation (Activity: 427): Google DeepMind has introduced AlphaGenome, a sequence model capable of analyzing up to 1 million DNA bases to predict genomic regulation, as detailed in Nature. The model excels in predicting genomic signals such as gene expression and chromatin structure, particularly in non-coding DNA, which is crucial for understanding disease-associated variants. AlphaGenome outperforms existing models on 25 of 26 benchmark tasks and is available for research use, with its model and weights accessible on GitHub. Commenters highlight the model’s potential impact on genomics, with some joking that it puts DeepMind on track for another Nobel prize.

[R] AlphaGenome: DeepMind’s unified DNA sequence model predicts regulatory variant effects across 11 modalities at single-bp resolution (Nature 2026) (Activity: 66): DeepMind’s AlphaGenome introduces a unified DNA sequence model that predicts regulatory variant effects across 11 modalities at single-base-pair resolution. The model processes 1M base pairs of DNA to predict thousands of functional genomic tracks, matching or exceeding specialized models in 25 of 26 evaluations. It employs a U-Net backbone with CNN and transformer layers, trained on human and mouse genomes, and captures 99% of validated enhancer-gene pairs within a 1Mb context. Training on TPUv3 took 4 hours, with inference under 1 second on H100. The model demonstrates cross-modal variant interpretation, notably on the TAL1 oncogene in T-ALL. Links: Nature, bioRxiv, DeepMind blog, GitHub. Some commenters view the model as an incremental improvement over existing sequence models, questioning the novelty despite its publication in Nature. Others express concerns about the implications of open-sourcing such powerful genomic tools, hinting at potential future applications like ‘text to CRISPR’ models.

st8ic88 argues that while DeepMind’s AlphaGenome model is notable for its ability to predict regulatory variant effects across 11 modalities at single-base-pair resolution, it is an incremental improvement over existing sequence models that predict genomic tracks. The comment suggests that the model’s prominence is partly due to DeepMind’s reputation and branding, particularly the use of ‘Alpha’ in its name, which may have contributed to its publication in Nature.

--MCMC-- is interested in the differences between the AlphaGenome model’s preprint and its final published version in Nature. The commenter had read the preprint and is curious about any changes made during the peer review process, which could include updates to the model’s methodology, results, or interpretations.

f0urtyfive raises concerns about the potential risks of open-sourcing powerful genomic models like AlphaGenome, speculating on future developments such as ‘text to CRISPR’ models. This comment highlights the ethical and safety considerations of making advanced genomic prediction tools widely accessible, which could lead to unintended applications or misuse.
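DNA sequence models of this kind generally take one-hot encoded bases as input. They score a variant by comparing predictions for the reference and alternate sequences. The sketch below shows only that generic recipe. `toy_predict` is a hypothetical stand-in that returns GC content, not AlphaGenome’s API or architecture. Real inputs would also be up to 1Mb long rather than a dozen bases.

```python
from typing import List

BASES = "ACGT"


def one_hot(seq: str) -> List[List[int]]:
    """Encode DNA as 4-element indicator vectors in A, C, G, T order.

    Unknown bases such as N become all-zero rows.
    """
    return [[1 if b == base else 0 for base in BASES] for b in seq.upper()]


def toy_predict(encoded: List[List[int]]) -> float:
    """Hypothetical stand-in for one predicted track: fraction of G/C bases."""
    gc = sum(row[1] + row[2] for row in encoded)
    return gc / len(encoded)


def variant_effect(ref_seq: str, pos: int, alt: str) -> float:
    """Score = prediction(alternate sequence) - prediction(reference sequence)."""
    alt_seq = ref_seq[:pos] + alt + ref_seq[pos + 1:]
    return toy_predict(one_hot(alt_seq)) - toy_predict(one_hot(ref_seq))


ref = "ATGCATTAGCAT"
print(one_hot("ACGN"))
print(round(variant_effect(ref, 1, "G"), 4))  # a T->G change raises GC content by 1/12
```

In a real model, `toy_predict` would be replaced by thousands of output tracks across modalities. The reference-vs-alternate subtraction would then be applied per track and per position.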

3. Claude’s Cost Efficiency and Usage Strategies

Claude Subscriptions are up to 36x cheaper than API (and why “Max 5x” is the real sweet spot) (Activity: 665): A data analyst has reverse-engineered Claude’s internal usage limits by analyzing unrounded floats in the web interface, revealing that subscriptions can be up to 36x cheaper than using the API, especially for coding tasks with agents like Claude Code. The analysis shows that the subscription model offers free cache reads, whereas the API charges 10% of the input cost for each read, making the subscription significantly more cost-effective for long sessions. The “Max 5x” plan at $100/month is highlighted as the most optimized, offering a 6x higher session limit and an 8.3x higher weekly limit than the Pro plan, which differs from the multipliers implied by the “5x” and “20x” plan names. The findings were derived using the Stern-Brocot tree to decode precise usage percentages into internal credit numbers. Full details and formulas are available in the original post. Commenters express concern over Anthropic’s lack of transparency and speculate that the company might change the limits once it realizes users have reverse-engineered them. Some users are taking advantage of the current subscription benefits, anticipating potential changes.

HikariWS raises a critical point about Anthropic’s lack of transparency regarding their subscription limits, which could change unexpectedly, rendering current analyses obsolete. This unpredictability poses a risk for developers relying on these plans for cost-effective usage.

Isaenkodmitry discusses the potential for Anthropic to close loopholes once they realize users are exploiting subscription plans for cheaper access compared to the API. This highlights a strategic risk for developers who are currently benefiting from these plans, suggesting they should maximize usage while it lasts.

Snow30303 mentions using Claude Code in VS Code for Flutter apps, noting that it consumes credits rapidly. This suggests a need for more efficient usage strategies or alternative solutions to manage costs effectively when integrating Claude into development workflows.
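The Stern-Brocot step can be illustrated generically. Suppose a displayed value is known only to within some rounding tolerance. Walking the Stern-Brocot tree finds the simplest fraction (smallest denominator) inside that interval, which suggests an underlying integer ratio such as credits used over credits allowed. The sketch below implements that generic search. The example value and tolerance are made up, and the analyst’s exact procedure is in the original post, not reproduced here.

```python
from fractions import Fraction


def simplest_fraction_in(lo: Fraction, hi: Fraction) -> Fraction:
    """Return the fraction with the smallest denominator in [lo, hi].

    Walks the Stern-Brocot tree: the first mediant that lands inside the
    interval is the simplest fraction it contains. Assumes 0 < lo <= hi.
    """
    if lo <= 0 or lo > hi:
        raise ValueError("need 0 < lo <= hi")
    a, b, c, d = 0, 1, 1, 0  # left bound a/b = 0/1, right bound c/d = 1/0 (infinity)
    while True:
        m = Fraction(a + c, b + d)  # mediant of the current bounds
        if m < lo:
            a, b = a + c, b + d     # interval lies to the right: raise the left bound
        elif m > hi:
            c, d = a + c, b + d     # interval lies to the left: lower the right bound
        else:
            return m


def decode_ratio(value: float, tol: float) -> Fraction:
    """Recover a likely integer ratio behind a float known only to within +/- tol."""
    x = Fraction(value)  # exact rational value of the float
    return simplest_fraction_in(x - Fraction(tol), x + Fraction(tol))


# Hypothetical example: an unrounded usage value of 0.7142857142857143
print(decode_ratio(0.7142857142857143, 1e-12))  # 5/7
```

The tolerance is the key design choice. Too loose, and the search returns an overly simple ratio. Too tight, and float noise hides the true denominator.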

We reduced Claude API costs by 94.5% using a file tiering system (with proof) (Activity: 603): The post describes a file tiering system that reduces Claude API costs by 94.5% by categorizing files into HOT, WARM, and COLD tiers, thus minimizing the number of tokens processed per session. This system, implemented in a tool called cortex-tms, tags files based on their relevance and usage frequency, allowing only the most necessary files to be loaded by default. The approach has been validated through a case study on the author’s project, showing a reduction from 66,834 to 3,647 tokens per session, significantly lowering costs from $0.11 to $0.01 per session with Claude Sonnet 4.5. The tool is open-source and available on GitHub. One commenter inquired about the manual process of tagging files and updating tags, suggesting the use of git history to automate file heat determination. Another user appreciated the approach due to their own struggles with managing API credits.

Illustrious-Report96 suggests using git history to determine file ‘heat’, which involves analyzing the frequency and recency of changes to classify files as ‘hot’, ‘warm’, or ‘cold’. This method leverages version control metadata to automate the classification process, potentially reducing manual tagging efforts.

Accomplished_Buy9342 inquires about restricting access to ‘WARM’ and ‘COLD’ files, which implies a need for a mechanism to control agent access based on file tier. This could involve implementing access controls or modifying the agent’s logic to prioritize ‘HOT’ files, ensuring efficient resource usage.

durable-racoon asks about the process of tagging files and updating these tags, highlighting the importance of an automated or semi-automated system to manage file tiering efficiently. This could involve scripts or tools that dynamically update file tags based on usage patterns or other criteria.
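Illustrious-Report96’s git-history suggestion could look something like the sketch below. It counts how often and how recently each file changed, then buckets each file into HOT, WARM, or COLD. The thresholds and the `classify_heat` helper are hypothetical and are not how cortex-tms tags files. The parser expects output shaped like `git log --name-only --format=%ct`, where a commit timestamp line is followed by the files that commit touched.

```python
from collections import defaultdict
from typing import Dict, List


def parse_git_log(text: str) -> Dict[str, List[int]]:
    """Map each path to the commit timestamps that touched it.

    Expects `git log --name-only --format=%ct` output: a Unix-timestamp line
    per commit, followed by the paths changed in that commit. Blank lines are
    skipped; a path made only of digits would be misread as a timestamp.
    """
    touches: Dict[str, List[int]] = defaultdict(list)
    current_ts = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.isdigit():
            current_ts = int(line)
        elif current_ts is not None:
            touches[line].append(current_ts)
    return dict(touches)


def classify_heat(timestamps: List[int], now: int, hot_days: int = 7,
                  warm_days: int = 30, hot_min_commits: int = 3) -> str:
    """Hypothetical rule: HOT if changed often and recently, WARM if changed
    within warm_days, otherwise COLD."""
    day = 86_400
    recent = [t for t in timestamps if now - t <= hot_days * day]
    if len(recent) >= hot_min_commits:
        return "HOT"
    if any(now - t <= warm_days * day for t in timestamps):
        return "WARM"
    return "COLD"


# Synthetic log: commits 1, 2, 3 and 40 days before `now`
now = 1_700_000_000
day = 86_400
sample = "\n".join([
    str(now - 1 * day), "", "src/core.py", "src/api.py",
    str(now - 2 * day), "", "src/core.py",
    str(now - 3 * day), "", "src/core.py",
    str(now - 40 * day), "", "docs/old.md", "src/core.py",
])
for path, stamps in sorted(parse_git_log(sample).items()):
    print(path, classify_heat(stamps, now))
# docs/old.md COLD, src/api.py WARM, src/core.py HOT
```

To run it against a real repository, the input could come from `subprocess.run(["git", "log", "--name-only", "--format=%ct"], capture_output=True, text=True).stdout`. Re-running the script periodically would also address durable-racoon’s question about keeping tags up to date.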
