In April 2023 we released an episode named “Mapping the future of *truly* open source models” to talk about Dolly, the first open, commercial LLM.

Mike was leading the OSS models team at Databricks at the time. Today, Mike is back on the podcast to give us the “one year later” update on the evolution of large language models and how he’s been using them to build Brightwave, an AI research assistant for investment professionals.

Today they are announcing a $6M seed round (led by Alessio and Decibel!), and sharing some of the learnings from serving customers with >$120B of assets under management in production in the last 4 months since launch.

Losing faith in long context windows

In our recent “Llama3 1M context window” episode we talked about the amazing progress we have made in context window size, but it’s good to remember that Dolly’s original context size was 1,024 tokens, and this was only 14 months ago.

But while input context length has increased, models are still not able to generate very long answers. Mike’s empirical intuition (which matches ours while building smol-podcaster) is that most commercial LLMs, as well as Llama, tend to generate responses of <=1,200 tokens most of the time. While Needle in a Haystack tests will pass with flying colors at most context sizes, the granularity of the summary decreases as the context increases, because the model tries to fit the answer in the same token range rather than returning tokens close to the 4,096 max_output, for example.

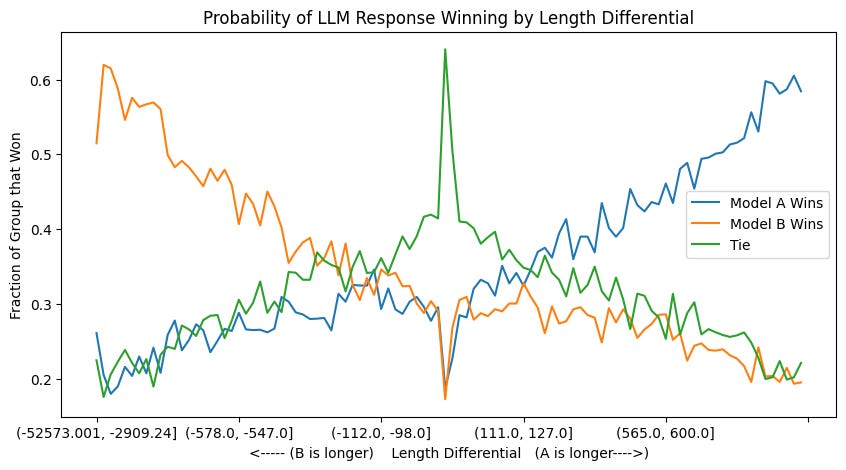

Recently Rob Mulla from Dreadnode highlighted how LMSys Arena results prefer longer responses by a large margin, so both LLMs and humans have a well-documented length bias which doesn’t necessarily track the quality of the answer.

The way Mike and team solved this is by breaking down the task into multiple subtasks, and then merging them back together. For example, have a book summarized chapter by chapter to preserve more details, and then put those summaries together. In Brightwave’s case, it’s creating multiple subsystems that accomplish different tasks on a large corpus of text separately, and then bringing them all together in a report. For example: understanding the intent of the question, extracting relations between companies, figuring out if a statement is positive or negative, etc.
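To make the chapter-by-chapter idea concrete, here is a minimal sketch of that kind of two-stage summarization. It is not Brightwave's implementation: `summarize` is a placeholder for whatever LLM call you use, and the names `split_into_chunks`, `hierarchical_summary`, and the `max_words` default are our own assumptions for illustration.

```python
from typing import Callable, List


def split_into_chunks(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def hierarchical_summary(
    text: str,
    summarize: Callable[[str], str],  # hypothetical: wraps your LLM call
    max_words: int = 2000,            # arbitrary chunk size for illustration
) -> str:
    """Summarize each chunk on its own, then summarize the joined summaries."""
    chunks = split_into_chunks(text, max_words)
    if not chunks:
        return ""
    # Stage 1: each chunk gets its own output budget, so detail is preserved.
    partial = [summarize(chunk) for chunk in chunks]
    if len(partial) == 1:
        return partial[0]
    # Stage 2: merge the per-chunk summaries into one report.
    return summarize("\n\n".join(partial))
```

Because every chunk gets a full response of its own, the total detail retained scales with the number of chunks instead of being capped by a single ~1,200-token answer. Splitting on word count is the crudest option; splitting on chapters or sections, as in the book example, usually fits the material better.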

Mike’s question is whether or not we’ll be able to imbue better synthesis capabilities in the models: can you have synthesis-oriented demonstrations at training time rather than single token prediction?

“LLMs as Judges” Strategies

In our David Luan episode he mentioned they don’t use any benchmarks for their models, because the benchmarks don’t reflect their customer needs. Brightwave shared some tips on leveraging LLMs as Judges:

Human vs LLM reviews: while they work with human annotators to create high quality datasets, that data isn’t just used to fine tune models but also as a reference basis for future LLM reviews. Having a set of trusted data to use as calibration helps you trust the LLM judgement even more.

Ensemble consistency checking: rather than using an LLM as judge for one output, you use different LLMs to generate a result for the same task, and then use another LLM to highlight where those generations differ. Do the two outputs differ meaningfully? Do they have different beliefs about the implications of something? If there are a lot of discrepancies between generations coming from different models, you then do additional passes to try and resolve them.

Entailment verification: for each unique insight that they generate, they take the output and separately ask LLMs to verify the factuality of the information based on the original sources. In the actual product, users can then highlight any piece of text and ask it to 1) “Tell Me More” 2) “Show Sources”. Since there’s no way to guarantee factuality of 100% of outputs, and humans have good intuition for things that look out of the ordinary, giving the user access to the review tool helps them build trust in it.
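The entailment step above can be sketched roughly as follows. This is only an illustration under our own assumptions: `entails` stands in for an LLM or classifier that decides whether a source passage supports a claim, and the `Verdict` type and `verify_claims` function are hypothetical names, not anything Brightwave has described.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Verdict:
    claim: str
    supported: bool
    evidence: List[str]  # source passages the judge accepted as support


def verify_claims(
    claims: List[str],
    sources: List[str],
    entails: Callable[[str, str], bool],  # hypothetical judge: (source, claim) -> bool
) -> List[Verdict]:
    """Check every generated claim against every source passage."""
    verdicts = []
    for claim in claims:
        evidence = [source for source in sources if entails(source, claim)]
        verdicts.append(Verdict(claim, bool(evidence), evidence))
    return verdicts
```

Keeping the supporting passages alongside each verdict is what would let a product surface something like “Show Sources”, and claims with no evidence are the natural candidates for an extra review pass.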

It’s all about the data

During his time at Databricks, they had created dolly-15k, a dataset of instruction-following records written by thousands of their employees. Since then, no other company has replicated that type of effort even though the data wars are in full effect. It’s been clear in the last year that the half-life of a model is much shorter than the half-life of a dataset. The Pile by Eleuther (see Datasets 101) came out in 2020 and is still widely used; if you had trained an LLM in 2020, you would have definitely replaced it by now as they have gotten better and cheaper.

On the age old “RAG v Fine-Tuning” question, Mike shared a great example that we’ll just quote:

I think of language models kind of like a stem cell, and then under fine tuning, they differentiate into different kinds of specific cells. I don't think that unbounded agentic behaviors are useful, and that instead, a useful LLM system is more like a finite state machine where the behavior of the system is occupying one of many different behavioral regimes and making decisions about what state should I occupy next in order to satisfy the goal. As you think about the graph of those states that your system is moving through, once you develop conviction that one behavior is useful and repeatable and worthwhile to differentiate down into a specific kind of subsystem, that's where like fine tuning and specifically generating the training data, like having human annotators produce a corpus that is useful enough to get a specific class of behaviors, that's kind of how we use fine tuning rather than trying to imbue net new information into these systems.
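One way to picture the finite state machine framing is a small loop over named states, where each state's handler does its work and picks the next state. This is our own sketch: the state names, the `run` function, and the `max_steps` bound are assumptions for illustration, and in a real system each handler might be backed by a prompted or fine-tuned model.

```python
from enum import Enum, auto
from typing import Callable, Dict, List


class State(Enum):
    # Hypothetical behavioral regimes; the episode does not list the real ones.
    PARSE_INTENT = auto()
    RETRIEVE = auto()
    SYNTHESIZE = auto()
    DONE = auto()


Handler = Callable[[dict], State]  # does some work on the context, returns the next state


def run(handlers: Dict[State, Handler], context: dict, max_steps: int = 20) -> List[State]:
    """Step through states until DONE or until max_steps handlers have run."""
    state = State.PARSE_INTENT
    trace = [state]
    for _ in range(max_steps):
        if state is State.DONE:
            break
        state = handlers[state](context)
        trace.append(state)
    return trace
```

The step bound is what keeps the behavior from becoming unbounded, and the returned trace makes each run observable. A state whose behavior proves useful and repeatable is the kind of subsystem the quote suggests differentiating through fine-tuning.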

There are a lot of other nuggets in the episode around knowledge graphs extraction, private vs public data, user intent extraction, etc, but we only have so much room in the writeup so go listen! And if you’re interested in working on these problems, Brightwave is hiring 👀

Watch on YouTube

We like Mike. The camera likes Mike. Our audience loooves Mike.

Show Notes

Timestamps

[00:00:00] Introductions

[00:02:40] Social media's polarization influence on LLMs

[00:04:09] What's Brightwave?

[00:05:13] How to hire for a vertical AI startup

[00:09:34] How $20B+ hedge funds use Brightwave

[00:11:23] Evolution of context sizes in language models

[00:14:36] Summarizing vs Ideating with AI

[00:18:26] Collecting feedback in a field with no truth

[00:20:49] Evaluation strategies and the importance of custom datasets

[00:23:43] Should more companies make employees label data?

[00:25:32] Retrieval for highly temporal and hierarchical data

[00:30:05] Context-aware prompting for private vs. public data

[00:32:01] Knowledge graph extraction and structured information retrieval

[00:33:49] Fine-tuning vs RAG

[00:36:16] Anthropomorphizing language models

[00:38:20] Why Brightwave doesn't do spreadsheets

[00:42:24] Will there be fully autonomous hedge funds?

[00:47:58] State of open source AI

[00:53:53] Hiring and team expansion at Brightwave

Transcript

Alessio [00:00:01]: Hey everyone, welcome to the Latent Space Podcast. This is Alessio, partner and CTO in Residence at Decibel Partners, and I have no co-host today. Swyx is in Vienna at ICLR having fun in Europe, and we're in the brand new studio. As you might see, if you're on YouTube, there's still no sound panels on the wall. Mike tried really hard to put them up, but the glue is a little too old for that. So if you hear any echo or anything like that, sorry, but we're doing the best that we can. And today we have our first repeat guest, Mike Conover. Welcome Mike, who's now the founder of Brightwave, not Databricks anymore.

Mike [00:00:40]: That's right. Yeah. Pleased to be back.

Alessio [00:00:42]: Our last episode was one of the fan favorites, and I think this will be just as good. So for those that have not listened to the first episode, which might be many because the podcast has grown a lot since then, thanks to people like Mike who have interesting conversations on it. You spent a bunch of years doing ML at some of the best companies on the internet, things like Workday, you know, Skipflag, LinkedIn, most recently at Databricks where you were leading the open source large language models team working on Dolly. And now you're doing Brightwave, which is in the financial services space. But this is not something new, I think when you and I first talked about Brightwave, I was like, why is this guy doing a financial services company? And then you look at your background and you were doing papers on The Nature Magazine about LinkedIn data predicting S&P 500 stock movement, like many, many years ago. So what are some of the tying elements in your background that maybe people are overlooking that brought you to do this?

Mike [00:01:36]: Yeah, sure. Yeah. So my PhD research was funded by DARPA and we had access to the Twitter data set early in the natural history of the availability of that data set, and it was focused on the large scale structure of propaganda and misinformation campaigns. And at LinkedIn, we had planet scale descriptions of the structure of the global economy. And so primarily my work was homepage news feed relevance. So when you go to LinkedIn.com, you'd see updates from one of our machine learning models. But additionally, I was a research liaison as part of the economic graph challenge and had this Nature Communications paper where we demonstrated that 500 million job transitions can be hierarchically clustered as a network of labor flows and could predict next quarter S&P 500 market cap changes. And at Workday, I was director of financials machine learning. You start to see how organizations are organisms. And I think of the way that like an accountant or the market encodes information in databases similar to how social insects, for example, organize their work and make collective decisions about where to allocate resources or time and attention. And that especially with the work on Twitter, we would see network structures relating to polarization emerge organically out of the interactions of many individual components. And so like much of my professional work has been focused on this idea that our lives are governed by systems that we're unable to see from our locally constrained perspective. And when humans interact with technology, they create digital trace data that allows us to observe the structure of those systems as though through a microscope or a telescope. And particularly as regards finance, I think the markets are the ultimate manifestation and record of that collective decision making process that humans engage in.

Alessio [00:03:21]: Just to start going off script right away, how do you think about some of these interactions creating the polarization and how that reflects in the language models today because they're trained on this data? Like do you think the models pick up on these things on their own as well?

Mike [00:03:34]: Absolutely. Yeah. I think they are a compression of the world as it existed at the point in time when they were pre-trained. And so I think absolutely. And you see this in Word2Vec too. I mean, just the semantics of how we think about gender as it relates to professions are encoded in the structure of these models and like language models, I think are much more sort of complete representation of human sort of beliefs.

Alessio [00:04:01]: So we left you at Databricks last time you were building Dolly. Tell us a bit more about Brightwave. This is the first time you're really talking about it publicly.

Mike [00:04:09]: Yeah. Yeah. And it's a pleasure. So Brightwave is a $6 million seed round, led by Decibel, that we love working with, and including participation from Point72, one of the largest hedge funds in the world and Moonfire Ventures. And if you think of the job of an active asset manager, the work to be done is to understand something about the market that nobody else has seen in order to identify a mispriced asset. And it's our view that that is not a task that is well suited to human intellect or attention span. And so much as I was gesturing towards the ability of these models to perceive more than a human is able to, we think that there's a historically unique opportunity to expand individual's ability to reason about the structure of the economy and the markets. It's not clear that you get superhuman reasoning capabilities from human level demonstrations of skill. And by that I mean the pre-training corpus, but then additionally the fine tuning corpuses. I think you largely mimic the demonstrations that are present at model training time. But from a working memory standpoint, these models outclass humans in their ability to reason about these systems.

Alessio [00:05:13]: And you started Brightwave with Brandon. What's the story? You two worked together at Workday, but he also has a really relevant background.

Mike [00:05:20]: Yes. So Brandon Kotara is my co-founder, the CTO, and he's a very special human. So he has a deep background in finance. He was the former CTO of a federally regulated derivatives exchange, but his first deep learning patent was filed in 2018. And so he spans worlds. He has experience building mission critical infrastructure in highly regulated environments for finance use cases, but also was very early to the deep learning party and understand. He led at Workday, was the tech lead for semantic search over hundreds of millions of resumes and job listings. And so just has been working with information retrieval and neural information retrieval methods for a very long time. And so was an exceptional person, and I'm glad to count him among the people that we're doing this with.

Alessio [00:06:07]: Yeah. And a great fisherman.

Mike [00:06:09]: Yeah. Very talented.

Alessio [00:06:11]: That's always important.

Mike [00:06:12]: Very enthusiastic.

Alessio [00:06:13]: And then you have a bunch of amazing engineers, then you have folks like JP who used to work at Goldman Sachs. Yeah. How should people think about team building in this more vertical domain? Obviously you come from a deep ML background, but you also need some of the industry side. What's the right balance?

Mike [00:06:28]: I think one of the things that's interesting about building verticalized solutions in AI in 2024 is that historically, you need the AI capability, you need to understand both how the models behave and then how to get them to interact with other kinds of machine learning subsystems that together perform the work of a system that can reason on behalf of a human. There are also material systems engineering problems in there. So I saw, I forget who this is attributed to, but a tweet that made reference to all of the traditional software companies are trying to hire AI talent and all the AI companies are trying to hire systems engineers, and that is 100% the case. Getting these systems to behave in a predictable and repeatable and observable way is equally challenging to a lot of the methodological challenges. But then you bring in, whether it's law or medicine or public policy or in our case finance, I think a lot of the most valuable, like Grammarly is a good example of a company that has generative work product that is evaluable by most humans. Whereas in finance, the character of the insight, the depth of insight and the non-consensusness of the insight really requires fairly deep domain expertise. And even operating an exchange, I mean, when we went to raise a round, a lot of people said, why don't you start a hedge fund? And it's like, there are many, many separate skills that are unrelated to AI in that problem. And so we've brought into the fold domain experts in finance who can help us evaluate the character and sort of steer the system.

Alessio [00:07:59]: So that's the team. What does the system actually do? What's the Brightwave product?

Mike [00:08:03]: Yeah. I mean, it does many, many things, but it acts as a partner in thought to finance professionals. So you can ask Brightwave a question like, how is NVIDIA's position in the GPU market impacted by rare earth metal shortages? And it will identify as thematic contributors to an investment decision or developing your thesis that in response to export controls on A100 cards, China has put in place licensure on the transfer of germanium and gallium, which are not rare earth metals, but they're semiconductor production inputs and has expanded its control of African and South American mining operations. And so we see, if you think about, we have a $20 billion crossover hedge fund. Their equities team uses this tool to go deep on a thesis. So I was describing this like multiple steps into the value chain or supply chain for companies. We see wealth management professionals using Brightwave to get up to speed extremely quickly as they step into nine conversations tomorrow with clients who are assessing like, do you know something that I don't? Can I trust you to be a steward of my financial wellbeing? We see investor relations teams using Brightwave. You just think about the universe of coverage that a person working in finance needs to be aware of, the ability to rip through filings and transcripts and have a very comprehensive view of the market. It's extremely rate limited by how quickly a person is able to read and not just read, but like solve the blank page problem of knowing what to say about a fact or finding.

Alessio [00:09:34]: So you mentioned the $20 billion hedge fund. What's like the range of customers that you work with as far as AUM goes?

Mike [00:09:41]: I mean, we have customers across the spectrum. So from $500 million owner operated RIAs to organizations with tens and tens of billions of dollars in assets under management.

Alessio [00:09:52]: What else can you share about customers that you're working with?

Mike [00:09:55]: Yeah. So we have seen traction that far exceeded our expectations from the market. You sit somebody down with a system that can take any question and generate tight, actionable financial analysis on that subject and the product kind of sells itself. So we see many, many different funds, firms, and strategies that are making use of Brightwave. So you've got 10 person owner operated registered investment advisor, the classical wealth manager, you know, $500 million in AUM. We have crossover hedge funds that have tens and tens of billions of dollars in assets under management, very different use case. So that's more investment research, whereas the wealth managers can use this to step into client interactions, just exceptionally well prepared. We see investor relations teams. We see corporate strategy types that are needing to understand very quickly new markets, new themes, and just the ability to very quickly develop a view on any investment theme or sort of strategic consideration is broadly applicable to many, many different kinds of personas.

Alessio [00:10:56]: Yeah. I can attest to the product selling itself, given that I'm a user. Let's jump into some of the technical challenges and work behind it, because there are a lot of things. As I mentioned, you were on the podcast about a year ago. Yep. You had released Dolly from Databricks, which was one of the first open source LLMs. Yep. Dolly had a whopping 1,024 tokens of context size. And today, you know, I think a thousand tokens, a model would be unusable.

Mike [00:11:23]: You lose that much out.

Alessio [00:11:24]: Yeah, exactly. How did you think about the evolution of context sizes as you built the company and where we are today? What are things that people get wrong? Any commentary there?

Mike [00:11:34]: Sure. We very much take a systems of systems approach. When I started the company, I think I had more faith in the ability of large context windows to generally solve problems relating to synthesis. And actually, if you think about the attention mechanism and the way that it computes similarities between tokens at a distance, I, on some level, believed that as you would scale that up, you would have the ability to simultaneously perceive and draw conclusions across vast, disparate bodies of content. And I think that does not empirically seem to be the case. So when, for example, you, and this is something anybody can try, take a very long document, like needle in a haystack. I think, sure, we can do information retrieval on specific fact-finding activities pretty easily. I kind of think about it like summarizing, if you write a book report on an entire book versus a synopsis of each individual chapter, there is a characteristic output length for these models. Let's say it's about 1,200 tokens. It is very difficult to get any of the commercial LLMs or Llama to write 5,000 tokens. And you think about it as, what is the conditional probability that I generate an end token? It just gets higher the more tokens are in the context window prior to that sort of next inference step. And so if I have 1,000 words in which to say something, the level of specificity and the level of depth when I am assessing a very large body of content is going to necessarily be less than if I am saying something specific about a sub-passage. I mean, if you think about drawing a parallel to consumer internet companies like LinkedIn or Facebook, there are many different subsystems within it. So let's take the Facebook example. Facebook almost certainly has, I mean, you can see this in your profile, your inferred interests. What are the things that it believes that you care about?
Those assessments almost certainly feed into the feed relevance algorithms that would judge what you are, you know, am I going to show you snowboarding content? I'm going to show you aviation content. It's the outputs of one machine learning system feeding into another machine learning system. And I think with modern RAG and sort of agent-based reasoning, it is really about creating subsystems that do specific tasks well. And I think the problem of deciding how to decompose large documents into more kind of atomic reasoning units is still very important. Now, it's an open question whether that is a problem that is addressable by pre-training or instruction tuning. Like, can you have synthesis-oriented demonstrations at training time? And now this problem is more robustly solved because synthesis is quite different from complete the next word in The Great Gatsby. I think empirically it is not the case that you can just throw all of the SEC filings in a million token context window and get deep insight that is useful out the other end.

Alessio [00:14:36]: Yeah. And I think that's the main difference about what you're doing. It's not about summarizing. It's about coming up with different ideas and kind of like thought threads to pull on.

Mike [00:14:47]: Yeah. You know, if I think that GLP-1s are going to blow up the diet industry, identifying and putting in context a negative result from a human clinical trial, or for example, that adherence rates to Ozempic after a year are just 35%, what are the implications of this? So there's an information retrieval component. And then there's a not just presenting me with a summary of like, here's here are the facts, but like, what does this entail? And how does this fit into my worldview, my fund strategy? Broadly, I think that, you know, I mean, this idea, I think, is very eloquently puts it, which is, and this is not my insight, but that language models, and help me know who said this. You may be familiar, but language models are not tools for creating new knowledge. They're tools for helping me create new knowledge. Like they themselves do not do that. I think that that's presently the right way to think about it.

Alessio [00:15:36]: Yeah. I've read a tweet about Needle in the Haystack actually being harmful to some of this work because now the model is like too focused on recalling everything versus saying, oh, that doesn't matter. Like ignoring some of the things, if you think about an S-1 filing, like 85% is like boilerplate. It's like, you know, previous performance doesn't guarantee future performance. Like the company might not be able to turn a profit in the future, blah, blah, blah. All these things, they always come up again.

Mike [00:16:02]: COVID and currency fluctuations.

Alessio [00:16:03]: Yeah, yeah, yeah. Yada, yada, yada. We have a large workforce and all of that. Have you had to do any work at the model level to kind of like make it okay to forget these things? Or like have you found that making it a smaller problem then putting them back together kind of solves for that?

Mike [00:16:19]: Absolutely. And I think this is where having domain expertise around the structure of these documents. So if you look at the different chunking strategies that you can employ to understand like what is the intent of this clause or phrase, and then really be selective at retrieval time in order to get the information that is most relevant to a user query based on the semantics of that unique document. And I think it's certainly not just a sliding window over that corpus.
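As a rough illustration of chunking by document structure rather than by a sliding window, the sketch below splits a filing on its item headings. It assumes plain text where headings look like "Item 1A. Risk Factors" at the start of a line; real filings are messier, and the `split_by_item` helper and its regular expression are our own assumptions, not Brightwave's method.

```python
import re
from typing import Dict

# Matches heading lines such as "Item 1A. Risk Factors" (assumed plain-text layout).
ITEM_HEADING = re.compile(r"^Item[ \t]+(\d+[A-Z]?)\.[ \t]*.*$", re.MULTILINE)


def split_by_item(filing_text: str) -> Dict[str, str]:
    """Map each item label (e.g. "1A") to the body text under that heading."""
    matches = list(ITEM_HEADING.finditer(filing_text))
    sections = {}
    for i, match in enumerate(matches):
        # A section runs from the end of its heading to the start of the next one.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(filing_text)
        sections[match.group(1)] = filing_text[match.end():end].strip()
    return sections
```

With sections labeled this way, retrieval can treat boilerplate-heavy parts such as risk factors differently from the parts that carry the substance of a user's query.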

Alessio [00:16:45]: And then the flip side of it is obviously factuality. You don't want to forget things that were there. How do you tackle that?

Mike [00:16:52]: Yeah, I mean, of course, it's a very deep problem. And I think I'll be a little circumspect about the specific kinds of methods we use. This sort of multiple passes over the material and saying, how convicted are you that what you're saying is in fact true? And you can take generations from multiple different models and compare and contrast and say, do these both reach the same conclusion? You can treat it like a voting problem. We train our own models to assess. You can think of this like entailment. Is this supported by the underlying primary sources? And I think that you have methodological approaches to this problem, but then you also have product affordances. There was a great blog post on Bard from the Bard team. It was sort of a design-led product innovation that allows you to ask the model to double-check the work. So if you have a surprising finding, we can let the user discretionarily spend more compute to double-check the work. And I think that you want to build product experiences that are fault tolerant. And the difference between hallucination and creativity is fuzzy. Do you ever get language models with Next Token Prediction as the loss function that are guaranteed to not contain factual misstatements? That is not clear. Now, maybe being able to invoke Code Interpreter, like code generation and then execution in a secure way, helps to solve some of these problems, especially for quantitative reasoning. That may be the case, but for right now, I think you need to have product affordances that allow you to live with the reality that these things are fallible.
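The "treat it like a voting problem" idea can be sketched as a simple majority vote over short verdicts from several models. This assumes each model has already been asked to answer with a comparable label (for example "supported" or "unsupported"), since free-form text cannot be compared by string equality; the `majority_vote` function and its `min_agreement` threshold are hypothetical.

```python
from collections import Counter
from typing import List, Tuple


def majority_vote(answers: List[str], min_agreement: float = 0.5) -> Tuple[str, float, bool]:
    """Return (top answer, its share of the votes, whether the share exceeds min_agreement)."""
    if not answers:
        raise ValueError("need at least one answer")
    # Normalize so that "Yes" and "yes " count as the same vote.
    normalized = [answer.strip().lower() for answer in answers]
    top, count = Counter(normalized).most_common(1)[0]
    share = count / len(normalized)
    return top, share, share > min_agreement
```

When the flag comes back false, that is the signal to spend more compute: run additional passes, or hand the finding to the user to double-check.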

Alessio [00:18:26]: We did our RLHF 201 episode, just talking about different methods and whatnot. How do you think about something like this, where it's maybe unclear in the short term, even if the product is right? It might give an insight that might be right, but it might not prove until later. So it's kind of hard for the users to say, that's wrong, because actually it might be like, you think it's wrong. Like an investment, that's kind of what it comes down to. Some people are wrong. Some people are right. How do you think about some of the product features that you need and something like this to bring user feedback into the mix and maybe how you approach it today and how you think about it long term?

Mike [00:19:01]: Yeah, well, I mean, I think that your point about the model may make a statement which is not actually verifiable. It's like, this may be the case. I think that is where the reason we think of this as a partner in thought, is that humans are always going to have access to information that has not been digitized. And so in finance, you see that, especially with regards to expert call networks, the unstated investment theses that a portfolio manager may have, like, we just don't do biotech. Or we think that Eli Lilly is actually very exposed because of how unpleasant it is to take examples. Right. Those are things that are beliefs about the world, but that may not be like falsifiable right now. And so I think you can, again, take pages from the consumer web playbook and think about personalization. So it is getting a person to articulate everything that they believe is not a realistic task. Netflix doesn't ask you to describe what kinds of movies you like and they give you the option to vote, but nobody does this. And so what I think you do is you observe people's revealed preferences. So one of the capabilities that our system exposes is, given everything that Brightwave has read and assessed, and like the sort of synthesized financial analysis, what are the natural next questions that a person investigating this subject should ask? And you can think of this chain of thought and this deepening kind of investigative process and the direction in which the user steers the attention of this system reveals information about what do they care about, what do they believe, what kinds of things are important. And so at the individual level, but then also at the fund and firm level, you can develop like an implicit representation of your beliefs about the world in a way that you just you're never going to get somebody to write everything down.

Alessio [00:20:49]: How does that tie into one of our other favorite topics, evals? We had David Luan from Adept and he mentioned they don't care about benchmarks because their customers don't work on benchmarks, they work on business results. How do you think about that for you? And maybe as you build a new company, when is the time to like still focus on the benchmark versus when it's time to like move on to your own evaluation using maybe labelers or whatnot?

Mike [00:21:14]: We use a fair bit of LLM supervision to evaluate multiple different subsystems. And I think that one of the reasons that we pay human annotators to evaluate the quality of the generative outputs, and I think that that is always the reference standard, but we frequently first turn to LLM supervision as a way to have, whether it's at fine-tuning time or even for subsystems that are not generative, what is the quality of the system? I think we will generate a small corpus of high-quality domain expert annotations and always compare that against how well is either LLM supervision or even just a heuristic. A simple thing you can do, this is a technique that we do not use, but as an example, do not generate any integers or any numbers that are not present in the underlying source data. If they're doing RAG, you can just say you can't name numbers that are not, it's very sort of heavy-handed, but you can take the annotations of a human evaluator and then compare that. I mean, Snorkel kind of takes a similar perspective, like multiple different weak sort of supervision data sets can give you substantially more than any one of them does on their own. And so I think you want to compare the quality of any evaluation against human-generated sort of benchmark. But at the end of the day, especially for things that are nuanced, is this transcendent poetry, there's just no way to multiple choice your way out of that, you know? And so really where I think a lot of the flywheels for some of the large LLM companies are, it's methodological, obviously, but it's also just data generation. And you think about like, you know, for anybody who's done crowdsource work, and this I think applies to the high-skilled human annotators as well, like you look at the Google search quality evaluator guidelines, it's like a 90 or 120-page rubric describing like, what is a high-quality Google search result?
And it's like very difficult to get on a human level people to reproducibly follow a rubric. And so what is your process for orchestrating that motion? Like how do you articulate what is high-quality insight? I think that's where a lot of the work actually happens, and that it's sort of the last resort. Ideally, you want to automate everything, but ultimately the most interesting problems right now are those that are not especially automatable.
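The number heuristic Mike mentions as an example (and explicitly says Brightwave does not use) is easy to sketch: flag any number in a generated answer that never appears in the retrieved sources. The function names and the regular expression below are our own assumptions, and the matching is deliberately crude; it ignores units, rounding, and numbers written as words.

```python
import re
from typing import List, Set

# Integers or decimals, with optional thousands separators (e.g. 1,200 or 35.5).
NUMBER = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?")


def extract_numbers(text: str) -> Set[str]:
    """Collect the numbers in text, with thousands separators removed."""
    return {match.replace(",", "") for match in NUMBER.findall(text)}


def ungrounded_numbers(generated: str, sources: List[str]) -> List[str]:
    """Return the numbers in the generated text that appear in none of the sources."""
    source_numbers: Set[str] = set()
    for source in sources:
        source_numbers |= extract_numbers(source)
    return sorted(extract_numbers(generated) - source_numbers)
```

A heuristic like this is the kind of weak signal you would score against a small set of expert annotations before trusting it, in the spirit of the comparison described above.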

Alessio [00:23:43]: One thing you did at Databricks was the, well, not that you did specifically, but the team there was like the Dolly 15K dataset. You mentioned people misvalue the value of this data. Why has no other company done anything similar with like creating this employee-led dataset? You can imagine some of these Goldman Sachs, they got like thousands and thousands of people in there. Obviously they have different privacy and whatnot requirements. Do you think more companies should do it? Do you think there's like a misunderstanding of how valuable that is?

Mike [00:24:15]: So I think Databricks is a very special company and led by people who are very sort of courageous, I guess is one word for it. Just like, let's just ship it. And I think it's unusual. And it's also because I think most companies will recognize, like if they go to the effort to produce something like that, they recognize that it is a competitive advantage to have it and to be the only company that has it. And I think Databricks is in an unusual position in that they benefit from more people having access to these kinds of sources, but you also saw Scale, I guess they haven't released it.

Alessio [00:24:49]: Well, yeah. I'm sure they have it because they charge people a lot of money.

Mike [00:24:51]: They created that alternative to GSM8K, I believe is how that's said. I guess they too are not releasing that.

Alessio [00:25:01]: It's interesting because I talked to a lot of enterprises and a lot of them are like, man, I spent so much money on Scale. And I'm like, why don't you just do it? And they're like, what?

Mike [00:25:11]: So I think this again gets to the human process orchestration. It's one thing to do like a single monolithic push to create a training data set like that or an evaluation corpus. But I think it's another to have a repeatable process. And a lot of that realistically is pretty unsexy, like people management work. So that's probably a big part of it.

Alessio [00:25:32]: So we have these four wars of AI framework. The data quality war, we kind of touched on a little bit now. RAG, that's like the other battlefield, RAG and context sizes and kind of like all these different things. You work in a space that has a couple of different things. One, temporality of data is important because every quarter there's new data and like the new data usually overrides the previous one. So you cannot just like do semantic search and hope that you get the latest one. And then you have obviously very structured numbers that are very important at the token level. Like, you know, 50% gross margins and 30% gross margins are very different, but you know, the tokenization is not that different. Any thoughts on like how to build a system to handle all of that, as much as you can share, of course?

Mike [00:26:19]: Yeah, absolutely. So I think this again, rather than having open ended retrieval, open ended reasoning, our approach is to decompose the problem into multiple different subsystems that have specific goals. And so, I mean, temporality is a great example. When you think about time, I mean, just look at all of the libraries for managing calendars. Time is kind of at the intersection of language and math. And this is one of the places where, without taking specific technical measures to ensure that you get high quality narrative overlays of statistics that are changing over time and have a description of how a PE multiple is increasing or decreasing, and like a retrieval system that is aware of the time, sort of the time intent of the user query, right? So if I'm asking something about breaking news, that's going to be very different than if I'm looking for a thematic account of the past 18 months in Fed interest rate policy. You have to have retrieval systems that are, to your point, like if I just look for something that is a nearest neighbor without any of that temporal or other qualitative metadata overlay, you're just going to get a kind of a bag of facts. I think that that is explicitly not helpful, because the worst failure state for these systems is that they are wrong in a convincing way. And so I think, at least presently, you have to have subsystems that are aware of the semantics of the documents, or aware of the semantics of the intent behind the question, and then have multiple, we have multiple evaluation steps. Once you have the generated outputs, we assess it multiple different ways to know, is this a factual statement given the sort of content that's been retrieved?
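
One way to picture retrieval that is aware of the time intent of a query is to re-rank semantic search hits with a recency decay whose strength depends on that intent. The sketch below is an illustration under stated assumptions, not Brightwave's system: the `Doc` fields, the two intent labels, and the half-life values are hypothetical, and the similarity scores are assumed to come from an upstream nearest-neighbor search.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Doc:
    text: str
    published: date
    similarity: float  # assumed output of an upstream semantic search


# Hypothetical half-lives in days: breaking news decays fast, thematic slowly.
HALF_LIFE_DAYS = {"breaking_news": 2, "thematic": 540}


def rerank(docs: list[Doc], today: date, time_intent: str) -> list[Doc]:
    """Order documents by similarity weighted by exponential recency decay."""
    half_life = HALF_LIFE_DAYS[time_intent]

    def score(doc: Doc) -> float:
        age_days = (today - doc.published).days
        return doc.similarity * 0.5 ** (age_days / half_life)

    return sorted(docs, key=score, reverse=True)
```

With a short half-life, a fresh but less similar document outranks an older, closer match; with a long half-life, the ordering stays near the raw similarity ranking.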

Alessio [00:28:10]: Yep. And what about, I think people think of financial services, they think of privacy, confidentiality. What's kind of like customer's interest in that, as far as like sharing documents and like, how much of a deal breaker is that if you don't have them? I don't know if you want to share any about that and how you think about architecting the product.

Mike [00:28:29]: Yeah, so one of the things that gives our customers a high degree of confidence is the fact that Brandon operated a federally regulated derivatives exchange. That experience in these highly regulated environments, I mean, additionally, at Workday, I worked with the financials product, and without going into specifics, it's exceptionally sensitive data, and you have multiple tenants, and it's just important that you take the right approach to being a steward of that material. And so, from the start, we've built in a way that anticipates the need for controls on how that data is managed, and who has access to it, and how it is treated throughout the lifecycle. And so that, for our customer base, where frequently the most interesting and alpha-generating material is not publicly available, has given them a great degree of confidence in sharing some of this, the most sensitive and interesting material, with systems that are able to combine it with content that is either publicly or semi-publicly available, to create non-consensus insight into some of the most interesting and challenging problems in finance.

Alessio [00:29:40]: Yeah, we always say RAG is recommendation systems for LLMs. How do you think about that when you have private versus public data, where sometimes you have public data as one thing, but then the private is like, well, actually, we got this insight model, with this inside scoop that we're going to figure out. How do you think in the RAG system about the value of these different documents? I know a lot of it is secret sauce, but-

Mike [00:30:05]: No, no, it's fine. I mean, I think that there is, so I will gesture towards this by way of saying context-aware prompting. So you can have prompts that are composable, and that have different command units that may or may not be present based on the semantics of the content that is being populated into the RAG context window. And so that's something we make great use of, which is, where is this being retrieved from? What does it represent? And what should be in the instruction set in order to treat and respect the underlying contents, not just as like, here's a bunch of text, you figure it out, but this is important in the following way, or this aspect of the SEC filings is just categorically uninteresting, or this is sell-side analysis from a favored source. And so, much like you have the problem of organizing the work of humans, you have the problem of organizing the work of all of these different AI subsystems, and getting them to propagate what they know through the rest of the stack, so that if you have multiple, say seven or 10, sequential inference calls, all of the relevant metadata is propagated through that system, and you are aware of, where did this come from? How convicted am I that it is a source that should be trusted? I mean, you see this also just in analysis, right? So, like Seeking Alpha is a good example of just a lot of people with opinions, and some of them are great, some of them are really mid, and how do you build a system that is aware of the user's preferences for different sources? I think this is all related to how, we talked about systems engineering, it's all related to how you actually build the systems.
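
The idea of composable prompts, where instruction units are present or absent depending on what has been retrieved, can be sketched roughly like this. The source-type labels and the wording of each instruction unit are hypothetical, invented here for illustration; the only point is that the prompt is assembled from metadata about the passages rather than written once for all inputs.

```python
# Hypothetical instruction units keyed by the source type of a passage.
INSTRUCTION_UNITS = {
    "sec_filing": "Treat SEC filings as authoritative for reported figures.",
    "sell_side": "This is sell-side analysis; present it as attributed opinion.",
    "private": "This material is private to the user; do not quote it verbatim.",
}


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Assemble a prompt from (source_type, text) passages.

    An instruction unit is included once, and only if a passage of that
    source type is actually present in the context.
    """
    present: list[str] = []
    for source_type, _ in passages:
        if source_type in INSTRUCTION_UNITS and source_type not in present:
            present.append(source_type)

    lines = [INSTRUCTION_UNITS[source_type] for source_type in present]
    # Keep the source label attached to each passage so that provenance
    # metadata travels with the text into later inference calls.
    lines += [f"[{source_type}] {text}" for source_type, text in passages]
    lines.append(f"Question: {question}")
    return "\n".join(lines)
```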

Alessio [00:31:51]: And then, just to kind of wrap on the RAG side, how should people think about knowledge graphs and kind of like extraction from documents, versus just like semantic search over the documents?

Mike [00:32:01]: Knowledge graph extraction is an area where we're making a pretty substantial investment, and so I think that it is underappreciated how powerful, there's the generative capabilities of language models, but there's also the ability to program them to function as arbitrary machine learning systems, basically for marginally zero cost. And so, the ability to extract structured information from huge, sort of unfathomably large bodies of content in a way that is single pass, so rather than having to reanalyze a document every time that you perform inference or respond to a user query, we believe quite firmly that you can also, in an additive way, perform single pass extraction over this body of text and then bring that into the RAG context window. And this really sort of levers off of my experience at LinkedIn, where you had this structured graph representation of the global economy, where you said, person A works at company B, we believe that there's an opportunity to create a knowledge graph that has resolution that greatly exceeds what any, whether it's Bloomberg or LinkedIn, currently has access to, where we're getting as granular as person X submitted congressional testimony that was critical of organization Y, and this is the language that is attached to that testimony, and then you have a structured data artifact that you can pivot through and reason over that is complementary to the generative capabilities that language models expose. And so it's the same technology being applied to multiple different ends. And this is manifest in the product surface, where it's a highly facetable, pivotable product, but it also enhances the reasoning capability of the system.
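
To make the "extract once, pivot many times" idea concrete, here is a minimal sketch of a structured store for extracted facts. It assumes an upstream single-pass extraction step (not shown) that emits subject, relation, object triples with the supporting language attached; the `TripleStore` class and its method names are hypothetical.

```python
from collections import defaultdict


class TripleStore:
    """Tiny in-memory store for (subject, relation, object, evidence) facts."""

    def __init__(self) -> None:
        self._index: dict[str, list[tuple[str, str, str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str, evidence: str = "") -> None:
        triple = (subject, relation, obj, evidence)
        # Index under both ends so you can pivot from either entity.
        self._index[subject].append(triple)
        self._index[obj].append(triple)

    def about(self, entity: str) -> list[tuple[str, str, str, str]]:
        """Return every stored fact that mentions the entity."""
        return list(self._index.get(entity, []))


kg = TripleStore()
kg.add("Person X", "criticized", "Organization Y", evidence="congressional testimony")
kg.add("Person A", "works_at", "Company B")
```

Facts returned by `about` could then be placed into the RAG context window alongside retrieved passages, without re-reading the source documents at query time.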

Alessio [00:33:49]: Yeah, you know, when you mentioned you don't wanna re-query like the same thing over and over, a lot of people may say, well, I'll just fine tune this information in the model, you know? How do you think about that? That was one thing when we started working together, you were like, we're not building foundation models. A lot of other startups were like, oh, we're building the finance financial model, the finance foundation model, or whatever. When is the right time for people to do fine tuning versus RAG? Any heuristics that you can share that you use to think about it?

Mike [00:34:19]: So, in general, I'll just say like, I don't have a strong opinion about how much information you can imbue into a model that is not present in pre-training through large-scale fine tuning. The benefit of RAG is the capability around grounded reasoning. So the, you know, forcing it to attend to a collection of facts that are known and available at inference time, and sort of like materially, like only using these facts. At least in my view, the role of fine tuning is really more around, I think of like language models kind of like a stem cell, and then under fine tuning, they differentiate into different kinds of specific cells, so a kidney or an eye cell. And if you think about specifically, like, I don't think that unbounded agentic behaviors are useful, and that instead, a useful LLM system is more like a finite state machine where the behavior of the system is occupying one of many different behavioral regimes and making decisions about what state should I occupy next in order to satisfy the goal. As you think about the graph of those states that your system is moving through, once you develop conviction that one behavior is useful and repeatable and worthwhile to differentiate down into a specific kind of subsystem, that's where like fine tuning and like specifically generating the training data, like having human annotators produce a corpus that is useful enough to get a specific class of behaviors, that's kind of how we use fine tuning rather than trying to imbue net new information into these systems.
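
The contrast between unbounded agentic behavior and a finite state machine can be sketched with an explicit transition table. The state and signal names below are hypothetical and chosen only for illustration; in a real system each state might be backed by an LLM call, a classical model, or plain code, and the signal would come from that subsystem's output.

```python
# Hypothetical behavioral regimes and the signals that move between them.
TRANSITIONS = {
    "understand_intent": {"needs_data": "retrieve", "unclear": "ask_clarification"},
    "retrieve": {"found": "draft", "empty": "ask_clarification"},
    "draft": {"passes_checks": "done", "fails_checks": "retrieve"},
}


def step(state: str, signal: str) -> str:
    """Return the next state, refusing any transition not in the table."""
    try:
        return TRANSITIONS[state][signal]
    except KeyError:
        raise ValueError(f"no transition from {state!r} on {signal!r}") from None


def run(signals: list[str], start: str = "understand_intent") -> str:
    """Walk the machine through a sequence of signals and return the end state."""
    state = start
    for signal in signals:
        state = step(state, signal)
    return state
```

Because every allowed move is enumerated, a state that proves useful and repeatable is easy to single out as a candidate for its own fine-tuned subsystem.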

Alessio [00:36:00]: Yeah, and people always try to turn LLMs into humans. It's like, oh, this is my reviewer, this is my editor. I know you're not in that camp. So any thoughts you have on how people should think about, yeah, how to refer to models?

Mike [00:36:16]: I mean, we've talked a little bit about this, and it's notable that I think there's a lot of anthropomorphizing going on, and that it reflects the difficulty of evaluating the systems. Is it like, does the saying that you're the journal editor for Nature, does that help? Like you've got the editor, and then you've got the reviewer and you've got the, you're the private investigator. It's like, this is, I think, literally we wave our hands and we say, maybe if I tell you that I'm gonna tip you, that's gonna help. And it sort of seems to, and like maybe it's just like the more cycles, the more compute that is attached to the prompt and then the sort of like chain of thought at inference time, it's like, maybe that's all that we're really doing and that it's kind of like hidden compute. But our experience has been that you can get really, really high quality reasoning from roughly an agentic system without needing to be too cute about it. You can describe the task and within well-defined bounds, you don't need to treat the LLM like a person in order to get it to generate high quality outputs.

Alessio [00:37:24]: And the other thing is like all these agent frameworks are assuming everything is an LLM.

Mike [00:37:29]: Yeah, for sure. And I think this is one of the places where traditional machine learning has a real material role to play in producing a system that hangs together. And there are guaranteeable like statistical promises that classical machine learning systems to include traditional deep learning can make about what is the set of outputs and like what is the characteristic distribution of those outputs that LLMs cannot afford. And so like one of the things that we do is we, as a philosophy, try to choose the right tool for the job. And so sometimes that is a de novo model that has nothing to do with LLMs that does one thing exceptionally well. And whether that's retrieval or critique or multiclass classification, I think having many, many different tools in your toolbox is always valuable.

Alessio [00:38:20]: This is great. So there's kind of the missing piece that maybe people are wondering about. You do a financial services company and you didn't do anything in Excel. What's the story behind why you're doing partner in thought versus, hey, this is like a AI enabled model that understands any stock and all that?

Mike [00:38:37]: Yeah, and to be clear, Brightwave does a fair amount of quantitative reasoning. I think what is an explicit non-goal for the company is to create Excel spreadsheets. And I think when you look at products that work in that way, you can spend hours with an Excel spreadsheet and not notice a subtle bug. And that is a highly non-fault tolerant product experience where you encounter a misstatement in a financial model in terms of how a formula is composed and all of your assumptions are suddenly violated. And now it's effectively wasted effort. So as opposed to the partner in thought modality, which is yes and, like if the model says something that you don't agree with, you can say, take it under consideration. This is not interesting to me. I'm going to pivot to the next finding or claim. And it's more like a dialogue. The other piece of this is that the financial modeling is often very, when we talk to our users, it's very personal. So they have a specific view of how a company is structured. They have the one key driver of asset performance that they think is really, really important. It's kind of like the difference between writing an essay and having an essay, I guess. Like the purpose of homework is to actually develop what do I think about this? And so it's not clear to me that like push a button, have a financial model is solving the actual problem that the financial model affords. That said, we take great efforts to have exceptionally high quality quantitative reasoning. So we think about, and I won't get into too many specifics about this, but we deal with a fair number of documents that have tabular data that is really important to making informed decisions. 
And so the way that our RAG systems operate over and retrieve from tabular data sources is something that we place a great degree of emphasis on. It's just, I think the medium of Excel spreadsheets is not the right play for this class of technologies as they exist in 2024.

Alessio [00:40:40]: Yeah, what about 2034?

Mike [00:40:42]: 2034?

Alessio [00:40:43]: Are people still going to be making Excel models or like, yeah, I think to me, the most interesting thing is like, how are the models abstracting people away from some of these more syntax driven things and making them focus on what matters to them?

Mike [00:40:58]: Yeah, I wouldn't be able to tell you what the future, 10 years from now it looks like. I think anybody who could convince you of that is not necessarily somebody to be trusted. I do think that, so let's draw the parallel to accountants in the 70s. So VisiCalc, I believe came out in 1979. And historically the core, you know, you would have as an accountant, as a finance professional in the 70s, like I'm the one who runs the, I run the numbers. I do the arithmetic and that's like my main job. And we think that, I mean, you just look now that's not a job anybody wants. And the sophistication of the analysis that a person is able to perform as a function of having access to powerful tools like computational spreadsheets is just much greater. And so I think that with regards to language models, it is probably the case that there is a play in the workflow where it is commenting on your analysis within that, you know, spreadsheet based context, or it is taking information from those models and sucking this into a system that does qualitative reasoning on top of that. But I think the, it is an open question as to whether the actual production of those models is still a human task. But I think the sophistication of the analysis that is available to us and the completeness of that analysis necessarily increases over time.

Alessio [00:42:24]: What about AI hedge funds? Obviously, I mean, we have quants today, right? But those are more kind of like momentum driven, kind of like signal driven and less about long thesis driven. Do you think that's a possibility?

Mike [00:42:35]: It's, this is an interesting question. I would put it back to you and say like, how different is that from what hedge funds do now? I think there is, the more that I have learned about how teams at hedge funds actually behave, and you look at like systematics desks or semi-systematic trading groups, man, it's a lot like a big machine learning team. And it's, I sort of think it's interesting, right? So like, if you look at video games and traditional like Bay Area tech, there's not a ton of like talent mobility between those two communities. You have people that work in video games and people that work in like SaaS software. And it's not that like cognitively they would not be able to work together. It's just like a different set of skill sets, a different set of relationships. And it's kind of like network clusters that don't interact. I think there's probably a similar phenomenon happening with regards to machine learning within the active asset allocation community. And so like, it's actually not clear to me that we don't have AI hedge funds now. The question of whether you have an AI that is operating a trading desk, that seems a little, maybe, like I don't have line of sight to something like that existing yet.

Alessio [00:43:48]: No, I mean, I'm always curious. I think about asset management in a few different ways, but venture capital is like extremely power law driven. It's really hard to do machine learning in power law businesses because, you know, the distribution of outcomes is like so small versus public equities. Most high-frequency trading is like very, you know, bell curve, normal distribution. It's like, even if you just get 50.5% at the right scale, you're gonna make a lot of money. And I think AI starts there, right? And today, most high-frequency trading is already AI driven. You know, Renaissance started a long time ago using these models. But I'm curious how it's gonna move closer and closer to like power law businesses, right? I would say some boutique hedge funds, their pitch is like, hey, we're differentiated because we only do kind of like these long-only strategies that are like thesis driven versus, you know, movement driven. And most venture capitalists will tell you, well, our fund is different because we have this unique thesis on this market. And I think like five years ago, I read this blog post about why machine learning would never work in venture because the things that you're investing in today, there's just like no precedent that should tell you this will work. You know, most new companies, a model will tell you this is not gonna work, you know, versus the closer you get to the public companies, the more any innovation is like, okay, this is kind of like this thing that happened. And I feel like these models are quite good at generalizing and thinking, again, going back to the partner in thought, like thinking about second order.

Mike [00:45:13]: Yeah, and that's maybe where concrete example, I think it certainly is the case that we tell retrospective, to your point about venture, we tell retrospective stories where it's like, well, here was the set of observable facts. This was knowable at the time, and these people made the right call and were able to cross correlate all of these different sources and said, this is the bet we're gonna make. I think that process of idea generation is absolutely automatable. And the question of like, do you ever get somebody who just sets the system running and it's making all of its own decisions like that, and it is truly like doing thematic investing or more of the like what a human analyst would be kind of on the hook for, as opposed to like HFT. But the ability of models to say, here is a fact pattern that is noteworthy, and we should pay more attention here. Because if you think about the matrix of like all possible relationships in the economy, it grows with the square of the number of facts you're evaluating, like polynomial with the number of facts you're evaluating. And so if I want to make bets on AI, I think it's like, what are ways to profit from the rise of AI? It is very straightforward to take a model and say, parse through all of these documents and find second order derivative bets and say, oh, it turns out that energy is like very, very adjacent to investments in AI and may not be priced in the same way that GPUs are. And a derivative of energy, for example, is long duration energy storage. And so you need a bridge between renewables, which have fluctuating demands, and the compute requirements of these data centers. And I think, and I'm telling this story as like, having witnessed Brightwave do this work, you can take a premise and say like, what are second and third order bets that we can make on this topic? And it's going to come back with, here's a set of reasonable theses. 
And then I think a human's role in that world is to assess like, does this make sense given our fund strategy? Is this coherent with the calls that I've had with the management teams? There's this broad body of knowledge where I think humans sort of are the ultimate like, synthesizers and deciders. And like, maybe I'm wrong. Maybe the world of the future looks like an AI that truly does everything. I think it is kind of a singularity vector where it's like really hard to reason about like, what that world looks like. And like, you asked me to speculate, but I'm actually kind of hesitant to do so because it's just the forecast, the hurricane path just diverges far too much to have a real conviction about what that looks like.
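
As a worked example of the "grows with the square" remark: if every pair of facts is a candidate relationship, the count is n(n-1)/2. The snippet below is simple arithmetic under that pairwise assumption, with a hypothetical function name; it is not a description of how any product enumerates relationships.

```python
import math


def candidate_relationships(num_facts: int) -> int:
    """Number of unordered pairs among num_facts items: n * (n - 1) / 2."""
    return math.comb(num_facts, 2)


# Ten times more facts gives roughly a hundred times more pairs to consider.
for n in (10, 100, 1000):
    print(n, candidate_relationships(n))
```

That growth is why a model that can cheaply flag which pairs are noteworthy is valuable: no analyst can read across all of them.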

Alessio [00:47:58]: Awesome, I know we've already taken up a lot of your time, but maybe one thing to touch on before wrapping is open source LLMs. Obviously you were at the forefront of it. We recorded our episode the day that Red Pajama was open sourced and we were like, oh man, this is mind blowing. This is going to be crazy. And now we're going to have an open source dense transformer model that is 400 billion parameters. I don't know if one year ago you could have told me that that was going to happen. So what do you think matters in open source? What do you think people should work on? What are like things that people should keep in mind to evaluate? Okay, is this model actually going to be good? Or is it just like cheating some benchmarks to look good? It's like, is there anything there? Like, yeah, this is the part of the podcast where people already dropped off if they wanted to. So they want to hear the hot takes right now.

Mike [00:48:46]: I mean, I do think that that's another reason to have your own private evaluation corpuses, so that you can objectively and out of sample measure the performance of these models. And again, sometimes that just looks like giving everybody on the team 250 annotations and saying, we're just going to grind through this. And you have to tell, does this meet? The other thing about doing the work yourself is that you get to articulate your loss function precisely. What do I actually want the system to behave like? Do I prefer this system or this model or this other model? Yeah, and I think the work around overfitting on the test set, I think that 100% is happening. One notable thing, in contrast to a year ago, say, is that the economic incentives for companies to train their own foundation models, I think are diminishing. So the window in which you are the dominant pre-train, and let's say that you spend $5 to $40 million for like a, call it kind of a commodity-ish pre-train, not 400 billion would be another sort of-

Alessio [00:49:50]: It costs more than 40 million. Another leap.

Mike [00:49:52]: But the kind of thing that, like a small multi-billion dollar mom and pop shop might be able to pull off. The benefit that you get from that is like, I think, diminishing over time. And so I think fewer companies are going to make that capital outlay. And I think that there's probably some material negatives to that. But the other piece is that we're seeing that, at least in the past two and a half, three months, there's a convergence towards like, well, these models all behave fairly similarly. And it's probably that the training data on which they are pre-trained is substantially overlapping. And so it's generalizing a model that generalizes to that training data. And so it's unclear to me that you have this sort of balkanization where there are many different models, each of which is good in its own unique way, versus something like Llama becomes like, listen, this is a fine standard to build off of. We'll see, it's just like the upfront cost is so high. And I think for the people that have the money, the benefit of doing the pre-train is now less. Where I think it gets really interesting is how do you differentiate these and all of these different behavioral regimes? And I think the cost of producing instruction tuning and fine tuning data that creates specific kinds of behaviors, I think that's probably where the next generation of really interesting work starts to happen. If you see that the same model architecture trained on much more training data can exhibit substantially improved performance, it's the value of modeling innovations. For fundamental machine learning and AI research, there is still so much to be done. But I think that much lower hanging fruit, I guess, is developing new kinds of training data corpuses that elicit new behaviors from these models in a specific way. 
And so that's where, when I think about the availability to like a year ago, you had to have access to fairly high performance GPUs that were hard to get in order to get the experience of multiple reps fine tuning these models. And what you're doing when you take a corpus and then fine tune the model and then see across many inference passes, what is the qualitative character of the output, you're developing your own internal mental model of how does the composition of the training corpus shape the behavior of the model in a qualitative way. A year ago, it was very expensive to get that experience. And now you can just recompose multiple different training corpuses and see like, well, what do I do if I insert this set of demonstrations or I ablate that set of demonstrations? And that I think is a very, very valuable skill and one of the ways that you can have models and products that other people don't have access to. And so I think as more people, as those sensibilities proliferate because more people have that experience, you're gonna see teams that release data corpuses that just imbue the models with new behaviors that are especially interesting and useful. And I think that may be where some of the next sets of kind of innovation differentiation come from.
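
The "insert this set of demonstrations or ablate that set" loop amounts to recomposing a fine-tuning corpus from named subsets and comparing the resulting models. A minimal sketch of just the composition step follows; the subset names and record format are hypothetical, and the fine-tuning and qualitative review steps are deliberately left out.

```python
def compose_corpus(
    demonstration_sets: dict[str, list[dict]],
    include: list[str],
    ablate: frozenset[str] = frozenset(),
) -> list[dict]:
    """Concatenate the named demonstration sets, skipping any ablated ones."""
    corpus: list[dict] = []
    for name in include:
        if name in ablate:
            continue
        corpus.extend(demonstration_sets[name])
    return corpus


# Hypothetical demonstration sets for illustration only.
sets = {
    "summaries": [{"prompt": "Summarize Q1.", "response": "..."}],
    "critiques": [{"prompt": "Critique this thesis.", "response": "..."}],
}
baseline = compose_corpus(sets, ["summaries", "critiques"])
without_critiques = compose_corpus(
    sets, ["summaries", "critiques"], ablate=frozenset({"critiques"})
)
```

Fine-tuning once on each variant and reading outputs side by side is what builds the intuition Mike describes for how corpus composition shapes behavior.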

Alessio [00:53:03]: Yeah, yeah, when people ask me, I always tell them the half-life of a model, it's much shorter than a half-life of a dataset.

Mike [00:53:08]: Yes, absolutely.

Alessio [00:53:09]: I mean, The Pile is still around and like core to most of these training runs versus all the models people trained a year ago. It's like, they're at the bottom of the LMSYS leaderboard.

Mike [00:53:20]: It's kind of crazy, like I don't, just the parallels to other kinds of computing technology where like the work involved in producing the artifact is so significant and the like shelf life is like a week. You know, I'm sure there's a precedent, but it is remarkable.

Alessio [00:53:37]: Yeah, I remember when Dolly was the best open source model.

Mike [00:53:42]: Dolly was never the best open source model, but it demonstrated something that was not obvious to many people at the time. Yeah, but we always were clear that it was never state-of-the-art.

Alessio [00:53:53]: State-of-the-art or whatever that means, right? This is great, Mike. Anything that we forgot to cover that you want to add? Any call, I know you're, you know, thinking about growing the team.

Mike [00:54:03]: We are hiring across the board, AI engineering, classical machine learning, systems engineering and distributed systems, front-end engineering, design. We have many open roles on the team. We hire exceptional people. We fit the job to the person as a philosophy and would love to work with more incredible humans.

Alessio [00:54:25]: Awesome. Thank you so much for coming on, Mike.

Mike [00:54:26]: Thanks, Alessio.