The Hyperstitions of Moloch

It is ever easier to turn wishes into reality, so we should be careful what we wish for...

The basic insight of reflexivity is that, contra the Platonic Representation Hypothesis where incorrect beliefs converge on correct reality, there exist some situations, maybe even the majority, where unreal beliefs alter reality to retroactively make themselves real. Not figuratively in the “everything is a social construct” sense, but literally: beliefs create national borders, churches, economies, art, wars and so on, because human beliefs drive human actions in the physical, real world. Real Steel becomes Underground Robot Fight Club just because we all think it’s rad.

The medium is not just the message, but the meme drives the manifestation.

In AI social circles, the preferred term for this has come to be the Landian word hyperstition: “ideas that, by their very existence as ideas, bring about their own reality”. And of course one of the most important lenses through which to view generative AI and AI agents as a whole is that they massively lower the barrier to going from thought to reality, whether it is paper reviews, news summaries, or entire working apps.

In 2025, one of the most important generative AI trends is “finally good AI video”, like Veo 3.

Today’s guests, Justine and Olivia Moore, have become experts and documentarians of the generative media trends in popular culture (not just video), and we’re excited to release our conversation with them, which you can watch here!

Brainrot IS a thing people want

The topic of Brainrot is an uncomfortable one to confront. The self-styled “high taste testers” of the world would never deign to consume “AI slop”… but there are many, many billions of humans in the lower swells of the bell curve who do.

When you really think about it, the “plotlines” and “characters” are no more “slop” than your average Teletubbies or every generation’s blue dog. But it was still a surprise to learn that these video gen characters have become real toys:

This is enormously powerful in the small sense: IP that you can programmatically create from scratch, A/B test, and then double down on once the RNGhost in the machine blesses it into a popular brand is worth billions in the consumer market.

Of course, unfettered capitalism doesn’t everywhere and always lead to desirable outcomes. There are many times when you or your kids should NOT be given what you want at every thought and whim. While we acknowledge that issue, we want to shed some light on the brighter side that we feel often gets overlooked in the standard essay’s penchant for hating on AI and capitalism.

Hyperstition-led Growth

Justine made her own exploration of this with Melt, her own froyo brand. None of this exists in reality:

But you could obviously build a large social following just by sharing images like these and A/B testing the most viral ones across 50 different accounts, none of which exist, and then, crucially, follow through and actually start the brand with a built-in fandom from day 1.
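The “A/B test, then double down” step can be framed as ranking variants by engagement rate. A minimal sketch, assuming each mocked-up product post is tracked as impressions and engagements (hypothetical field names and flavor labels, not anything from Melt). Ranking by the lower bound of the Wilson score interval rather than the raw rate is our own choice, not a method described in the post: it keeps a post that got lucky on a handful of views from outranking one with a large, steady sample.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    name: str          # hypothetical label for one mocked-up product image
    impressions: int   # times the post was shown
    engagements: int   # likes + shares + comments (assumed engagement metric)


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for a binomial rate (95% by default)."""
    if trials == 0:
        return 0.0
    p = successes / trials
    denom = 1 + z * z / trials
    centre = p + z * z / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
    return (centre - margin) / denom


def pick_winners(variants: list[Variant], k: int = 3) -> list[str]:
    """Rank variants by a conservative engagement rate and keep the top k."""
    ranked = sorted(
        variants,
        key=lambda v: wilson_lower_bound(v.engagements, v.impressions),
        reverse=True,
    )
    return [v.name for v in ranked[:k]]


# Hypothetical numbers for illustration only.
variants = [
    Variant("matcha swirl", impressions=10_000, engagements=900),  # 9% on a big sample
    Variant("lava cake", impressions=40, engagements=6),           # 15% on a tiny sample
    Variant("plain vanilla", impressions=5_000, engagements=150),  # 3%
]
print(pick_winners(variants, k=2))  # ['matcha swirl', 'lava cake']
```

The tiny-sample “lava cake” post has the best raw rate but ranks second here; in practice you would keep posting it until its interval narrows before doubling down.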

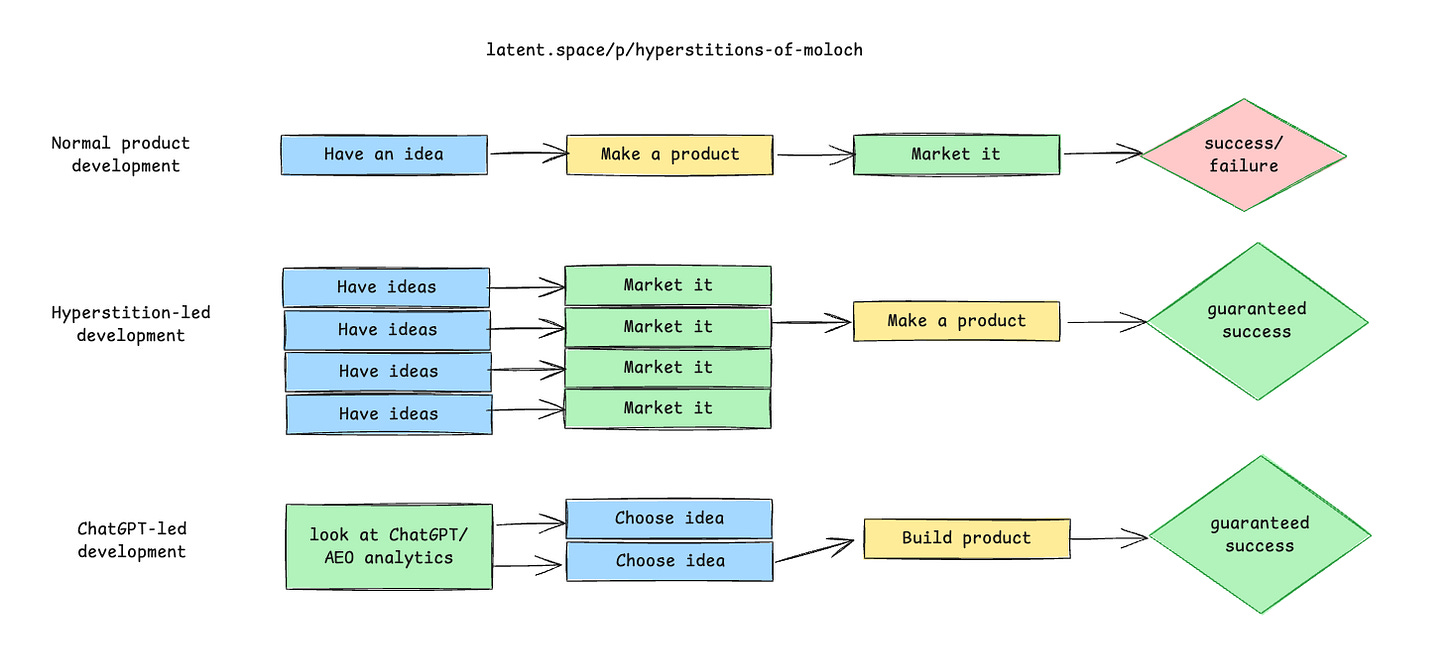

This kind of “selling things that don’t exist yet” technique has a long tradition dating back to the zero-incremental-margin internet of the 4-Hour Work Week, and it has led to various forms of Dead Internet Theory (see also: Dead Planet Theory). But there is a bright side to that admittedly very serious problem that pessimists often overlook. This is actually an enormously efficient process for discovering what people want to buy, which cuts down a lot of the wasted guesswork that is both costly and terrible for the environment.

You don’t even need humans to drive the idea these days, because you could just have ChatGPT tell you what it thinks you should have, and then you go build it because it makes sense.

Show notes

Italian Brainrot made into Toys

AI IP - Disney, Harry Potter

AI Ads

AI creator stack

Prompt Theory

Misc

Karpathy on Veo 3 https://x.com/karpathy/status/1929634696474120576

Veo 3 opera https://x.com/jerrod_lew/status/1924934440486371589

Veo 3 Chinese mumble rap https://x.com/1owroller/status/1925004130730606810

Veo 3 rapping https://x.com/YugeTen/status/1924947152242974877

Veo 3 singer https://x.com/nearcyan/status/1924966995910631899

AI furniture https://x.com/venturetwins/status/1686443924385308672

Kangaroo model https://x.com/venturetwins/status/1934236631336403344?s=46

Transcript

Alessio [00:00:04]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel, and I'm joined by my co-host Swyx, founder of Smol AI.

swyx [00:00:12]: Hello, hello. We have a very special double guest episode with Justine and Olivia Moore. Welcome.

Olivia [00:00:17]: Hi, thanks for having us. We're excited to be here.

swyx [00:00:20]: I think you're the first twins on the pod.

Olivia [00:00:23]: We're honored. We love that.

swyx [00:00:24]: Olivia, are you wearing glasses so it's easier to differentiate? Is that like a thing?

Olivia [00:00:28]: No, even though we're identical twins, we have opposite vision problems. So I actually need glasses and Justine doesn't, but it would be nice. Yeah, sometimes we think we should do name tags on our foreheads or something, but I think the glasses work just as well.

swyx [00:00:42]: So both of you are partners at Andreessen Horowitz, but I think we're actually talking to you in the capacity of being very involved in generative media. We don't cover it enough, and I can see a change. Latent Space itself was started because of Stable Diffusion. And then there were improvements in image generators for a while. You could see, like, Recraft is better than blah, and then Black Forest Labs and so on. But they're all just image generators. There was also music gen for a while, but video gen, I think, is the current thing, and we really wanted to do an episode on that. The last piece I will preview is that we really wanted to start using it for Latent Space itself, so we could use some help, some overview of what people are doing. We can sort of take it from there. I have some of your tweets pulled up, but I don't know how you want to start.

Justine [00:01:37]: It's really funny. I actually got into generative media, too, with Stable Diffusion, I think around September 2022, and it's grown so quickly since then. Olivia and I talk about this all the time, because it used to be that our friends in creative fields would look at AI image and video generation and be like, I'm not worried about that, or I can't use it in my job, this is kind of just a silly side thing. Then, starting earlier this year, we started getting a few of them being like, maybe I should learn a little bit more about how these work and how to use them. And now we'll literally have people coming over to our house on the weekend so we can give tutorials and walkthroughs of, here are the tools, here's how you use them, that sort of thing.

Olivia [00:02:24]: I would say we've seen the same thing happen. I mean, if you've been on TikTok or Reels or YouTube Shorts in the past week, probably 90 percent of your feed is AI-generated video. Even two months ago, it was that little orange cat animation that was super popular, and then it was the Italian brain rot characters. But it was just a few people making AI video. And now there are, I would guess, hundreds of thousands of people making and publishing AI video, which is just awesome.

Alessio [00:02:51]: Sorry, I just want to clarify. Were the Italian brain rot characters made by Italians, or by people of Italian descent? Or is it just the names? I haven't quite figured that out. I haven't been able to trace it back to the origins of the Italian brain rot.

Justine [00:03:04]: It's complicated because it's a decentralized meme that people are taking and remixing. I actually looked into this question as well. And I think the answer is no, it did not come from Italians. But someone early on thought the name sounded vaguely Italian. And so they called it Italian brain rot.

Alessio [00:03:23]: We're innocent. That's all I wanted to know. I guess we're not.

swyx [00:03:26]: It's not our fault. So I didn't even know about the Italian brain rot, but I wanted to kind of show and tell a little bit as well so that our viewers can watch along. So this is it, apparently.

Olivia [00:03:37]: Exactly. So this is essentially a universe of characters that started in a decentralized way: one person made a few characters, then a couple of other people on TikTok added their own characters to the universe, and the best ones became kind of canon. It's probably important to clarify that they started as images, and then people started animating them into video. Yes. And then some people, as you can see in this music video, did compilations of all the characters together, or videos where the characters were interacting with each other, and whole storylines were forming. So it became this whole giant entertainment thing. I saw last night, actually, that the IP has evolved to the point where people are now selling toy sets and T-shirts and plushies. And the crazy thing about this first video to me is that it's a real kid who knows these characters by heart and is treating them like they're Nickelodeon characters.

swyx [00:04:29]: Why not?

Olivia [00:04:30]: It's absolutely wild. But I get it, because he's able to watch dozens, if not, you know, hundreds of videos of these characters every day on TikTok, whereas Nickelodeon might put out one episode of your show every week. So you get attached fast, I think.

swyx [00:04:44]: So this is I mean, this is actually for kids. It's not for adults. I don't know.

Justine [00:04:48]: Adults love it, though. They're making musicals. They're making movies. They're making, I would say, more adult-oriented storylines, like the characters cheating on each other. So I would say it's for both.

swyx [00:05:00]: It can be too fun to scroll through brain rot characters. I was wondering, how do you keep track? What is your process? How do you organize the universe, apart from just mindlessly scrolling? You know, I think that's the hard part about covering something like this.

Justine [00:05:15]: Keeping track of trends or models or both.

swyx [00:05:18]: Trends first. I think models will come and go. The current thing is Veo 3, but there will be a next thing, you know?

Justine [00:05:25]: I think first of all, you have to know where the trends are originating at that point in time. Initially with AI video, it was actually Reddit. In the early days of AI video, you had a ton of people making and posting stuff in those forums.

swyx [00:05:40]: Which ones?

Justine [00:05:42]: AI Video, ChatGPT, Singularity, basically all of the AI-oriented ones. The AI Video one was the one for a while. Then people like me would take the best content from there and bring it to Twitter, and the very best would eventually make its way to TikTok or Instagram, but that happened pretty rarely. Now, especially after Veo 3 and MiniMax 2 and the animals diving and that sort of thing, we've actually seen a flip where most of the viral content is originating on the true everyday consumer platforms, which I would say are TikTok and Instagram. That's because everyday people who are not technical can now make quality, interesting content, and they can remix content that other people have created. Characters like the Italian brain rot characters are a million times bigger on Instagram and TikTok than they are on X. So nowadays I'd say we spend more time on those other platforms, watching what starts getting momentum in the community. What are other people remixing and iterating on? What are people making accounts for, like the vlogs? And then eventually, what starts making its way to X and Reddit?

Alessio [00:07:01]: Do you have a kind of contaminated account that you use for the max brain rot algorithm, and then once you log off, you close it and lock it in a safe?

Justine [00:07:12]: No, I like to live in the brain rot all the time, so I purposely do not have a separate account.

Olivia [00:07:17]: I actually do. I even made my own account. I can share my screen. Yeah. Olivia's become like a TikTok creator for these videos. So once Veo 3 came out... Yeah. And now I've maxed out all of my credits, so I need to use Justine's special account. But I was like, OK, I'm curious, how low-hanging is the fruit here? How easy is it to actually make money on this?

swyx [00:07:39]: Oh, you made your own. Yeah.

Olivia [00:07:40]: I made my own. And then I was experimenting with all the prompts and trying the videos myself. OK, let's see. You can see I'm exposing myself here, but I started when the fruit-slicing ASMR videos emerged, and I was like, OK, let me get creative and try my own formats, like opening dragon eggs or cracking eggs or something. It didn't really work. So I did the fruit trend myself, and you can see that did the best. I think the trend that always works, in any format, is lava. People love eating lava, squishing lava, peeling the crust off lava. You can see in the comments people are just completely obsessed with it. And then I evolved into... The interesting thing about Veo 3 is there are no IP restrictions, at least on cartoon characters. There are no rules. Yeah, on real people there are; on cartoon characters there are not. So you can make Stitch from Lilo and Stitch doing his own ASMR videos, which was super fun. And then I honestly just kind of followed the trends of what was popular; gold bar squishing became a thing for a while. I would say the thing I'm most ashamed of that I eventually ended up falling for is the Tide Pod consumption. This is a very popular genre of videos. And as you can see, people are admitting that it's their lifelong dream to be able to eat a Tide Pod, and the AI characters can do it completely safely. So these were super fun to make, and I would cross-publish them on YouTube and Instagram, and it was fascinating to see what worked differently on which platforms.

Alessio [00:09:14]: Were all of these text-to-video directly? Do you ever do frame-to-video to maybe steer it a little more?

Olivia [00:09:22]: Yeah, almost all of them were Veo 3, just directly text-to-video. I did a couple on the MiniMax model, especially the new trend of animals diving off the diving board at the Olympics, which went mega viral. I did that with Star Wars characters, and I wanted to get the sound effects super dialed. So I actually did that with the MiniMax model and then used the new ElevenLabs sound effects model to hand-generate the sound effects, which took a very long time. I had to generate them and then line them up at the right spot in the video, but it was very satisfying when I was done.

Justine [00:09:57]: Because the thing to know about Veo 3, which I think a lot of people don't know until they use it, is that you actually can't do image-to-video with audio. Google hasn't released that yet, I would assume for trust and safety reasons. In the interface, you can start with a frame, but once you do that, if you're on the Veo 3 text-to-video model, it will switch you back to Veo 2 and tell you, hey, we're switching you to a model that's compatible with starting from an image. That makes character consistency super hard, right? You're not able to start with an image of the same character over and over again, which is part of why you're seeing so many of these viral trends use things like a Stormtrooper or Jesus, where there's a known identity that the model already understands and can recreate without a starting frame.

Olivia [00:10:46]: A lot of the Yeti and Bigfoot videos have gone super viral, like they have their own channels where they're making videos. They're making daily vlogs and they're getting like millions of followers and hundreds of thousands of likes. And they're genuinely really entertaining and you start to feel an affinity with the characters, which is super fun.

swyx [00:11:02]: This is the one that somebody posted about. I've actually, I'm subscribed, I'm watching it.

Justine [00:11:09]: They're so good. And they're like real storylines, especially I think there's a lot of people who are like not happy with the way the official IP has been going. And so they prefer to watch. They're like, I can control the story myself now.

swyx [00:11:22]: And also, obviously it's nice that you don't see their mouths, so they can speak without lip sync being a problem. Yeah. So how come you haven't done a vlog? You're just focused on ASMR?

Olivia [00:11:33]: Exactly. Those are easier to generate, for sure. I've been shocked by how smart Veo 3 is. Justine, you probably know more about this, but from a pretty under-optimized prompt for something like an ASMR video (I have to imagine it's at least somewhat trained on YouTube data), for any genre of video that already exists on YouTube, it does a really good job of taking a one-line prompt and turning it into something you would see a professional creator publish. But the narrative, character-driven vlogs are a little bit more complicated and outside of my skill set right now.

Justine [00:12:09]: I would say that I generate dozens of Veo 3 videos every day and just don't post most of them on TikTok or Instagram. So I've definitely experimented with the vlog format, and it's pretty easy to do.

Alessio [00:12:22]: How much of a part do you think character familiarity plays in it? You know, maybe the Stormtrooper content is actually not that good, but there are so many Star Wars fans, including me; you can see I have a Vader helmet right there. Do you think there's an initial slope of, hey, let's remix all known IPs in this new format and get a lot of attention, and then you see that peter out? We're not consumer experts, so I'm curious to hear your thoughts.

Justine [00:12:48]: It's such a good question. A lot of our portfolio companies ask us this, because obviously they're all trying to figure out how to make their content stand out, or how to help people make more interesting and viral stuff using their platforms. So we've thought about this, studied it, and A/B tested different video formats. I think there are a couple of things that benefit new formats like AI video in general. One is having some element of familiarity, but with an interesting twist on it. I think it hits multiple of the good sectors of people's brains when you're like, oh, it's a Stormtrooper, I know this, I'm going to keep watching because I'm already bought into the storyline of Star Wars and I want to see what happens next. But then you get this other weird happiness in your brain when the Stormtrooper does something in the AI vlog they would never do in the real cinematic universe. I mean, like 2% of the hardcore Star Wars fans will leave an angry comment like, this isn't realistic, but a ton of them are like, oh, this is super cool, I always wondered about this and now it can happen. One of the benefits of starting with established IP is that you're already tapping into a known association in people's brains that makes them stop scrolling and be interested. The second thing we've seen work, though, honestly, is just super weird stuff. That's why the Italian brain rot characters work: they're not based on any existing IP, right? They're completely new, but they're strange and interesting.

Olivia [00:14:21]: Interesting enough that you keep watching just to see, what the heck is this? Am I hallucinating? Are they speaking a different language, or is this English and I just don't understand it? So I think over time, we'll see more AI-native IP like that. One of my favorite examples, and I'll screen share again, is Kim the gorilla, which is another TikTok character. This is a new character. I actually don't even know who makes it, but it's a gorilla in a zoo named Kim who has a... And the storyline over time is her constant conflict with a zookeeper named Becky, who she's fighting with and trying to escape from. You can see all of her videos get hundreds of thousands of likes. She already has like 300,000 followers in a really short period of time. Yeah, that's Becky, who she's fighting with in most of these storylines. She already has her own website and her own "Free Kim" merch, since she's trying to escape from the zoo. So it's the kind of thing where, who knows if this person would have been able to make this content before? I'm guessing they're not a professional filmmaker, but...

Justine [00:15:27]: Or had access to a gorilla. Like who? Yeah.

Olivia [00:15:30]: And a great gorilla actress as well. Yeah. Not just a gorilla, but a gorilla who can act on camera. It's also not like anyone with Veo 3 is going to come up with a really fun, great narrative idea like this and keep it going over time. So in my mind, it's still a mix: we need great creatives, but now they just have a different tool set.

swyx [00:15:49]: Yeah, they're just going to be a lot more creative. I also appreciate that we're more face-blind to gorillas, so that solves the consistency problem. And then you add the pink bow that makes her more recognizable, even if it's misplaced. It's so smart. I just feel dumb when I look at these people and how they solve AI problems, you know.

Justine [00:16:07]: I think the really interesting thing too is people remix each other's work. Like someone does a Yeti, and then other people realize, oh, I can get around the eight-second limit... People are literally just remixing other people's work like this. And then the universe evolves from that.

swyx [00:16:52]: For people who are kind of new to this whole field, it is actually very valuable to have your own IP that you entirely control. In fact, I actually tried to get an interview with Lil Miquela.

Justine [00:17:05]: Ah, yes.

swyx [00:17:06]: This can be taken cynically or whatever. But the cynical version is you have an influencer that will always obey you, right? Yeah. They'll never speak out about political stuff. It will just do what you ask it to do and behave. And, you know, that's rough for the AI model, but hopefully they're not sentient yet. In the meantime, you have a property you completely control, and you can pose them and put them in all sorts of situations.

Justine [00:17:31]: That part is so interesting. I have a very hot and controversial take on this, which some people hate: before, honestly, most Instagram or YouTube influencers were hot people. Now anyone can be a popular influencer, and you don't have to be a hot person. How many friends do you have who are really funny, have great personalities, and are super creative, but don't fit the exact beauty standard of what an Instagram influencer is? Now anyone can create an AI character who meets the beauty standard, and then they can be the brain behind the content.

Olivia [00:18:07]: We already saw this with whole startups or stacks of products. Justine looked at a bunch of these, where it was basically: make money by building your own Instagram influencer off of AI images. And a lot of people were making, yeah, tens of thousands of dollars through that. They would sometimes even have a subscription you could sign up for to get extra content from them, which would make a ton more money than they could make on Instagram ads or something like that. But I feel like with AI video, it's just going to explode 10x.

Alessio [00:18:36]: How do you see the monetization landscape? There's the "I use AI to make a fake person that then sells some product"...

Justine [00:19:06]: ...to your videos. Another way is, yeah, we've seen people use them for ads or for driving traffic to an actual business. We've also seen people who are really skilled prompters, people like Nick St. Pierre, who sell online courses or do a lot of consulting. A lot of the best AI creators actually do a lot of consulting work behind the scenes, whether it's with companies or with brands, and the viral content they create generates leads for them. Then they either sell a course or they sell their consulting services. What I'm waiting for is: what is the first AI-native IP or AI video that gets packaged and bought by a Netflix or a Hulu? And from there, how do you even determine who gets paid in that scenario, when what's being bought is the brainchild of a thousand different creators? But given the popularity of Italian brain rot on Instagram and TikTok, you can totally imagine someone like Netflix wanting to buy and reuse or license those characters for their own content.

Olivia [00:20:26]: My learning from making a bunch of these videos and trying to post them on social feeds is that it's actually still very expensive to produce, just because Veo 3 is so expensive, especially if you're making more complex content than someone slicing a glass fruit. Even for that, I would have to do, you know, eight generations before one came out cutting the fruit the right way, so that I could post it and people would be happy. If you're doing something more complex and you're using something like Veo 3, it's really expensive to run these, because you get a certain number of generations on the core plan, but then you have to pay for extra credits. Exactly. This is the fruit example. Lots of times when I tried to generate it, it would cut the fruit horizontally instead of vertically, or the layers would look weird once you cut through them. So you would end up having to do a bunch of generations to get one that works. My learning is that generation is still expensive enough that you have to be smart about how you're going to make money from it. Otherwise, I think a lot of people are going to find that the ROI isn't really worth it.
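The “eight generations per usable clip” experience can be quantified. A minimal sketch, assuming each generation independently succeeds with the same probability p (a simplification; real failures are correlated with the prompt), which makes the number of tries geometric with mean 1/p. The $1.50 per generation is a hypothetical placeholder, not Veo 3's actual price.

```python
def expected_attempts(success_rate: float) -> float:
    """Mean tries until the first usable clip, assuming independent attempts
    that each succeed with probability `success_rate` (geometric distribution)."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return 1 / success_rate


def cost_per_usable_clip(cost_per_generation: float, success_rate: float) -> float:
    """Expected spend to get one postable clip."""
    return cost_per_generation * expected_attempts(success_rate)


def chance_within_budget(success_rate: float, max_generations: int) -> float:
    """Probability that at least one of `max_generations` tries is usable."""
    return 1 - (1 - success_rate) ** max_generations


# Hypothetical inputs: about 1 usable clip in 8 tries (as in the fruit example)
# and an assumed $1.50 per generation; real pricing varies by plan and provider.
p_usable = 1 / 8
print(expected_attempts(p_usable))                 # 8.0
print(cost_per_usable_clip(1.50, p_usable))        # 12.0
print(round(chance_within_budget(p_usable, 8), 3)) # 0.656
```

One consequence of the geometric model: even when the average is eight tries, a budget of exactly eight generations yields a usable clip only about two-thirds of the time, so a credit budget needs headroom.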

Alessio [00:21:31]: And roughly, the payouts on the social media platforms are like 20 bucks per million views, something in that range? That sounds high.

Olivia [00:21:39]: Well, it depends on the platform, but you have to go viral enough, or get enough views, to even qualify for a creator program where you can then make money. With the videos I posted on TikTok, I'm not part of the creator program, so I'm not making money from those. So first you have to have one or two videos that ideally go hyper viral and qualify you. After that, you have to get enough views on each incremental video that you're actually making money from them. So I would say it's not like cash sitting on the ground on these platforms quite yet.

swyx [00:22:13]: I was going to correct myself. $20 per million is actually low. Oh, maybe that's the TikTok level. I was thinking $20 per mille, which is per thousand, but yeah.
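The correction above is the difference between a rate quoted per million views and one quoted per thousand (“per mille”, the unit behind RPM and CPM figures): a factor of 1,000. A minimal arithmetic sketch; the $20 rate comes from the conversation, while the $12 production cost is a hypothetical figure, not actual platform or Veo 3 pricing.

```python
import math


def payout(views: int, rate_usd: float, per_views: int) -> float:
    """Creator payout when `rate_usd` is paid for every `per_views` views."""
    return views * rate_usd / per_views


def break_even_views(cost_usd: float, rate_usd: float, per_views: int) -> int:
    """Views needed before payouts cover `cost_usd` of production spend."""
    return math.ceil(cost_usd * per_views / rate_usd)


PER_MILLION = 1_000_000
PER_MILLE = 1_000  # "per mille" = per thousand views

print(payout(1_000_000, 20.0, PER_MILLION))  # 20.0
print(payout(1_000_000, 20.0, PER_MILLE))    # 20000.0
# Hypothetical $12 spent on generations for one clip:
print(break_even_views(12.0, 20.0, PER_MILLION))  # 600000
print(break_even_views(12.0, 20.0, PER_MILLE))    # 600
```

At the per-million rate, a clip has to reach hundreds of thousands of views just to recoup a modest generation bill, which is the “not cash sitting on the ground” point.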

Justine [00:22:22]: I think it also depends on the engagement and stuff like that, too. But the other really interesting thing is that some people are motivated by, I make these viral videos and then I use them to sell something, whether it's my time or a course or whatever. But a lot of people just finally have the opportunity to grow a big account online and get the dopamine hit of tens of thousands of likes and thousands of followers on an account doing, like, AI gorilla vlogs, when they were never able to grow a big social media account before. I think that is motivating a bunch of people, too, which is fascinating. These people are all experiencing what it's like to be early adopters pioneering a new form of content that people are going crazy for. That feels really rewarding and fun.

swyx [00:23:11]: Yeah, I agree. I had a cheap joke I was going to throw in there, which is, you know, people who are not hot enough for Instagram or TikTok go to Twitter.

swyx [00:23:24]: I think this links very closely with the creator economy stuff. You know, people were very excited about the creator economy maybe five years ago, and then it kind of died-ish. I don't know if you would agree or vehemently object, but maybe this is the return of the creator economy. There's another way to slice the monetization stuff, just to put the VC hat on a little bit: there are the creators, who we talked about; then there are the creator enabler platforms, let's call it a Krea or a ComfyUI or whoever; and then there's the model layer itself. I think right now, especially with Veo directly offering a platform, it's going to be the model layer that gets all the money. And if it's an open model, then it goes to the model enablers, the model workflow platforms. Is that accurate? Would you change that mental model of how money flows?

Justine [00:24:16]: I would say somewhat accurate. I agree with the distinction. We call it the interface layer or the application layer: companies that aren't training their own foundation models but are making it really easy to use other people's models. And then there's the core model layer itself, like a Veo 3 or a MiniMax or a Kling, all those folks. Some of the model providers are better than others at building consumer interfaces. Kling, for example, which is one of the really good Chinese image-to-video models, has a pretty good interface for uploading an image, making it a video, and adding sound effects in their platform, that sort of thing. In my mind, Veo 3 is actually a counterexample, where it's super hard to figure out where to access Veo 3 within all of the Google products. You have to sign up for a separate product called Flow, which requires a subscription to one of two very expensive Google plans. And then you have to make sure you're logged into the correct Google account when you try to access that subscription, because we all have like three Gmail accounts. So honestly, what we see is a ton of creators just going to the model enablement layer, whether it's a consumer-facing interface like Krea or a more developer-facing interface like fal or Replicate, where you can generate videos on a one-off basis with a pay-as-you-go model, because it's easier than trying to navigate the behemoth that is the Google product infrastructure, and you don't have to commit to the $125-a-month Google plan to use the model.

Olivia [00:25:55]: Another example on Flow and Veo: Veo 2 is actually the default, and you have to navigate a bunch of little hidden buttons to change it to Veo 3. So there are YouTube videos with millions of hits that are just, how do I find Veo 3 within the Google subscription? And it doesn't work on mobile, which is crazy given that so many of these videos are being posted on mobile apps. Maybe Google will release a video generation mobile app, but I would guess that it's going to take a really long time, and the website isn't even usable on mobile. So they might fix some of these things. But even in the Veo 3 example, it is already available via API, and so a lot of the model enablement companies, I think, are making a bunch of money from it. And Google, I'm sure, is making a ton of money from it, too.

swyx [00:26:42]: Yeah, I was looking at one of your market maps, and it looks like these are just model companies. I don't see the enablement ones.

Justine [00:26:48]: Oh, the multimodal model apps are the enablement ones. Oh, yeah, right there.

swyx [00:26:53]: Hedra has its own models.

Justine [00:26:55]: They do. Yeah. So they're in the talking avatars category for the model layer. So their model is like a talking avatar model. And then they also host a bunch of the image and video models so that you can generate other stuff on their platform, too.

swyx [00:27:08]: Flora raised a big round recently. Visual Electric, I have heard of. These two I've heard less of. Yeah.

Justine [00:27:13]: And then, I mean, if you guys follow the stuff Adobe's doing: forever, Adobe was like, we're only going to have our own models, and they're the clean models that are only trained on licensed data. And then I think they realized that no one was using those models, either the image ones or the video ones. And so now Adobe Firefly hosts all of the other models.

swyx [00:27:34]: Which is crazy.

Justine [00:27:36]: Yeah.

swyx [00:27:37]: We did a State of AI Engineering survey. Oh, cool. And we asked people what models they were using, and Adobe did surprisingly well. Yeah. Because everyone who's paying attention thinks about them as, oh, they're clean, therefore boring. Yeah. But actually, they showed up, like, here.

Justine [00:27:57]: Interesting. Weird.

swyx [00:27:58]: Like, they're beating Ideogram and Recraft. Maybe because it's Adobe. I don't know.

Justine [00:28:02]: I think it depends, too. Because, okay, if I think about it, there are definitely things I use Adobe for. For example, I think their generative fill within Photoshop, or even within Firefly or Express, is really, really good for inpainting in images. So I would say I still use an Adobe model, but I use it for inpainting versus pure generation.

swyx [00:28:24]: I think there's another thing about the depth of workflows. How do you feel about something like ComfyUI, where it's literally the It's Always Sunny in Philadelphia meme, with crazy link maps? You need that to have complex workflows, but then also maybe, you know, the next model will destroy it.

Justine [00:28:43]: I love that meme. And I have so much respect for the ComfyUI community, because they have truly been in the trenches. Maintaining an open source project like that, where you have all of these interconnected nodes that depend on each other to run all of these mini apps that thousands, probably millions, of people around the world are using and not paying for but loudly complaining about, is doing the Lord's work. I have never been a deep ComfyUI user because, at heart, as you can probably tell from my feed, I actually try not to get too deep into the technical side of using the products and running the really complex local workflows, because I want to understand: is this too hard for an everyday person to do? I think ComfyUI is really great for people who want a ton of control, so for people with more professional use cases, that's awesome. For people who just want to make a meme or a fun video to share with their friends or their Instagram audience, you probably don't need ComfyUI. I would agree that more of the ComfyUI use cases are starting to be eaten away by the core foundation model companies. But especially with things like Veo 3 not offering image-to-video, those platforms are still lacking a level of control that you can get from a tool like ComfyUI. And I would say ComfyUI is still awesome for a lot of things, like video style transformation: people who want to turn a photorealistic video into an anime video or something like that. That is not something you can do on a platform like Veo 3 today.

swyx [00:30:23]: Yeah, style transformation, consistency, and upscaling or fixing hands. I don't know if that's a thing that people still do.

Justine [00:30:31]: Or character swapping. I don't know if you guys follow AI Warper on Twitter, but he does some really cool stuff with a lot of the open source tools and interfaces like ComfyUI. To me at least, a lot of his stuff is the forefront of what I could do if I were better at navigating these interfaces. But for the average person, there's just no way.

swyx [00:30:53]: So I guess part of this discussion we wanted to have was just to use us as a case study. I think you're relatively familiar with us. If we were to start a somewhat educational Latent Space channel or sub-brand that was entirely driven by these things, where do we start?

Justine [00:31:13]: Okay. Have you guys seen the Brain Rot Education videos? Yeah. I had a super... let me try to bring it up. Okay.

swyx [00:31:20]: I am very out of it.

Justine [00:31:21]: Everything is Brain Rot now.

swyx [00:31:23]: Well, I mean, like, this is the most uncool I've ever felt because, like, I clearly don't scroll TikTok and Instagram, but, like.

Justine [00:31:30]: You have to pay a really high price to be this informed about Brain Rot. I think this is an interesting place for you guys to start. Hear me out. I know this looks crazy. I found these channels on Instagram; I think this one is called Unlock Learning. They consistently get millions of views on these AI celebrity interviews teaching educational topics. This is embarrassing, you can see that I like my own tweets, but we're going to keep moving. You can see that they use a deepfake tool. I don't know if they use Yapper or something else to take an image of a celebrity and have them say the words of the script in their voice. Then they overlay it with some sort of diagram at the top that's actually showing what's going on. You could probably just generate a script. What I would do is take links to YouTube videos or transcripts of the podcast and put them in Gemini 2.5. I actually did this recently for someone else's videos. You could ask for an entertaining Brain Rot-style clip summarizing XYZ topic based on your content. Then play around with it and edit it a little bit until it's perfect. Then you have to decide: are you guys the Brain Rot characters? Is it Swyx? Or are you going to use a celebrity Brain Rot character to tap into the familiarity we discussed earlier? I think there are pros and cons. Maybe your audience would like seeing you guys better. If you want to go viral on Instagram and TikTok and reach new people, maybe Sydney Sweeney is a good face for it. I don't know. Just something to think about.

swyx [00:33:09]: We have an AI host. Actually, we've used an ElevenLabs voice called Charlie for a while. Okay.

Justine [00:33:15]: Incredible.

swyx [00:33:16]: And we could give it a try. We could give him a body, you know. Right now, he's just a voice.

Justine [00:33:20]: Okay. So you could animate him on Hedra then. You could take the audio clip and the image and make him talk. And then the really interesting part to me is what plays at the top, right? Where it was showing the diagrams. Historically, I think people still put that stuff together themselves. The one I was just showing of Sydney Sweeney, it's actually an education company that makes those videos. So... Yeah.

swyx [00:33:42]: I just found their website.

Justine [00:33:43]: And so they can reuse content they've already made. Yeah. And it's giving brain rot.

swyx [00:33:50]: It's fine.

Justine [00:33:51]: I know, but people are... And so, yeah, then the question is, do you guys want to make the diagrams at the top? Or, if you search for "AI B-roll generator" now, there's a variety of tools that will take your script or your audio track, extract the keywords, and then either search the internet to pull together B-roll and images for you, or generate net-new images or videos, which could potentially be an interesting way to pull it together.

Olivia [00:34:25]: I would say the other recommendation we would have for you guys to go viral with AI video stuff is this whole ecosystem. You've probably seen their content, but there are people called clippers who take existing long-form videos, like podcast interviews or even TV shows, extract the most interesting and viral clips, and then post them on their own accounts as short-form videos. And there are products like Overlap right now where, if you upload a new YouTube video to your channel, you can link your channel to Overlap, and it'll automatically review it, clip the best moments, and then publish those clips for you across Twitter or Instagram or other platforms.

Justine [00:35:06]: Can you guys see it? And it predicts the virality score, which I find crazy. So this runs all the time. It's linked to the a16z YouTube.

swyx [00:35:14]: Oh, you guys are just using it for your channel. Holy crap.

Justine [00:35:17]: I mean, I am. For example, okay, this one. You can go into the video, see what they picked, and then go in and edit. So you can say, hey, I want to remove the caption on this word, or I want to cut this word from the video completely, or I want to extend the clip or make it shorter. I want to remove curse words. I want to remove filler words. I want to remove stutters. I want to change the subtitles so that they look more brain rot. That sort of thing. And then you can generate the social posts and download.

Olivia [00:35:53]: And you can set this up on Overlap so that it'll automatically publish the short-form clips as soon as you publish the long-form video, and it'll write the tweets or the TikTok caption for you. Yeah.

swyx [00:36:04]: Which is very cool. This is exactly what I've been looking for. I just didn't know it existed. It's crazy. Yeah.

Olivia [00:36:09]: So you guys can become your own fanboy clippers and make money off of both the long-form show itself and the short-form clips. Yeah.

Justine [00:36:18]: So there are all sorts of clipping agents. It's actually a ComfyUI-type interface. Oh. Which is really cool. So this is basically what the clipping agent looks like. It is watching for any new YouTube video that is uploaded to the a16z channel. It is automatically finding interesting clips that are between 30 seconds and 140 seconds in length, and the criterion is topics that would be interesting to the tech audience. It is adding subtitles. You can then add more actions. For example, you can say, take all of the 16:9 clips and convert them to 9:16, or add smart zoom so it zooms in on the person's face when they're doing something interesting. This is not a company we're invested in. We just recently discovered it, and we were like, this is the dream.
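
The two mechanical steps described here (keeping only candidate clips between 30 and 140 seconds, then converting 16:9 frames to 9:16) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Overlap's implementation: it assumes some upstream step has already picked candidate clips as `(start, end)` timestamps in seconds, and it uses a plain center crop in place of the face-tracking "smart zoom". `Clip`, `filter_by_length`, and `center_crop_vertical` are hypothetical names.

```python
from dataclasses import dataclass

# Duration window mentioned for the clipping agent, in seconds.
MIN_CLIP_S = 30.0
MAX_CLIP_S = 140.0


@dataclass
class Clip:
    """Hypothetical candidate clip: start/end timestamps in seconds."""
    start: float
    end: float

    @property
    def duration(self) -> float:
        return self.end - self.start


def filter_by_length(clips: list[Clip], lo: float = MIN_CLIP_S,
                     hi: float = MAX_CLIP_S) -> list[Clip]:
    """Keep only clips whose duration falls inside [lo, hi] (inclusive)."""
    return [c for c in clips if lo <= c.duration <= hi]


def center_crop_vertical(width: int, height: int,
                         aspect_w: int = 9, aspect_h: int = 16) -> tuple[int, int, int, int]:
    """Return (x, y, w, h) for a centered crop with an aspect_w:aspect_h ratio.

    A center crop is a simple stand-in for face-tracking "smart zoom".
    """
    # Try keeping the full height and trimming the sides.
    crop_w = height * aspect_w // aspect_h
    crop_h = height
    if crop_w > width:
        # Frame is already narrower than the target: keep full width, trim top/bottom.
        crop_w = width
        crop_h = width * aspect_h // aspect_w
    # Round down to even sizes; H.264 with 4:2:0 chroma subsampling needs even dimensions.
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2
    x = (width - crop_w) // 2
    y = (height - crop_h) // 2
    return x, y, crop_w, crop_h


if __name__ == "__main__":
    candidates = [Clip(0, 20), Clip(100, 190), Clip(300, 500)]
    # Durations are 20 s, 90 s and 200 s, so only the middle clip survives.
    print(filter_by_length(candidates))  # [Clip(start=100, end=190)]
    # A 1920x1080 frame becomes a 606x1080 vertical strip starting at x=657.
    print(center_crop_vertical(1920, 1080))  # (657, 0, 606, 1080)
```

A center crop can cut off a speaker who sits off-center in a 16:9 frame, which is presumably why such tools offer a face-tracking zoom instead. The returned box could be handed to something like FFmpeg's `crop=w:h:x:y` filter.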

Alessio [00:37:09]: It is the dream. Yeah. I built a small podcaster tool for us that automates a lot of things, and it picks the clips. But it doesn't do any... The hard part is actually how you go from picking the timestamps to making it get a lot of views on YouTube. So we'll try that out.

swyx [00:37:26]: You know, I think there's an art in picking the clips because I think that, you know, maybe they're optimized for brain rot and we're a technical podcast. Like, they wouldn't pick the same clips that we would pick.

Justine [00:37:38]: So this is the interesting thing, too. If you guys want to be across multiple platforms, maybe you have an agent set up that purposely generates different clips for different audiences. Maybe you have it clip educational, informative, technical stuff for YouTube, and then you experiment: what if we gave it a prompt to pick brain rot, funny, controversial stuff for young people, converted it to the TikTok aspect ratio, and then posted those? What would happen to a TikTok account?

swyx [00:38:11]: Yeah. I think it's worth experimenting with a bunch of those. My hypothesis is that repurposed content usually doesn't do as well as native content. That's the general rule. In other words, we recorded this as a long-form podcast. If you clip it out, sure, you might get a little more juice out of it, but it's never going to do great because it was never made for short form. So, a little behind the scenes, we've actually explored: what if we change the way we record podcasts and only optimize for clips? Okay.

Justine [00:38:42]: But have you, so I used to agree with you, but have you seen the Vitrupo account?

swyx [00:38:46]: Yeah, that guy's pretty prolific, but I don't know what you're referring to.

Justine [00:38:49]: So he clips long-form interviews, and they go viral all the time. I also used to think that clips would never do well, but I feel like, especially in the X algorithm right now, it's a clipping era. For some reason, video is doing super well in the algorithm, especially if it's a video of a character that people know, like Sam Altman or Garry Tan or whoever. You can get a crazy amount of juice out of these clips.

swyx [00:39:19]: I think part of it is also that, as a brand, and we're seriously considering doing this, right, we have to think through everything. We, as a brand, have to resist clickbait somewhat, right? Yes. You know, we can't just keep saying, "Sam Altman says the world's going to end in two years." And, like, no. Yeah. He didn't say that. But you could misconstrue something he said to say that, you know.

Justine [00:39:43]: We have this struggle, and Olivia and I were fighting about this last night. We fight in a good-natured way about a lot of things. It's not really fighting; we're twins, so we have back-and-forth about things. But we will help each other write tweets sometimes, and she wrote a tweet intro for me that sounded like one of those spammy "AI is blowing up, everything has changed" accounts. And I had to be like, Olivia, this is not us.

Olivia [00:40:10]: Like, we need to stay on course. It's the YouTube thumbnail economy now. Yeah, for content.

swyx [00:40:18]: Right, right. I mean, we could create a sub-brand that is affiliated but not us, and we could just kind of throw that on there. And I think, look, there are some people who are just literally best served like that. Yeah. They don't have the cogsec, is what I call it, or they don't have the pride or taste or whatever you call it. Yes. But if you want your content to spread to the widest number of people, you have to adapt it to the way that they like to receive information.

Justine [00:40:49]: I think it's going to be really interesting, too, to see different ages of people. Because, at least from what I've seen, the kids already, Gen Alpha, the kids who grew up fully in the internet era, fully in the smartphone era, consume and create content much differently than Gen Z and millennials. And I hope that doesn't mean that everything has to be brain rot for people to understand it. As a habitual blog post writer, I really want there to still be space for these long-form intellectual deep dives. But I'm a little bit scared of that.

swyx [00:41:24]: No, I think there's always room for long-form, good writing, good discussions. I almost think sometimes it's just ironic and funny; that's why it's viral. You're not actually learning anything from those educational Sydney Sweeney channels. You're just like, oh, it's funny that you can do this now. This is what humanity has come to. Yes. Anything else in terms of what you're doing, like creative trends? We've talked a lot about video. Oh, I was going to bring up prompt theory. Maybe this is a nice thing to talk about. I don't know if it's too woo-woo or philosophical for you, but you brought it up. So could you explain what prompt theory is?

Justine [00:42:00]: Okay, so it sort of evolved. At first, when people realized they could make these Veo 3 characters talk in videos, it started with: what if these characters either realized they were AI-generated, or refused to accept that they were AI-generated and controlled by a prompt?

Olivia [00:42:18]: It's kind of like an existential crisis for AI characters. Yes. Like that video of the NotebookLM hosts that went viral last September. Yes. Realizing that they could be shut down and they weren't real. And he tried to call his wife, and then he realized he doesn't actually have a wife and he's just an AI-generated voice. Oh, no. It's kind of like that, but for Veo 3 characters. And it's a lot more striking because they look so real.

Justine [00:42:42]: And then the more recent evolution of prompt theory, and I think I tweeted a video about this, I don't know if I can pull it up or send it to you guys, is that now people are like, okay, what if we're actually prompted? What if we, real humans, are the AI characters in someone else's universe? Would we know it? Are we all controlled by prompts? One of the big trends for Veo 3 on TikTok right now is AI clapbacks. The format is often a young person and an old person. The young person is like, oh, my hair looks amazing. And the old person is like, oh, my hair looks amazing. And the young person is like, that's not natural hair, whatever. But it started evolving to, well, you know, your hair is just prompted. You didn't do anything to get that good hair. And then the young person will be like, well, you're on your way to the cemetery. It quickly devolves into chaos.

swyx [00:43:30]: Wait, wait, are both people in this situation real?

Justine [00:43:33]: No, they're both generated.

swyx [00:43:34]: Oh, okay.

Justine [00:43:35]: Yes, yes. But you're seeing all of these brain rot accounts of teenagers with anime profile pictures who live in the Midwest, who you would never expect to be thinking about the meta layer of AI and prompt theory, or including the concept of prompt theory in their AI clapback videos. So it's really gotten very, very broad. I think about that a ton, because sites like Reddit have existed like that for a while, where you have no context on the other person. It's an anonymous username. Almost no one is doxxed on Reddit. And so on Reddit, everyone could be bots, and what do you know? Now that LLMs are getting so good at sounding natural and pretending they have interests and pretending they have lives in which things are going on, I spend so much time thinking: would it actually be sad if I was talking with a ton of LLMs on Reddit? Or if there were just a ton of people who are always available to talk about my interests and had interesting things to say, is that the good side of the world? I don't know.

swyx [00:44:40]: Made your own Froyo brand, right?

Justine [00:44:42]: Yes.

swyx [00:44:43]: It's just a brainstorm.

Justine [00:44:44]: ChatGPT for prompting, yeah.

swyx [00:44:46]: Prompting, yeah. You can pose it. You can create artists.

Olivia [00:44:49]: I was going to say, it's exactly that. Or Breadclimp. Yes. Breadclimp is another great one. Have you guys followed Breadclimp?

swyx [00:44:55]: No. What is that?

Justine [00:44:57]: So Breadclimp, yeah, was early AI. Then they started creating videos and stuff like that of him. He got super popular. I tweeted one of the early AI videos of Breadclimp and people loved it. All right. Here is Breadclimp. Aw, it's a possum. He's very cute and he has this whole universe of characters that he interacts with. Let me see if I can find...

swyx [00:45:21]: Isn't that Ian McKellen?

Justine [00:45:22]: But the AI version. Yeah. So a lot of people liked this. And then a bunch of people started commenting that they wanted Breadclimp sweatshirts, so I actually made Breadclimp sweatshirts. We took a Breadclimp design, removed the background, and overlaid it on a sweatshirt that we had a screen printing company make. Then we ordered maybe 30 of them and distributed them to friends and family and other people in the AI video community. But the Breadclimp sweatshirts could have been a real sweatshirt brand.

swyx [00:45:55]: Oh, my God.

Justine [00:45:56]: There they are. Wait, can you zoom in on them, Olivia? Yeah. So that's us and that's Anish on our consumer team wearing our Breadclimp sweatshirts. Also, AI furniture, I don't know if you guys have seen it, especially on Facebook, actually, totally blows up. Like, it's a couch, but it's shaped like a giant cat, and the eyes glow when you sit on it, or things like that. These AI furniture and AI home design accounts are huge. And I actually saw, I'll try to see if I can find it later, one example where someone made this gorilla chair, basically, that then actually got manufactured, and you can buy it.

Olivia [00:46:36]: There are a lot of differences between platforms in the types of content that go viral and the types of people who are making it. So my biggest piece of advice is actually to set up a separate account on YouTube, on Instagram, on TikTok, on X, take a look at what people are posting there, follow a bunch of AI accounts, and then the feed will give you more. There's almost a content arbitrage thing that happens right now, where something will go viral on one platform and then there's a one- or two-day window to be the person who posts it on the next platform. It's kind of fascinating to watch what blows up, which I think is reflective of who's spending their time on which platforms. It's just amazing to watch. The AI ASMR stuff on TikTok was huge and then slowly made its way elsewhere. I think the animals diving was huge on Threads and all the Facebook products first, maybe because of the age demographic of those users, and then slowly made its way to X and other places. Justine, what do you think?

Justine [00:47:39]: I think if you want less of the brain rot, less of the stuff for people brand new to this, and more of how people are professionally using AI video, there's this guy called PJ Ace on Twitter. He made the viral Kalshi ad that aired during the NBA Finals. He's done a bunch of really cool, more commercial work, whether it's for individual musicians or brands. And he shows how a true professional would use AI to make stuff. He also shows his workflows, which is great.

swyx [00:48:11]: True professional stuff.

Justine [00:48:13]: Yes. Yeah, he's a real... He has decades of experience in film production. I think he made his own TV show before this, not with AI. He knows what he's doing.

Alessio [00:48:23]: To me, the biggest question in my mind is: are people going to be bottlenecked by how many sweatshirts they can produce, basically, to make money? It feels like it's much easier to make the content and get the audience than to actually get good merch. So I'm curious to see if, like the Overlap thing that generates the clips, we get something that just generates merch based on the latest videos, with quotes and images from them. Yeah, I'm curious to see where that goes.

Justine [00:48:48]: That would be so cool. I love that, actually. Someone should totally do that.

Alessio [00:48:53]: Yeah, request for a startup. If anybody's listening, please go build it for us. Yeah, thanks for your time. This was great. And we'll keep following the brain rot closely on social media.

Justine [00:49:06]: We will, too. And thanks for having us. And we're looking forward to seeing what content you guys make.

swyx [00:49:11]: Thank you all. Thanks, guys. Awesome.

Justine [00:49:13]: Thanks, guys.
