Our last AI PhD grad student feature was Shunyu Yao, who happened to focus on Language Agents for his thesis and immediately went to work on them for OpenAI. Our pick this year is Jack Morris, who bucks the “hot” trends by -not- working on agents, benchmarks, or VS Code forks, and is instead known for his work on the information-theoretic understanding of LLMs, starting from embedding models and latent space representations (always close to our heart).

Jack is an unusual combination of doing underrated research but somehow still being able to explain it well to a mass audience, so we felt this was a good opportunity to do a different kind of episode, going through the greatest hits of a high-profile AI PhD and relating them to questions from AI Engineering.

Papers and References Mentioned

AI grad school:

A new type of information theory:

Embeddings

Text Embeddings Reveal (Almost) As Much As Text: https://arxiv.org/abs/2310.06816

Contextual Document Embeddings: https://arxiv.org/abs/2410.02525

Harnessing the Universal Geometry of Embeddings: https://arxiv.org/abs/2505.12540

Language models

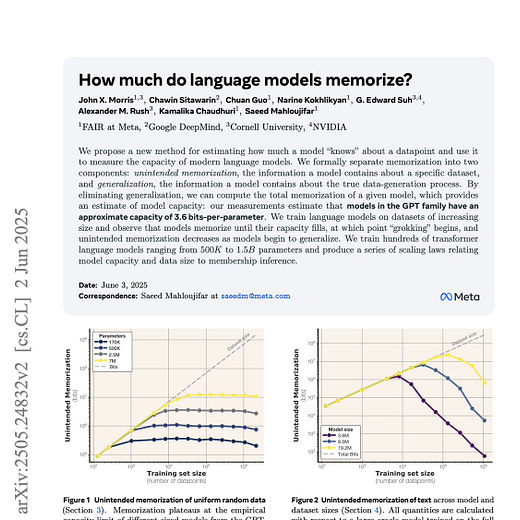

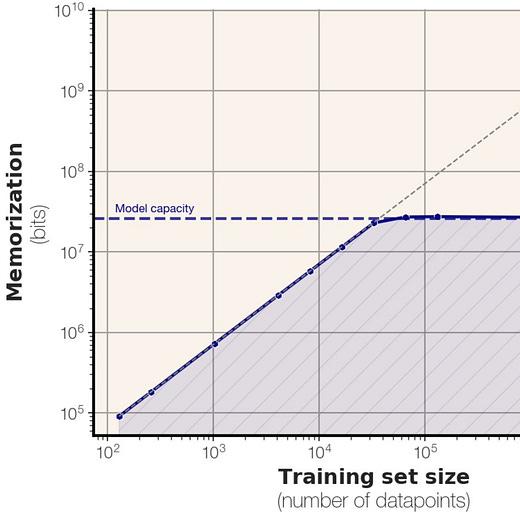

GPT-style language models memorize 3.6 bits per param:

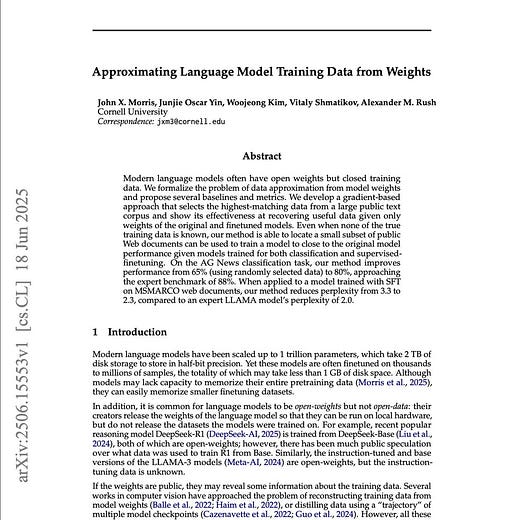

Approximating Language Model Training Data from Weights: https://arxiv.org/abs/2506.15553

LLM Inversion

“There Are No New Ideas In AI.... Only New Datasets”

misc reference: https://junyanz.github.io/CycleGAN/

—

For others hiring AI PhDs, Jack also wanted to shout out Zach Nussbaum, his coauthor on Nomic Embed: Training a Reproducible Long Context Text Embedder.

Full Video Episode

Timestamps

00:00 Introduction to Jack Morris

01:18 Career in AI

03:29 The Shift to AI Companies

03:57 The Impact of ChatGPT

04:26 The Role of Academia in AI

05:49 The Emergence of Reasoning Models

07:07 Challenges in Academia: GPUs and HPC Training

11:04 The Value of GPU Knowledge

14:24 Introduction to Jack's Research

15:28 Information Theory

17:10 Understanding Deep Learning Systems

19:00 The "Bit" in Deep Learning

20:25 Wikipedia and Information Storage

23:50 Text Embeddings and Information Compression

27:08 The Research Journey of Embedding Inversion

31:22 Harnessing the Universal Geometry of Embeddings

34:54 Implications of Embedding Inversion

36:02 Limitations of Embedding Inversion

38:08 The Capacity of Language Models

40:23 The Cognitive Core and Model Efficiency

50:40 The Future of AI and Model Scaling

52:47 Approximating Language Model Training Data from Weights

01:06:50 The "No New Ideas, Only New Datasets" Thesis