The Day The AGI Was Born

ChatGPT FOMO Antidote: I read all the tweets so you don't have to

Context

What were you doing the evening of November 30th, 2022 — the day AGI was born?

I imagine most regular folks were going about their usual routine - hobbies, TV, family, work stuff. This isn’t a judgment; I was sleeping. I may even have thought to myself: “Nothing much happened today.” I’ve been covering the new AI Summer here on this blog and, like the apocryphal story of King George III on July 4 1776, I slept through what probably will be viewed in history as the biggest change to the world since the Internet, and woke up to an absolute torrent of tweets and jailbreaks and jokes and discoveries I should’ve been on top of.

If you’re feeling the FOMO, you aren’t alone - even AI researchers are feeling it - and this is the blogpost for you. ChatGPT is as big a jump from GPT3 as GPT3 itself was from GPT2, but it has been extremely hard to get up to speed live because of the sheer quantity and variety of discoveries. Each tweet is a rabbit hole, which contributes to the FOMO. Every newsletter author furiously writing this up this weekend will add to it by linking out to dozens of tweets and hyperbolic comments.

So I will tackle this differently: you can view my notes live anytime, but here I’ll attempt to give a cohesive, concise executive summary of ChatGPT that you can use in your conversations and thinking. Longtime readers will know this isn’t my normal style, but I think the importance of this topic deserves clarity and concision.

ChatGPT Executive Summary

last updated Dec 3 2022

ChatGPT is OpenAI’s latest large language model, released on Nov 30 2022 as a chat app open to the public. Its simple interface[1] hints at its capabilities and limitations.

Like GPT3, it can explain scientific and technical concepts in a desired style or programming language, and brainstorm basically anything you need.

However, there are three very important differences you should know:

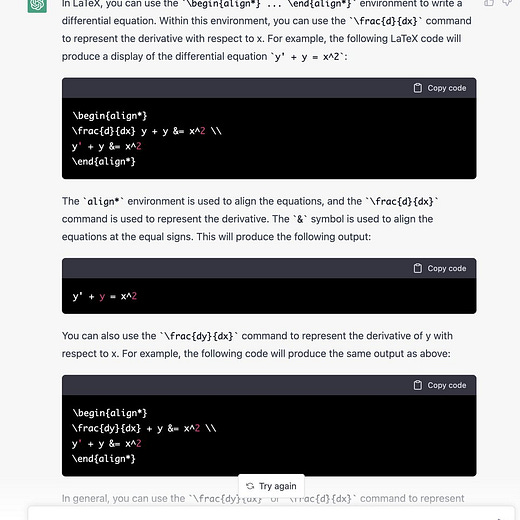

Better at Zero Shot: As a fine-tuned “GPT-3.5 series” model and an InstructGPT sibling, it is a LOT better at zero-shot generation of text that follows your instructions, whether it is generating rhyming poetry (Shakespearean sonnets, Limericks, Song parodies), emulating people (AI experts, Gangster movies, Seinfeld), or writing (college essays that get A’s and B’s from real professors, writing LaTeX, podcast intros).

It has long-term memory of up to 8192 tokens, and can take input and generate output about twice as long[2] as GPT3.

It still can’t do math, generates false information about the real world, writes bad code, and does not pass Turing, SAT, or IQ tests.[3]

If you only need zero-shot textgen, you may be able to use the recently released text-davinci-003 instead. However, you’d be losing out on…

New Chat capability: ChatGPT is also the first chat-focused large language model, and it is astoundingly good at it, meaning you can talk back to it and modify what it generates or have it continue an existing conversation.[4] Previous attempts at creating chat experiences in GPT3 involved hacky, unreliable prompt engineering to create contextual memory and extensive software engineering to allow follow-up corrections. This is now a “solved” problem, which means you can write blogs iteratively and with ~unlimited length.

Notable, flawed, but improving safety: To most people, “ChatGPT feels much more filtered, reserved, and somehow judgmental than GPT-3”.

OpenAI clearly invested a great deal of time in making ChatGPT “safe”, specifically by attempting to disable responses related to violence, terrorism, drugs, hate speech, dating, sentience, and eradicating humanity, and also purporting to disable web browsing and knowledge of current dates.

However, every single one of these precautions was compromised in the first 48 hours, using many “jailbreaks” found[5] soon after public release, causing commentators to observe that the danger signs are “like watching an Asimov novel come to life”.

Still, OpenAI has placed clear warnings[6] that this is a test, encourages reporting of results[7], and has been observed patching these discoveries in real time.

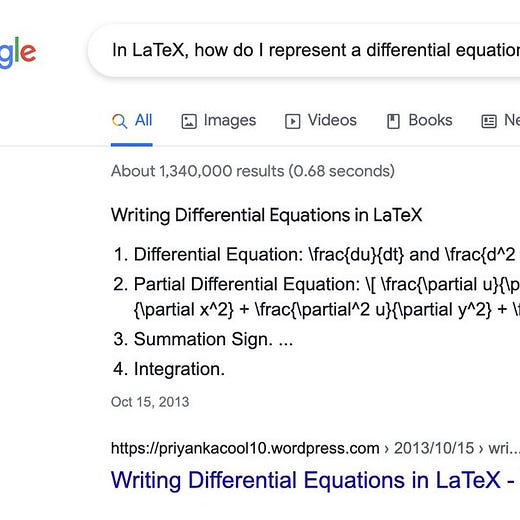

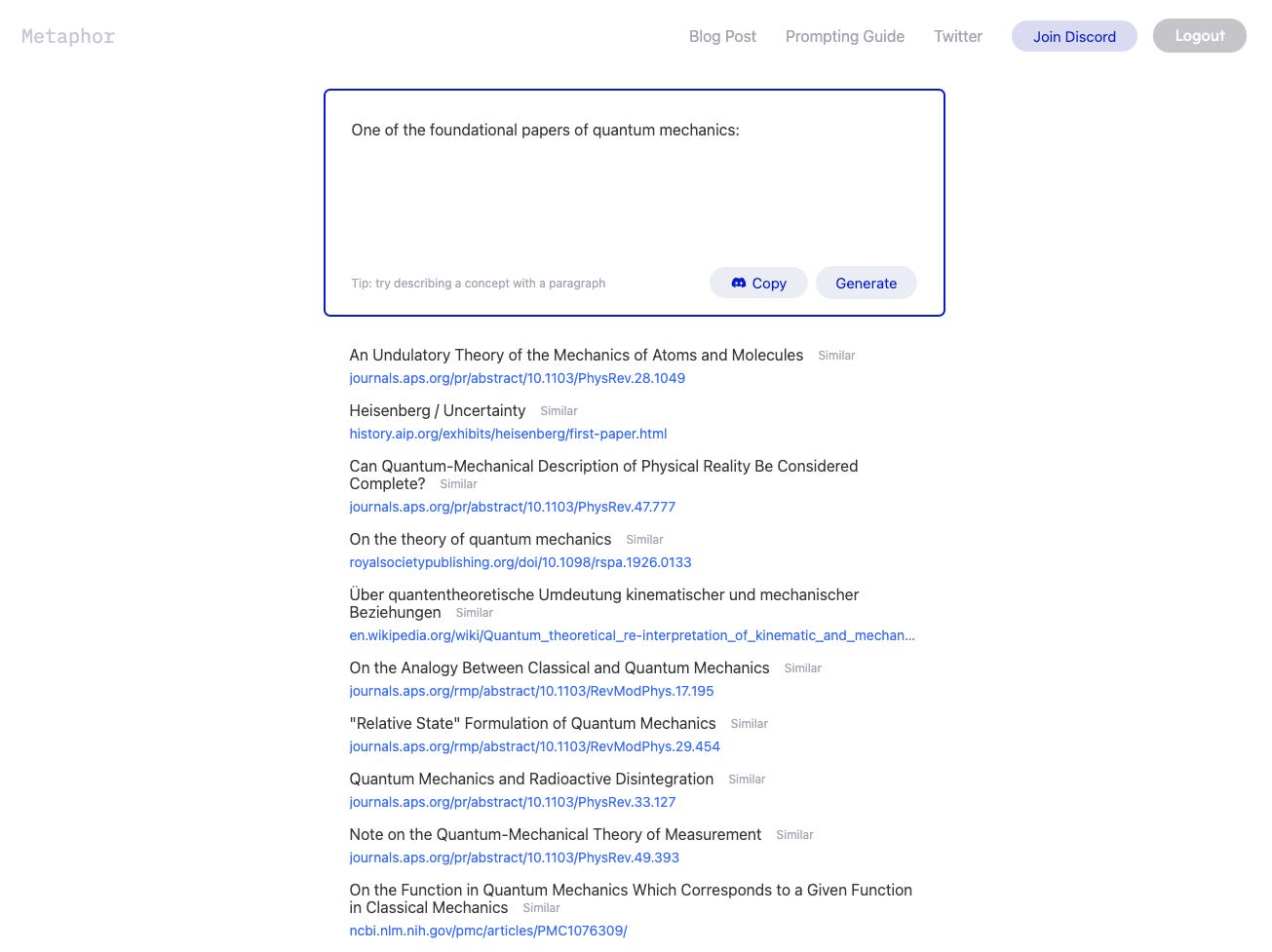
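For readers who never built one, the “hacky” prompt-stitching workaround that earlier GPT3 chat experiences relied on can be sketched roughly as follows. This is an illustration only, not how ChatGPT works internally: the names `build_prompt` and `approx_tokens`, the `Human:`/`AI:` labels, and the 4-characters-per-token estimate are all assumptions made up for this sketch, and a real app would use a proper tokenizer and send the result to a completion model.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, text), e.g. ("Human", "Hi")


def approx_tokens(text: str) -> int:
    """Rough token estimate. Assumes ~4 characters per token; not a real tokenizer."""
    return max(1, len(text) // 4)


def build_prompt(history: List[Turn], user_message: str, max_tokens: int = 4000) -> str:
    """Stitch prior turns and the new message into a single completion prompt.

    The oldest turns are dropped first until the estimated size fits the
    budget, which is why this style of "memory" silently forgets early context.
    """
    turns = history + [("Human", user_message)]
    lines = [f"{speaker}: {text}" for speaker, text in turns]
    suffix = "AI:"  # cue the model to answer as the assistant
    # Always keep the newest turn; drop from the front while over budget.
    while len(lines) > 1 and approx_tokens("\n".join(lines + [suffix])) > max_tokens:
        lines.pop(0)
    return "\n".join(lines + [suffix])
```

Every follow-up means rebuilding and resending the whole transcript, and anything that falls outside the budget is simply gone, which is the unreliability the chat interface hides from you.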

As we have previously explored, the best use cases are where creativity is more valued than precision: brainstorming, drafting, presenting information in creative ways. The biggest unresolved debate is how much a ChatGPT-like experience can replace Google: on one hand, ChatGPT answers questions far more directly and legibly than a Google results page[8]; on the other, its answers are often incorrect and unsourced[9]. The unsatisfying outcome will likely be “it depends”, yet disruptive tech tends to be worse than existing tech in almost every way but one.

The final notable development this week has been in distribution. There is no official ChatGPT API yet; however, Daniel Gross demonstrated that you can automate it using Playwright browser automation to create an unofficial API and hook it up to a WhatsApp chatbot. This technique has been cloned into a Telegram bot, Chrome extension, Python script, and Node.js script.

Why ChatGPT is a Big Effing Deal

Some people have concluded that ChatGPT is AGI, which Sam Altman dismisses as “obviously not close, lol”, but I do agree that this is the birth of something that looks like AGI. The main factor is learning that we have somehow gone much faster with Reinforcement Learning from Human Feedback (RLHF) than I knew in October (“What is AGI-Hard?”)[10]. RLHF is essentially Chat, and this is the first actually good Chat AI that humanity has ever seen: it understands when you want to make edits to prior generations versus when you want to continue on with the conversation; it knows to remember information and retain context, so you can talk naturally to it and it can keep up with you; it demonstrably knows what it can’t do and can generate code to get the information it needs, making it a good central brain for an agentic AI system.

That means that ChatGPT is likely the first[11] LLM you can present to a regular human (without any prompt engineering training) that could accomplish any particular commodity task - a general feat that meets some definitions of AGI.

Questions? Comments?

This is my attempt at being concise so I have to cut it short, but I am open to whatever I may have missed and would love your questions! Fire away below!

If you’re still on Twitter I’d appreciate a signal boost.

[2] In fact, it is a known flaw that the GPT3.5 models are about 80% more verbose than GPT3, due to a well-documented bias in the data: human evaluators tend to rate more verbose generations higher.

[3] From this we conclude that GPT is growing increasingly better at replacing wordcels rather than shape rotators.

[9] Metaphor.systems circumvents this problem by generating real links.

[10] In fact, this is if anything the most significant advance of ChatGPT, and one they have significantly undersold in their blogpost: “We trained an initial model using supervised fine-tuning: human AI trainers provided conversations in which they played both sides—the user and an AI assistant. We gave the trainers access to model-written suggestions to help them compose their responses.” That it is viable to train chatbots to work this well purely via RLHF was not widely known, and demonstrating it is a Bannister moment for the RLHF industry.

[11] Adept is arguably first, but doesn’t do chat.

Great summary. Was trying to make it memorize phone number of my friends today without talking to it as a baby. No luck. Have you noticed that it became less capable lately?

> 11 Adept is arguably first, but doesnt do chat

The only thing standing for AdeptAI is a tweet. I cannot find any examples or usages of it. It's been a few months since their tweet.