Scaling Test Time Compute to Multi-Agent Civilizations: Noam Brown

The Bitter Lesson vs Agent Harnesses & World Models, Debating RL+Reasoning with Ilya, what's *wrong* with the System 1/2 analogy, and the challenges of Test-Time Scaling

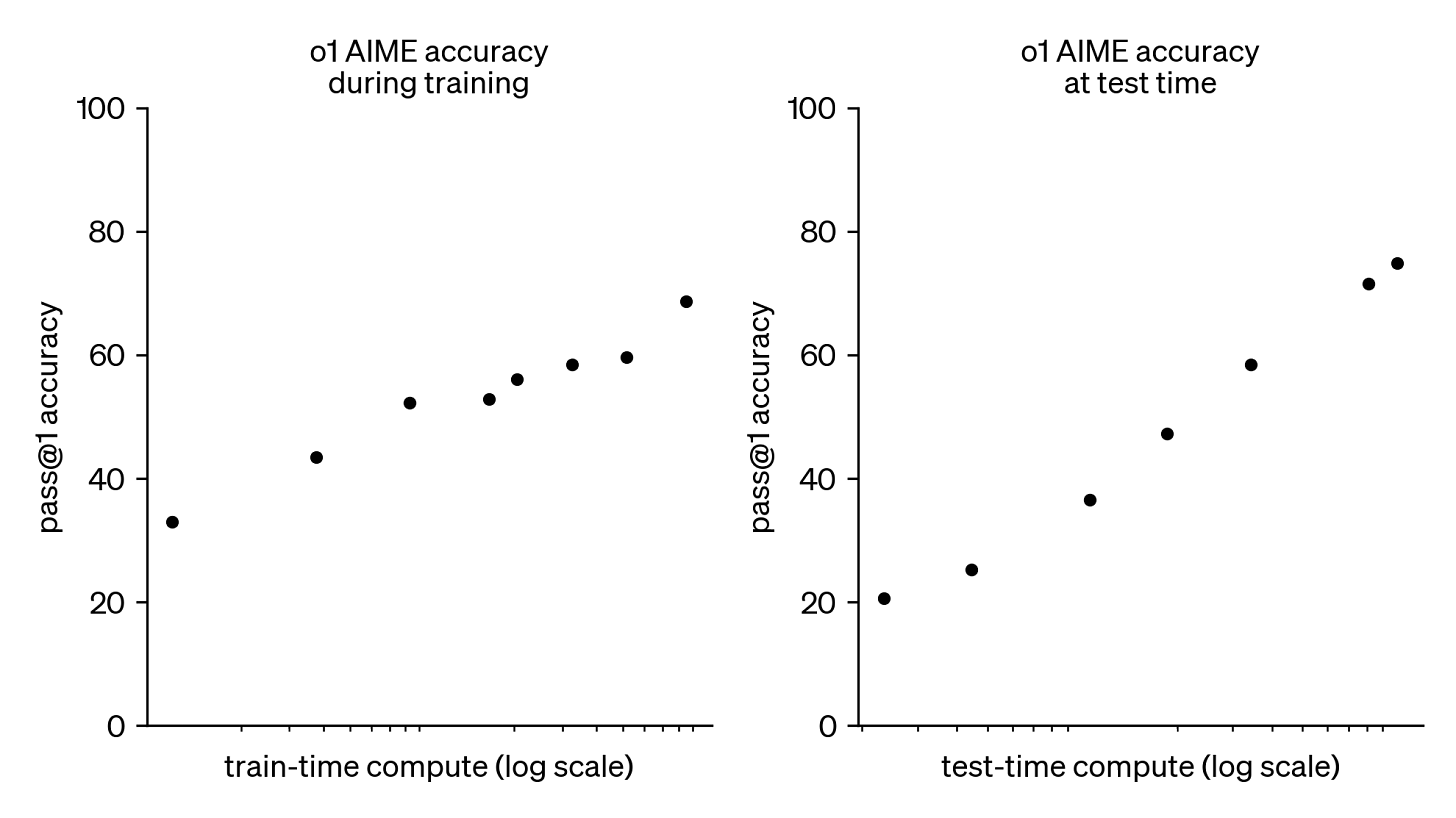

Every breakthrough in AI has been led by a core scaling insight: Moore’s Law gave way to Huang’s Law (silicon), Kaplan et al. gave way to Hoffmann et al. (data), and AlexNet kicked off the deep-learning and GPU-for-ML revolution (pretraining). As the o1 launch revealed, and with DeepSeek, Anthropic and GDM following shortly after, we’re now solidly in the era of scaling test time compute.

Noam Brown is one of the world’s leading researchers on reasoning (joint credit shared on the o1 launch video and the o1 System Card), and you may have heard him on the TED stage, OpenAI videos, and many of the world’s top AI podcasts, so we’re honored that he took some time to join ours to go deeper for an AI Engineer audience. We’ll stop gassing him up and just get right into the top highlights from today’s pod.

Our notes below:

On Reasoning

Reasoning is emergent: The “Thinking Fast and Slow” System 1 vs System 2 models for Non-reasoning vs Reasoning models, as well as the asymmetry of 1 unit of test time compute being the equivalent of 1000-10,000x more in size, is well understood now, but what is LESS understood is that it could only have happened after GPT4. As Jim Fan said, you have to scale both.

“One thing that I think is underappreciated is that the models, the pre-trained models, need a certain level of capability in order to really benefit from this extra thinking. This is kind of why you've seen the reasoning paradigm emerge around the time that it did. I think it could have happened earlier, but if you try to do the reasoning paradigm on top of GPT-2, I don't think it would have gotten you almost anything… if you ask a pigeon to think really hard about playing chess, it's not going to get that far. It doesn't matter if it thinks for a thousand years, it's like not gonna be able to be better at playing chess. So maybe it's also the case with animals and humans that you need a certain level of intellectual ability, just in terms of System 1, in order to benefit from System 2 as well.”

It’s not well known (nor confirmed) that, after GPT-3, Ilya worked on a project codenamed GPT-Zero in 2021 to explore test time compute. One surprise we had in the pod was that Ilya actually convinced Noam that reasoning LLMs were more within reach than he thought; instead of the other way around:

“…if we had a quadrillion dollars to train these models, then maybe we would, but like, you're going to hit the limits of what's economically feasible before you get to super intelligence, unless you have a reasoning paradigm. And I was convinced incorrectly that the reasoning paradigm would take a long time to figure out because it's like this big unanswered research question. Ilya agreed with me and he said I think we need this additional paradigm, but his take was that, maybe it's not that hard.”

The (unablated) hypothesis is that we could not even have gone from GPT3 → o1, we needed GPT4 and 4o first as a baseline.

Reasoning helps alignment. Safety, steerability, and alignment are very hot topics for certain parts of the AI community, and surprisingly reasoning helps:

“After we released Cicero, a lot of the AI safety community was really happy with the research and like the way it worked because it was a very controllable system. Like we conditioned Cicero on certain concrete actions and that gave it a lot of steerability to say like, okay, well, it's going to pursue a behavior that we can like very clearly interpret. And it very clearly defined, it's not just a language model running loose and doing whatever it feels like. No, it's actually like pretty steerable. And there's this whole reasoning system that steers the way the language model interacts with the human. Actually, a lot of researchers reached out to like reached out to me and said we think this is like potentially a really good way to achieve safety with these systems.”

Reasoning generalizes beyond verifiable rewards. One criticism of RLVR is that it only ever improves models in math and coding domains. Noam answers:

“I'm surprised that this is such a common perception because we've released Deep Research and people can try it out. People do use it. It's very popular. And that is very clearly a domain where you don't have an easily verifiable metric for success… And yet these models are doing extremely well at this domain. So I think that's an existence proof that these models can succeed in tasks that don't have as easily verifiable rewards.”

Visual Reasoning has limits. There has been a lot of excitement over how o3 beats Master-level GeoGuessr players. There are limits though:

“It depends on exactly the kinds of questions that you're asking. Like there are some questions that I think don't really benefit from system 2. I think GeoGuessr is certainly one where you do benefit… the thing I typically point to is information retrieval. If somebody asks you ‘when was this person born’ and you don't have access to the web, then you either know it or you don't. And you can sit there and you can think about it for a long time. Maybe you can make an educated guess… but you're not going to be able to get the date unless you actually just know it.”

Reasoning was underestimated by nonbelievers at OpenAI but born out of hitting the Data Wall.

“There was a lot of debate about, first of all, like, what is that extra paradigm? So I think a lot of the researchers looked at reasoning and RL but it was not really about scaling test time compute. It was more about data efficiency, because, you know, the feeling was that we have tons and tons of compute, but we actually are more limited by data. So there's the data wall and we're going to hit that before we hit limits on the compute. So how do we make these algorithms more data efficient? They are more data efficient, but I think that also they are also just like the equivalent of scaling up compute also by a ton… And I remember it was interesting that I talked to somebody who left OpenAI after we had discovered the reasoning paradigm, but before we announced o1 and they ended up going to a competing lab. I saw them afterwards after we announced it, and they told me that, at the time, they really didn't think the strawberry models were that big of a deal. They thought we were making a bigger deal of it than it really deserved to be. And then when we announced o1 and they saw the reaction of their coworkers at this competing lab about how everybody was like this is a big deal. And they like pivoted the whole research agenda to focus on this… a lot of this seems obvious in retrospect, but at the time it's actually not so obvious and it can be quite difficult to recognize something for what it is.”

Reasoning + Windsurf = Feeling the AGI.

“Q: Any Windsurf pro tips now that you've immersed in it?

A: I think one thing I'm surprised by is how many people don't even... know that o3 exists. Like, I've been using it day to day. It's basically replaced Google search for me. Like, I just use it all the time. And also for things like coding, I tend to just use the reasoning models. My suggestion is for people to try the reasoning models if they have not yet, because honestly, people love them. People that use them love them. Obviously, a lot more people use GPT-4o and just the default on ChatGPT and that kind of stuff. I think it's worth trying the reasoning models. I think people would be surprised what they can do.”

Test Time Compute will have challenges in scaling

“We're going to get them thinking for, instead of three minutes, three hours, and then three days, and then three weeks. There are two concerns:

One is that it becomes much more expensive to get the models to think for that long or scale up test-time compute. Like, as you scale up test-time compute, you're spending more on test-time compute, which means that, like, there's a limit to how much you could spend. That's one potential ceiling.

I should say that these models are becoming more efficient in the way they're thinking, and so they're able to do more with the same amount of test-time compute. And I think that's a very underappreciated point, that it's not just that we're getting these models to think for longer.

The second point is that, like, as you have these models think for longer, you get bottlenecked by wall-clock time. It is really easy to iterate on experiments when these models would respond instantly. It's actually much harder when they take three hours to respond.

And what happens when they have three weeks? It takes you at least three weeks to do those evaluations and to then iterate on that. And on a lot of this, you can parallelize experiments to some extent, but a lot of it, you have to run the experiment, complete it, and then see the results in order to decide on the next set of experiments. I think this is actually the strongest case for long timelines, that the models, because they just have to do so much.

And I think it depends on the domain. So drug discovery, I think, is one domain where this could be a real bottleneck. I mean, if you want to see if something, like, extends human life, it's going to take you a long time to figure out if, like, this new drug that you developed, like, actually extends human life and doesn't have, like, terrible side effects along the way.”
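To make the wall-clock concern concrete, here is a minimal back-of-the-envelope sketch. It assumes the worst case Noam describes, where every experiment must finish before the next one can be chosen, and a one-year horizon; the function name and the horizon are our own illustrative choices, not anything from the pod.

```python
from datetime import timedelta

# Assumption for illustration: a one-year research horizon.
YEAR = timedelta(days=365)

def max_sequential_runs(think_time, horizon=YEAR):
    """Upper bound on back-to-back runs when each run must
    complete before the next one can be designed."""
    return horizon // think_time  # timedelta // timedelta gives an int

# Think times taken from the quote above.
think_times = {
    "3 minutes": timedelta(minutes=3),
    "3 hours": timedelta(hours=3),
    "3 days": timedelta(days=3),
    "3 weeks": timedelta(weeks=3),
}

for label, think_time in think_times.items():
    print(f"{label:>9}: at most {max_sequential_runs(think_time):,} sequential runs per year")
```

Parallel experiments raise the total number of runs, but they do not shorten the chain of runs that depend on each other's results, which is the bottleneck being described.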

Other commentators have also considered that data for long horizon RL is further away than people think.

Still, the age of test time scaling could not have come at a better (or earlier) time, just as the Orion run is estimated to have maxed out compute until SG1 comes online in December.

On Multi-Agents

There’s been a lot of debate about Multi-Agents recently, with Cognition saying Don’t Build Multi-Agents and Anthropic saying how to build Multi-Agents. There have been many, many, many takes on the debate, but Noam has been doing multi-agent RL for years and has announced the multi-agent team at OpenAI… although that is just the most prominent of a few possible research directions…

“I think the team [name], in many ways, is actually a misnomer, because we're working on more than just multi-agent. Multi-agent is one of the things we're working on.

Some other things we're working on is just like being able to scale up test time compute by a ton. So how, you know, we get these models thinking for 15 minutes now. How do we get them to think for hours? Days, even longer? And be able to solve incredibly difficult problems. So that's one direction that we're pursuing.

Multi-agent is another direction. And here, I think there's a few different motivations. We're interested in both the collaborative and the competitive aspect of multi-agent.

I think the way that I describe it is people often say in AI circles that humans occupy this very narrow band of intelligence. And AIs are just going to, like, quickly catch up. And then surpass, like, this band of intelligence. And I actually don't think that the band of human intelligence is that narrow. I think it's actually quite broad. Because if you compare anatomically identical humans from, you know, caveman times, they didn't get that far in terms of what we would consider intelligence today, right? Like, they're not putting a man on the moon, you know, they're not building semiconductors or nuclear reactors or anything like that. And we have those today, even though we as humans are not [anatomically different]. And so, what's the difference? Well, I think the difference is that you have thousands of years, a lot of humans, billions of humans, cooperating and competing with each other, building up civilization over time. The technology that we're seeing is the product of this civilization.

And I think similarly, the AIs that we have today are kind of like the cavemen of AI. And I think that if you're able to have them cooperate and compete with billions of AIs over a long period of time and build up a civilization, essentially, the things that they would be able to produce and answer would be far beyond what is possible today with the AIs that we have today.”

On the Bitter Lesson

Bitter Lesson in Multi-Agents:

“…the way that we are approaching multi-agent in the details and the way we're actually going about it is, I think, very different from how it's been done historically and how it's being done today by other places. I've been in the multi-agent field for a long time… I think that a lot of the approaches that have been taken have been very heuristic and haven't really been following, like, the bitter lesson approach to scaling and research.”

Bitter Lesson vs World Models & Yann LeCun:

“… I think it's pretty clear that as these models get bigger, they have a world model, and that world model becomes better with scale. So, they are implicitly developing a world model, and I don't think it's something that you need to explicitly model… there was this long debate in the multi-agent AI community for a long time about whether you need to explicitly model other agents, like, other people, or if they can be implicitly modeled as part of the environment.

For a long time, I was, like, on the, took the perspective of, like, of course you have to, like, explicitly model these other agents because they're behaving differently from the environment. Like, they take actions, they're unpredictable, you know, they have agency. But I think I've actually shifted over time to thinking that actually, if these models become smart enough, they develop things like theory of mind. They develop an understanding that they're... That they're agents that can take actions and have motives and all this stuff. And these models just develop that implicitly with scale and more capable behavior broadly. So, that's the perspective I take these days.”

Combining Open-Endedness, Multi-Agents, and Self-Play: OpenAI has written about the Weak to Strong problem, and Tim Rocktaschel, head of OpenEndedness at GDM, gave a very well received keynote at ICLR in Singapore (full video here) which inspired a question about the relationship between multi-agent scaling beyond human capabilities (the ultimate limiter in the Bitter Lesson):

“Q: One of the most consistent findings is always that it's better for AIs to self-play and improve competitively, as opposed to sort of humans training and guiding them. And you find that with, AlphaZero and R1 zero. Do you think this will hold for multi-agents, like, self-play to improve better than humans?

A: So, this is a great question. And... I think this is, worth expanding on. So, I think a lot of people today see self-play as the next step and maybe the last step that we need for superintelligence.

And I think if you look at something like AlphaGo and AlphaZero, we seem to be following a very similar trend, right?

Like, the first step in AlphaGo was you do large-scale pre-training. In that case, it was on human Go games. With LLMs, it's pre-training on, you know, tons of, like, internet data. And that gets you a strong model, but it doesn't get you a superhuman model.

And then the next step in the AlphaGo paradigm is you do large-scale test time compute or, like, large-scale inference compute. And in that case with MCTS. And now we have, like, reasoning models that also do, like, this large-scale inference compute. And, again, that, like, boosts the capabilities a ton.

Finally, with AlphaGo and AlphaZero, you have self-play, where the model plays against itself, learns from those games, gets better and better and better, and just goes from something that's, like, around human-level performance to, like, way beyond human capability. These Go policies now are so strong that it's just incomprehensible. Like, what they're doing is incomprehensible to humans. Same thing with chess. And we don't have that right now with language models. And so it's, like, it's really tempting to look at that and say, like, oh, well, we just need these, like, AI models to now interact with each other and learn from them. And then they're just going to, like, get to superintelligence.

… The challenge is that Go is this two-player zero-sum game. And two-player zero-sum games have this very nice property where when you do self-play, you are converging to a minimax equilibrium. And I guess I should take a step back and say, like, in two-player zero-sum games, two-player zero-sum games are chess, Go, even two-player poker, all two-player zero-sum. Well, that's not true. What you typically want is what's called a minimax equilibrium. This is that GTO policy, this policy that you play where you're guaranteeing that you're not going to lose to any opponent in expectation. I think in chess and Go, that's, like, pretty clearly what you want. Interestingly, when you look at poker, it's not as obvious. In a two-player zero-sum version of poker, you could play the GTO minimax policy, and that guarantees that you won't lose to any opponent on Earth. But, again, I mentioned you're not going to make as much money off of a weak player as you could if you instead played an exploitative policy. So, there's this question of, like, what do you want? Do you want to make as much money as possible, or do you want to guarantee that you're not going to lose to any human alive? What all the bots have decided is, like, well, what all the, like, AI developers in these games have decided is, like, well, we're going to choose the minimax policy. And, conveniently, that's exactly what self-play converges to. If you have these AIs play against each other, learn from their mistakes, they converge over time to this minimax policy, guaranteed. But once you go outside a two-player zero-sum game, that's actually not a useful policy anymore. You don't want to just, like, have this very defensive policy, and you're going to end up with really weird behavior if you start doing the same kind of self-play in things like math.”
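The GTO-versus-exploitative trade-off and the convergence claim can both be sketched on rock-paper-scissors, a tiny two-player zero-sum game. This is only an illustration under our own assumptions: the pod does not name a specific self-play algorithm, so we swap in regret matching (a standard building block in poker AI), and the payoff table, function names, starting bias and iteration count are all hypothetical choices.

```python
# Payoffs for the row player; the column player receives the negation.
# Action order: rock, paper, scissors.
PAYOFF = [
    [0, -1, 1],
    [1, 0, -1],
    [-1, 1, 0],
]
ACTIONS = range(3)

def expected_value(row_policy, col_policy):
    """Expected payoff to the row player."""
    return sum(row_policy[i] * col_policy[j] * PAYOFF[i][j]
               for i in ACTIONS for j in ACTIONS)

def exploitability(policy):
    """Payoff a best-responding opponent earns against `policy`.
    Rock-paper-scissors is symmetric, so one function serves both seats."""
    return max(sum(PAYOFF[i][j] * policy[j] for j in ACTIONS) for i in ACTIONS)

def regret_matching_policy(regrets):
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    return [p / total for p in positive] if total > 0 else [1 / 3] * 3

def self_play(iterations=20000):
    """Both players run regret matching; returns their average policies."""
    # Assumed starting bias: player 1 begins by always playing rock.
    regrets = [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    policy_sums = [[0.0] * 3, [0.0] * 3]
    for _ in range(iterations):
        p1 = regret_matching_policy(regrets[0])
        p2 = regret_matching_policy(regrets[1])
        # Expected payoff of each pure action against the opponent's current policy.
        u1 = [sum(PAYOFF[a][j] * p2[j] for j in ACTIONS) for a in ACTIONS]
        u2 = [sum(-PAYOFF[i][b] * p1[i] for i in ACTIONS) for b in ACTIONS]
        for player, (policy, utils) in enumerate(((p1, u1), (p2, u2))):
            value = sum(policy[a] * utils[a] for a in ACTIONS)
            for a in ACTIONS:
                regrets[player][a] += utils[a] - value
                policy_sums[player][a] += policy[a]
    return [[s / iterations for s in sums] for sums in policy_sums]

gto = [1 / 3] * 3              # minimax policy for rock-paper-scissors
weak_player = [1.0, 0.0, 0.0]  # always plays rock
exploiter = [0.0, 1.0, 0.0]    # always plays paper

print("GTO vs weak player:      ", expected_value(gto, weak_player))
print("Exploiter vs weak player:", expected_value(exploiter, weak_player))
print("Exploitability of GTO:      ", exploitability(gto))
print("Exploitability of exploiter:", exploitability(exploiter))

average_p1, average_p2 = self_play()
print("Self-play average policies:", average_p1, average_p2)
```

The uniform policy never loses in expectation but also wins nothing from the always-rock player, while the exploiter wins every hand against that player and loses every hand to anyone who switches to scissors. The averaged self-play policies drift toward the uniform minimax policy, which is the convergence property that stops being useful outside two-player zero-sum games.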

On Games

From beating everyone at Poker, to ranking top 10% in the world at Diplomacy with LLMs, to personally winning the World Diplomacy Championship himself, games are a huge part of Noam’s thinking and career. But not just any kind of game…

“…I have this like huge store of knowledge on AI for imperfect information games. Like this is my area of research for so long. And I know all these things, but I don't get to talk about it very often.

We've made superhuman poker AIs for No Limit Texas Hold'em. One of the interesting things about that is that the amount of hidden information is actually pretty limited because you have two hidden cards when you're playing Texas Hold'em. And so the number of possible states that you could be in is 1,326 when you're playing heads up at least. And you know, that's multiplied by the number of other players that there are at the table, but it's still like not a massive number…

The problem is that as you scale the number of hidden possibilities, like the number of possible states you could be in, that approach breaks down. And there's still this very interesting unanswered question of what do you do when the number of hidden states becomes extremely large? You know, so if you go to Omaha poker, where you have four hidden cards, there are things you could do. There are kind of heuristics that you could use to reduce the number of states, but actually it's still a very difficult question.

And then if you go to a game like Stratego where you have 40 pieces, so there's like close to 40 factorial different states you could be in. Then all these existing approaches that we used for poker break down and you need different approaches…. If you expand those techniques, maybe you get them to work on things like Stratego and Magic the Gathering, but they're still going to be limited. They're not going to get you, like, superhuman on Codeforces with language models.

So I think it's more valuable to just focus on the very general reasoning techniques. And one day as we improve those, I think we'll have a model that just out of the box one day plays Magic the Gathering at a superhuman level. And I think that's the more important and more impressive research direction.”
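The state counts in the quote are simple combinatorics, and a quick sketch shows how fast they blow up. The 52-card deck is standard; treating 40! as a loose ceiling for Stratego follows Noam's "close to 40 factorial" phrasing rather than an exact count, and the variable names are ours.

```python
from math import comb, factorial

DECK_SIZE = 52

# Hidden two-card hands an opponent could hold in Texas Hold'em.
holdem_hands = comb(DECK_SIZE, 2)

# Hidden four-card hands in Omaha.
omaha_hands = comb(DECK_SIZE, 4)

# Loose ceiling on hidden Stratego setups: orderings of 40 pieces.
stratego_ceiling = factorial(40)

print(f"Hold'em hidden hands:  {holdem_hands:,}")
print(f"Omaha hidden hands:    {omaha_hands:,}")
print(f"Stratego (40!) approx: {stratego_ceiling:.2e}")
```

Going from two hidden cards to four multiplies the count by roughly 200, and Stratego sits dozens of orders of magnitude beyond that, which is why enumerating hidden states stops being an option.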

Side note: the LLMs self playing Diplomacy challenge Noam asks for in the pod was shipped at the AIE World’s Fair right after :)

It was a huge honor to host Noam for the pod and we hope you listen to the full thing to pick up even more of the tangents, nuggets, and advice! Tell us if we missed something big.

Timestamps

00:00 Intro – Diplomacy, Cicero & World Championship

02:00 Reverse Centaur: How AI Improved Noam’s Human Play

05:00 Turing Test Failures in Chat: Hallucinations & Steerability

07:30 Reasoning Models & Fast vs. Slow Thinking Paradigm

11:00 System 1 vs. System 2 in Visual Tasks (GeoGuessr, Tic-Tac-Toe)

14:00 The Deep Research Existence Proof for Unverifiable Domains

17:30 Harnesses, Tool Use, and Fragility in AI Agents

21:00 The Case Against Over-Reliance on Scaffolds and Routers

24:00 Reinforcement Fine-Tuning and Long-Term Model Adaptability

28:00 Ilya’s Bet on Reasoning and the O-Series Breakthrough

34:00 Noam’s Dev Stack: Codex, Windsurf & AGI Moments

38:00 Building Better AI Developers: Memory, Reuse, and PR Reviews

41:00 Multi-Agent Intelligence and the “AI Civilization” Hypothesis

44:30 Implicit World Models and Theory of Mind Through Scaling

48:00 Why Self-Play Breaks Down Beyond Go and Chess

54:00 Designing Better Benchmarks for Fuzzy Tasks

57:30 The Real Limits of Test-Time Compute: Cost vs. Time

1:00:30 Data Efficiency Gaps Between Humans and LLMs

1:03:00 Training Pipeline: Pretraining, Midtraining, Posttraining

1:05:00 Games as Research Proving Grounds: Poker, MTG, Stratego

1:10:00 Closing Thoughts – Five-Year View and Open Research Directions

Transcript

Alessio [00:00:04]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel, and I'm joined by my co-host Swyx, founder of SmolAI.

swyx [00:00:12]: Hello, hello, and we're here recording on a holiday Monday with Noam Brown from OpenAI. Welcome. Thank you. So glad to have you finally join us. A lot of people have heard you. You've been rather generous with your time on podcasts, like Lex Fridman, and you've done a TED Talk recently just talking about the thinking paradigm. But I think maybe perhaps your most interesting recent achievement is winning the World Diplomacy Championship. Yeah. In 2022, you built like sort of Cicero, which was top 10% of human players. I guess my opening question is, how has your diplomacy playing changed since working on Cicero and now personally playing it?

Noam [00:00:52]: When you work on these games, you kind of have to understand the game well enough to like be able to debug your bot. Because if the bot does something that's like really radical and like that humans typically wouldn't... You're not sure if that's like a mistake or if that's just like if it's a bug in the system or it's actually just like the bot being brilliant. When we were working on Diplomacy, I kind of like did this deep dive, like trying to understand the game better. I played in tournaments. I like watched a lot of like tutorial videos and commentary videos on games. And over that process, I got better. And then also seeing the bot, like the way it would behave in these games. Like sometimes it would do things that humans typically wouldn't do. And that taught me about the game as well. When we released Cicero, we announced it in like late 2022. I still found the game like really fascinating. And so I like kept up with it. I like continued to play. And that led to me winning the World Championship in 2025.

swyx [00:01:45]: So just a couple of months ago. There's always a question of like centaur systems where humans and machines work together. Like, was there an equivalent of what happened in Go where you updated your play style?

Noam [00:01:55]: If you're asking if I used Cicero when I played in the tournament, the answer is no. Seeing the way the bot played and like... Taking inspiration from that, I think, did help me in the tournament. Yeah. Yeah.

swyx [00:02:06]: Do people now ask Turing questions every single time when they're playing Diplomacy, to try to tell like if the person they're playing with is a bot or a human? Yeah. Like that's the one thing you were worried about when you started.

Noam [00:02:19]: It was really interesting when we were working on Cicero because like, you know, we didn't have the best language models. We were really bottlenecked on the quality of the language models. And sometimes the bot would do, would say like bizarre things. Like, you know, 99% of the time it was fine. But then like every once in a while it would say this like really bizarre thing, like it would just hallucinate about something. Somebody would reference something that they said earlier in a conversation with the bot and the bot would be like, I have no idea what you're talking about. I never said that. And then the person would be like, look, you can just scroll up in the chat and it's literally right there. And the bot would be like, no, you're lying. Oh, context windows. And when it does these kinds of things, like people just kind of like shrugged it off as like, oh, that's just, you know, the person's tired or they're drunk or whatever. Or they're just like trolling me. But I think like, that's because people weren't looking for a bot. They weren't expecting a bot to be in the games. We were actually really scared because we were afraid that people would, would figure out at one point that there's a bot in these games and then they would just like always be on the lookout for it. And if you're, if you're looking for it, you're able to spot it. That's the thing. So I think now that it's announced and that people know to look for it, I think they would have an easier time spotting it. Now that said, the language models have also gotten a lot better since 2022. It's adversarial. Yeah. So at this point, like, you know, the truth is, you know, GPT-4o and, like, o3, these models are like passing the Turing test. So I don't think they can really ask that many Turing test questions that would actually make a difference.

Alessio [00:03:39]: And Cicero was very small, like 2.7B, right?

Noam [00:03:42]: It was a very small language model. Yeah. It was one of the things we realized over the course of the project that like, oh yeah, you, you really benefit a lot from just having like larger language models. Right.

Alessio [00:03:50]: Yeah. How do you think about today's perception of AI and a lot of like maybe the safety discourse of like, you know, you're going to build a bot that is really good at persuading people into like helping them win. Right. Yeah. A game. And I think maybe today labs want to say they don't work on that type of problem. How do you think about that dichotomy, so to speak, between the two?

Noam [00:04:08]: You know, honestly, like after we released Cicero, a lot of the AI safety community was really happy with the research and like the way it worked because it was a very controllable system. Like we conditioned Cicero on certain concrete actions and that gave it a lot of steerability to say like, okay, well, it's going to pursue a behavior that we can like very clearly interpret. And it very clearly defined, it's not just like, oh, it's a language model, like running loose and doing whatever it feels like. No, it's actually like pretty steerable. And there's this whole reasoning system that steers the way the language model interacts with the human. Actually, a lot of researchers reached out to like reached out to me and said, like, we think this is like potentially a really good way to achieve safety with these systems.

swyx [00:04:50]: I guess the last Diplomacy-related question that we might have is, have you updated or tested like o-series models on Diplomacy? And would you, would you expect, yeah, a lot more difference?

Noam [00:05:01]: I have not. I think I said this on Twitter at one point that I think this would be a great benchmark. I would love to see all the leading bots play a game of diplomacy with each other and see like who does best. And I think a couple of people have like taken inspiration from that and are actually like building out these benchmarks and like evaling the models. My understanding is that they don't do very well right now. Um, but I think, I think it really is a fascinating benchmark and I think it would be, um, yeah, I think it'd be a really cool thing to try out.

swyx [00:05:25]: Well, we're going to go a little bit into the o-series now. I think the last time you did a lot of publicity, you were just launching o1, you did your TED talk and everything. How has the vibe, how have the vibes changed just in general? You said you were very excited to learn from domain experts, like in chemistry, like how they review the o-series models. Like how have you updated since let's say end of last year?

Noam [00:05:48]: I think the trajectory was pretty clear, pretty early on in the development cycle. And I think that everything that's unfolded since then has been pretty on track for what I expected. So I wouldn't say that my perception of where things are going has honestly changed that much. Um, I think that we're going to continue to see, as I said before, this paradigm continue to progress rapidly. And I think that that's true even today, that we, we saw that with like going from o1-preview to o1 to o3, consistent progress. And we're going to continue to see that going forward. And I think that we're going to see a broadening of what these models can do as well. You know, like we're going to start seeing agentic behavior. Um, we're already starting to see that. Like honestly, for me, o3, I've been using it a ton in my day-to-day life. I just find it so useful, especially the fact that it can now browse the web and like, you know, do meaningful research on my behalf. Like it's kind of like a mini Deep Research where you can just get a response in three minutes. So yeah, I think it's just going to continue to become more and more useful and more powerful as time goes on and pretty quickly.

Alessio [00:06:51]: Yeah. And talking about deep research, you tweeted about if you need proof that we can do this in unverifiable domains, deep research is kind of like a great example. Can you maybe talk about. If there's something that people are missing, you know, I feel like I hear that repeated a lot. It's like, you know, it's easy to do in coding and math, but like not in these other domains.

Noam [00:07:07]: I frequently get this question, including from pretty established AI researchers that, okay, we're, we're seeing these like reasoning models excel in math and coding and these, these easily verifiable domains, but are they ever going to succeed in domains where success is less well-defined? I'm surprised that this is such a common perception because we've released Deep Research and people can try it out. People do use it. It's very popular. And that is very clearly a domain where you don't have an easily verifiable metric for success. It's very like, what, what is the best research report that you could generate? And yet these models are doing extremely well at this, at this domain. So I think that's like an existence proof that these models can succeed in tasks that don't have as easily verifiable rewards.

Alessio [00:07:51]: Is it because there's also not necessarily like a wrong answer? Like there's a spectrum of deep research quality, right? You can have like a report that like looks good, but the information is kind of so-so, and then you have a great report. Do you think people have a hard time understanding the difference when they get the result?

Noam [00:08:07]: My impression is that people do understand the difference when they get a result. And I think that they're surprised at how good the deep research results are. Certainly, it's not a hundred percent. It could be better and we're going to make it better. But I think people can tell the difference between a good report and a bad report. And certainly between a good report and a mediocre report.

Alessio [00:08:24]: And that's enough to kind of feed the loop later to build the product and improve the model performance.

Noam [00:08:29]: I mean, I think if you're in a situation where people can't tell the difference between the outputs, then it doesn't really matter if you're hill climbing on progress. These models are going to get better at domains where there is a measure of success. Now, this idea that it has to be easily verifiable or something like that, I don't think that's true. I think that you can have these models do well even in domains where success is very difficult to define and could sometimes even be subjective.

swyx [00:08:56]: One thing people lean on a lot, and you've done it as well, is the Thinking, Fast and Slow analogy for thinking models. And I think it's reasonably well diffused now, the idea that this is kind of the next scaling paradigm. All analogies are imperfect. What is one way in which Thinking, Fast and Slow, or System 1 and System 2, kind of doesn't transfer to how we actually scale these things?

Noam [00:09:21]: One thing that I think is underappreciated is that the models, the pre-trained models, need a certain level of capability in order to really benefit from this extra thinking. This is kind of why you've seen the reasoning paradigm emerge around the time that it did. I think it could have happened earlier, but if you try to do the reasoning paradigm on top of GPT-2, I don't think it would have gotten you almost anything. Is this emergence? Hard to say if it's emergence necessarily. I haven't done the measurements to really define that clearly. But I think it's pretty clear: people tried chain of thought with really small models and they saw that it just didn't really do anything. Then you go to bigger models and it starts to give a lift. I think there's a lot of debate about the extent to which this kind of behavior is emergent, but clearly there is a difference. So it's not like there are these two independent paradigms. I think that they are related in the sense that you need a certain level of System 1 capability in your models in order to be able to benefit from System 2. Yeah.

swyx [00:10:22]: I have tried to play amateur neuroscientist before and tried to compare it to the evolution of the brain and how you have to evolve the cortex first before you evolve the other parts of the brain. And perhaps that is what we're doing here. Yeah.

Noam [00:10:36]: And you could argue that actually this is not that different from the System 1, System 2 paradigm, because, you know, if you ask a pigeon to think really hard about playing chess, it's not going to get that far. It doesn't matter if it thinks for a thousand years, it's not going to be able to be better at playing chess. So maybe you do still also, with animals and humans, need a certain level of intellectual ability, just in terms of System 1, in order to benefit from System 2 as well. Yeah.

swyx [00:11:00]: Just as a side tangent, does this also apply to visual reasoning? So let's say now we have the 4o, natively omni model type of thing, then that also makes o3 really good at GeoGuessr. Does that apply to other modalities too?

Noam [00:11:17]: I think the evidence is yes. It depends on exactly the kinds of questions that you're asking. There are some questions that I think don't really benefit from System 2. I think GeoGuessr is certainly one where you do benefit. Image recognition, if I had to guess, is one of those things where you probably benefit less from System 2 thinking. Cause you know it or you don't. Yeah, exactly. There's no way. Yeah. And the thing I typically point to is just information retrieval. If somebody asks you, when was this person born, and you don't have access to the web, then you either know it or you don't. And you can sit there and think about it for a long time. Maybe you can make an educated guess. You can say, well, this person probably lived around this time, and so this is a rough date, but you're not going to be able to get the date unless you actually just know it.

swyx [00:12:01]: But like spatial reasoning, like tic-tac-toe might be better. Cause you have all the information there. Yeah.

Noam [00:12:06]: And I think it's true that with tic-tac-toe, we see that GPT-4.5 falls over. You know, it plays decently. Well, I shouldn't say it falls over. It does reasonably well. It can draw the board. It can make legal moves, but it will make mistakes sometimes. And you really need that System 2 to enable it to play perfectly. Now it's possible that if you got to GPT-6 and you just did System 1, it would also play perfectly. I guess we'll know one day, but I think right now you would need System 2 to really do well.

Alessio [00:12:34]: What do you think are the things that you need in System 1? So obviously general understanding of game rules. Do you also need to understand some sort of metagame, like, you know, usually this is how you value pieces in different games? How do you generalize in System 1, so that then in System 2 you can kind of get to the gameplay, so to speak?

Noam [00:12:53]: I think the more that you have in System 1... this is the same thing with humans, you know? When humans are playing a game like chess for the first time, they can apply a lot of System 2 thinking to it. And if you apply a ton of System 2 thinking to it, like if you just present a really smart person with a completely novel game and you tell them, okay, you're going to play this game against an AI or a human that's mastered this game, and you tell them to sit there and think for three weeks about how to play this game, my guess is they could actually do pretty well. But it certainly helps to build up that System 1 thinking, to build up intuition about the game, because it will just make you so much faster.

Alessio [00:13:35]: I think the Pokemon example is a good one, where System 1 kind of has maybe all this information about games, and then once you put it in the game, it still needs a lot of harnesses to work. And I'm trying to figure out how much we can take things from the harness and have them in System 1, so that then System 2 is as harness-free as possible.

Noam [00:13:53]: But I guess that's like the question about generalizing games and an AI. Yeah, I guess I view that as a different question. I think the question about harnesses, in my view, is that the ideal harness is no harness. Right. I think harnesses are like a crutch that eventually we're going to be able to move beyond. So only tool calls. And you could just ask o3. And actually, it's interesting, because when this playing Pokemon thing kind of emerged as this benchmark, I was actually pretty opposed to evaling this with our OpenAI models, because my feeling is, okay, if we're going to do this eval, let's just do it with o3. How far does o3 get without any harness? How far does it get playing Pokemon? And the answer is not very far, you know, and that's fine. I think it's fine to have an eval where the models do terribly. And I don't think the answer to that should be, well, let's build a really good harness so that now it can do well on this eval. I think the answer is, okay, well, let's just improve the capabilities of our models so they can do well at everything. And then they also happen to make progress on this eval.

Alessio [00:14:56]: Would you consider things like checking for a valid move a harness, or is this in the model? You know, with chess, you can either have the model learn in System 1 what moves are valid and what it can and cannot do, versus figuring it out in System 2.

Noam [00:15:11]: I think a lot of this is design questions. For me, I think you should give the model the ability to check if a move is legal, if you want. That could be an option in the environment: okay, here's a tool call that you can make to see if an action is legal. If it wants to use that, it can. And then there's a design question of, well, what do you do if the model makes an illegal move? And I think it's totally reasonable to say, well, if they make an illegal move, then they lose the game. Like, I don't know, what happens when a human makes an illegal move in a game of chess? I actually don't know. I haven't played chess that much. Yeah, me neither. You're just not allowed to? Yeah. Like, do you just lose the game? I don't know.

swyx [00:15:44]: So if that's, if that's the case, then I think it's totally reasonable to say like, yeah, we're going to have an eval where that's also the criteria for the AI models. Yeah. But I think like, maybe one way to interpret that in sort of researcher terms is, are you allowed to do search? And one of the famous findings from DeepSeek is that MCTS wasn't that useful to them. But I think like, there are a lot of engineers trying out search and spending a lot of tokens doing that. And maybe it's not worth it.

Noam [00:16:08]: Well, I'm making a distinction here: a tool call to check whether a move is legal or illegal is different from actually making that move and then seeing whether it ended up being legal or illegal. So if that tool call is available, I think it's totally fine to make that tool call and check whether a move is legal or illegal. I think it's different to have the model say, oh, I'm making this move. Yeah. And then it gets feedback that, oh, you made an illegal move. And so then it's like, oh, just kidding, I'm going to do something else now. So that's the distinction I'm drawing.
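
Editor's note: the distinction Noam draws here can be sketched in a few lines of Python. This is only an illustration under assumed names (`TicTacToeEnv`, `is_legal`, and `make_move` are hypothetical and not from any OpenAI eval): checking legality is a side-effect-free query the model may call as often as it likes, while committing a move is final, and an illegal committed move forfeits the game.

```python
from dataclasses import dataclass, field


@dataclass
class TicTacToeEnv:
    """Toy environment; every name here is hypothetical, for illustration only."""
    board: list = field(default_factory=lambda: [" "] * 9)
    forfeited: bool = False

    def is_legal(self, cell: int) -> bool:
        """Side-effect-free tool call: the model may ask before committing."""
        return 0 <= cell < 9 and self.board[cell] == " "

    def make_move(self, cell: int, mark: str) -> bool:
        """Committing is final: an illegal move forfeits the game, with no retry."""
        if self.forfeited:
            raise RuntimeError("game already forfeited")
        if not self.is_legal(cell):
            self.forfeited = True
            return False
        self.board[cell] = mark
        return True


env = TicTacToeEnv()
env.make_move(4, "X")      # legal move, board is updated
print(env.is_legal(4))     # asking about a taken cell changes nothing
print(env.forfeited)       # still in the game after merely asking
env.make_move(4, "O")      # committing the illegal move ends the game
print(env.forfeited)
```

The design choice is that there is no "just kidding" path: the environment never lets the model observe a failure and then retry the same turn.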

swyx [00:16:40]: Some people have tried to classify that second type of playing things out as test time compute. You would not classify that as test time compute.

Noam [00:16:48]: There's a lot of reasons why you would not want to rely on that paradigm. Imagine you have a robot, and your robot takes some action in the world and it breaks something. You can't say, oh, just kidding, I didn't mean to do that, I'm going to undo that action. The thing is broken. So if you want to simulate what would happen if I move the robot in this way, and then in the simulation you see that this thing broke and you decide not to do that action, that's totally fine. But you can't just undo actions that you've taken in the world.
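
Editor's note: a minimal sketch of the "simulate first, then act" pattern described above, assuming a toy world with hypothetical dynamics (`step`, `choose_action`, and the action names are made up for illustration). Candidate actions are tried on a deep copy of the state, so a bad outcome in the simulation never touches the real world.

```python
import copy


def step(world: dict, action: str) -> dict:
    """Hypothetical toy dynamics: pushing the vase breaks it, permanently."""
    if action == "push_vase":
        world["vase_intact"] = False
    elif action == "go_around":
        world["position"] += 1
    return world


def choose_action(world: dict, candidates: list) -> str:
    """Try each candidate on a copy of the world; return the first that breaks nothing."""
    for action in candidates:
        imagined = step(copy.deepcopy(world), action)
        if imagined["vase_intact"]:
            return action
    raise ValueError("no safe action found")


real_world = {"position": 0, "vase_intact": True}
action = choose_action(real_world, ["push_vase", "go_around"])
step(real_world, action)  # only this call touches the real world
print(action, real_world)
```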

swyx [00:17:14]: There's a couple more things I wanted to cover in this rough area. I actually had an answer on the thinking fast and slow side, which maybe I'm curious what you think about. A lot of people are trying to put in effectively model router layers, let's say between the fast response model and the long thinking model. Anthropic is explicitly doing that. And I think there's always a question about, do you need a smart judge to route, or do you need a dumb judge to route because it's fast? So when you have a model router, let's say you're passing requests between the System 1 side and the System 2 side, does the router need to be as smart as the smart model or...

Noam [00:17:51]: I think it's possible for a dumb model to recognize that a problem is really hard and that it won't be able to solve it and then route it to a more capable model.

swyx [00:18:01]: But it's also possible for a dumb model to be fooled or to be overconfident.
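
Editor's note: the router being discussed can be sketched as below. This is an assumption-laden illustration, not any lab's actual design: `route`, `estimate_difficulty`, the threshold, and the stand-in models are all hypothetical. A cheap judge scores how hard a prompt looks, and anything at or above the threshold goes to the slower reasoning model.

```python
from typing import Callable


def route(prompt: str,
          estimate_difficulty: Callable[[str], float],
          fast_model: Callable[[str], str],
          reasoning_model: Callable[[str], str],
          threshold: float = 0.5) -> str:
    """Send the prompt to the reasoning model when the cheap judge scores it as hard."""
    if estimate_difficulty(prompt) >= threshold:
        return reasoning_model(prompt)
    return fast_model(prompt)


# Stand-ins so the sketch runs; a real judge would itself be a small model.
def toy_judge(prompt: str) -> float:
    return 0.9 if "prove" in prompt.lower() else 0.1


def fast(prompt: str) -> str:
    return f"fast: {prompt}"


def slow(prompt: str) -> str:
    return f"reasoning: {prompt}"


print(route("What is the capital of France?", toy_judge, fast, slow))
print(route("Prove that sqrt(2) is irrational.", toy_judge, fast, slow))
```

The trade-off swyx raises lives entirely in `estimate_difficulty`: a judge that underrates a hard prompt silently sends it to the fast model, and lowering the threshold to compensate pushes more easy traffic to the expensive one.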

Noam [00:18:05]: I don't know. I think there's a real trade-off there, but I will say, I think there are a lot of things that people are building right now that will eventually be washed away by scale. So I think harnesses are a good example where I think eventually the models are going to be... And I think this actually happened with the reasoning models. Before the reasoning models emerged, there was all of this work that went into engineering these agentic systems that made a lot of calls to GPT-4o or these non-reasoning models to get reasoning behavior. And then it turns out, oh, we just created reasoning models and you don't need this complex behavior. In fact, in many ways, it makes it worse. You just give the reasoning model the same question without any sort of scaffolding and it just does it. Now, people are still building scaffolding on top of the reasoning models right now. But I think in many ways, those scaffolds will also just be replaced by the reasoning models and models in general becoming more capable. And similarly, I think things like these routers, you know, we've said pretty openly that we want to move to a world where there is a single unified model. And in that world, you shouldn't need a router on top of the model. So I think that the router issue will eventually be solved also.

swyx [00:19:18]: Like you're building the router into the model weights itself.

Noam [00:19:22]: I don't think there will be a benefit for... I shouldn't say it, because I could be wrong about this. You know, certainly maybe there are reasons to route to different model providers or whatever. But I think that routers are going to eventually go away. And I can understand why it's worth doing it in the short term, because the fact is, it is beneficial right now. And if you're building a product and you're getting a lift from it, then it's worth doing right now. One of the tricky things I'd imagine that a lot of developers are facing is that you kind of have to plan for where these models are going to be in six months and 12 months. And that's very hard to do because things are progressing very quickly. You don't want to spend six months building something and then just have it be totally washed away by scale. But I would encourage developers, when they're building these kinds of things like scaffolds and routers, to keep in mind that the field is evolving very rapidly. Things are going to change in three months, let alone six months. And that might require radically changing these things around or tossing them out completely. So don't spend six months building something that might get tossed out in six months.

swyx [00:20:29]: It's so hard though. Everyone says this, and then like, no one has concrete suggestions on how.

Alessio [00:20:36]: What about reinforcement fine-tuning? Obviously you just released it a month ago at OpenAI. Is this something people should spend time on right now, or maybe wait until the next jump?

Noam [00:20:46]: I think reinforcement fine-tuning is pretty cool. And I think it's something that's worth looking into for developers, because it's really about specializing the models for the data that you have. We're not suddenly going to have that data baked into the raw model a lot of times. So I think that's kind of a separate question. Yeah.

Alessio [00:21:11]: So creating the environment and the reward model is the best thing people can do right now. I think the question that people have is, should I rush to fine-tune the model using RFT, or should I build the harness to then RFT the models as they get better?

Noam [00:21:25]: I think the difference is that for reinforcement fine-tuning, you're collecting data that's going to be useful as the models improve as well. So if we come out with future models that are even more capable, you could still fine-tune them on your data. That's, I think, actually a good example where you're building something that's going to complement the models scaling and becoming more capable, rather than necessarily getting washed away by scale.
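
Editor's note: the sketch below illustrates only the reusable part of what Noam describes, namely task data plus a grader that do not depend on any particular model. It does not call or imitate any real fine-tuning API; `DATASET`, `grade`, `mean_reward`, and the stand-in models are hypothetical names chosen for illustration.

```python
from typing import Callable

# Hypothetical task data: prompts paired with reference answers you own.
DATASET = [
    {"prompt": "2 + 2", "reference": "4"},
    {"prompt": "3 * 5", "reference": "15"},
]


def grade(output: str, reference: str) -> float:
    """Reward in [0, 1]; exact match here, but any scoring rule could be used."""
    return 1.0 if output.strip() == reference else 0.0


def mean_reward(model: Callable[[str], str]) -> float:
    """Score any model (a callable from prompt to text) on the same data."""
    total = sum(grade(model(ex["prompt"]), ex["reference"]) for ex in DATASET)
    return total / len(DATASET)


# Two stand-in "models"; the data and grader are unchanged between them.
def older_model(prompt: str) -> str:
    return "4"


def newer_model(prompt: str) -> str:
    return {"2 + 2": "4", "3 * 5": "15"}[prompt]


print(mean_reward(older_model))  # 0.5
print(mean_reward(newer_model))  # 1.0
```

Because `mean_reward` takes the model as a parameter, the same examples and grader can be pointed at whichever base model is current, which is the sense in which this investment survives model upgrades.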

swyx [00:21:50]: One last question on Ilya, you mentioned on, I think the Sarah and Elad podcast, where you had this conversation with Ilya a few years ago about more RL and reasoning and language models, just any speculation or thoughts on why his attempt, when he tried it, it didn't work or the timing wasn't right and why the time is right now.

Noam [00:22:14]: I don't think I would frame it that way, that his attempt didn't work. In many ways, it did. So for me, I saw that in all of these domains that I'd worked on, poker and Hanabi and Diplomacy, having the models think before acting made a huge difference in performance, like orders of magnitude difference. Like 10,000 times. Yeah. Like, you know, a thousand to a hundred thousand times. It's the equivalent of a model that's a thousand to a hundred thousand times bigger. And in language models, you weren't really seeing that. The models would just respond instantly. Some people in the LLM field were convinced that, okay, we just keep scaling pre-training and we're going to get to superintelligence. And I was kind of skeptical of that perspective. In late 2021, I was having a meal with Ilya. He asked me what my AGI timelines are, a very standard SF question. And I told him, look, I think it's actually quite far away because we're going to need to figure out this reasoning paradigm in a very general way. LLMs are very general, but they don't have a reasoning paradigm that's very general. And until they do, they're going to be limited in what they can do. Sure, we're going to scale these things up by a few more orders of magnitude. They're going to become more capable, but we're not going to see superintelligence from just that. And yes, if we had a quadrillion dollars to train these models, then maybe we would, but you're going to hit the limits of what's economically feasible before you get to superintelligence, unless you have a reasoning paradigm. And I was convinced, incorrectly, that the reasoning paradigm would take a long time to figure out because it's this big unanswered research question.
And, you know, Ilya agreed with me and he said, yeah, I think we need this additional paradigm, but his take was that maybe it's not that hard. I didn't know it at the time, but he and others at OpenAI had also been thinking about this. They'd also been thinking about RL, they'd been working on it, and I think they had some success. But, as with most research, you have to iterate on things. You have to try out different ideas. And then also as the models become more capable, as they become faster, it becomes easier to iterate on experiments. And I think that the work that they did, even though it didn't result in a reasoning paradigm, it all builds on top of previous work. Right. So they built a lot of things that over time led to this reasoning paradigm.

swyx [00:24:31]: For listeners, no one can talk about this, but the rumor is that that thing was codenamed GPT zero, if you want to search for that line of work. I think there was a time where basically RL kind of went through a dark age, when everyone went all in on it and then nothing happened and they gave up. And now it's sort of the golden age again. So that's what I'm trying to identify. Like, why? What is it? And it could just be that we have smarter base models and better data.

Noam [00:24:57]: I don't think it's just that we have smarter base models. I think it's that... Yeah. So we did end up getting a big success with reasoning, but I think it was in many ways a gradual thing. To some extent it was gradual, you know, there were signs of life. And then we iterated and tried out some more things. We got better signs of life. I think it was around November 2023 or October 2023 when I was convinced that we had very conclusive signs of life, that, oh, this is the paradigm and it's going to be a big deal. That was in many ways a gradual thing. I think what OpenAI did well is that when we got those signs of life, they recognized it for what it was and invested heavily in scaling it up. And I think that's ultimately what led to reasoning models arriving when they did.

Alessio [00:25:45]: Was there any disagreement internally, especially because like, you know, OpenAI constantly.

Noam [00:25:50]: I think that's also to the credit of OpenAI that, okay, yes, they figured out the paradigm that was needed, and that was why they were investing all this research effort into this RL stuff. And they were very focused on scaling that up. In fact, the vast majority of resources were focused on scaling that up, but they also recognized that something else was going to be needed. And it was worth putting researcher effort into other directions to figure out what that extra paradigm was going to be. There was a lot of debate about, first of all, what is that extra paradigm? For a lot of the researchers who looked at reasoning and RL, it was not really about scaling test-time compute. It was more about data efficiency, because the feeling was that, well, we have tons and tons of compute, but we actually are more limited by data. So there's the data wall and we're going to hit that before we hit limits on the compute. So how do we make these algorithms more data efficient? They are more data efficient, but I think they are also just the equivalent of scaling up compute by a ton. That was interesting. There was a lot of debate around, okay, well, what exactly are we doing here? And then I think also, even when we got the signs of life, there was a lot of debate about the significance of it. Like, okay, how much should we invest in scaling up this paradigm? Especially when you're in a small company. You know, OpenAI in 2023 was not as big as it is today. And compute was more constrained than it is today. And if you're investing resources in a direction, that's coming at the expense of something else.
And so if you look at these signs of life on reasoning and you're saying, okay, well, this looks promising, we're going to scale this up by a ton and invest a lot more resources into it, where are those resources coming from? You have to make that tough call about where to draw the resources from. And that is a very controversial, very difficult call to make that makes some people unhappy. And I think there was debate about whether we're focusing too much on this paradigm, whether it's really a big deal, whether we would see it generalize and do various things. And I remember it was interesting that I talked to somebody who left OpenAI after we had discovered the reasoning paradigm, but before we announced o1, and they ended up going to a competing lab. I saw them afterwards, after we announced it. And they told me that at the time, they really didn't think this reasoning thing, these o-series, the Strawberry models, were that big of a deal. They thought we were making a bigger deal of it than it really deserved to be. And then when we announced o1 and they saw the reaction of their coworkers at this competing lab, about how everybody was like, oh crap, this is a big deal, and they pivoted the whole research agenda to focus on this, then they realized, oh, actually this maybe is a big deal. You know, a lot of this seems obvious in retrospect, but at the time it's actually not so obvious and it can be quite difficult to recognize something for what it is.

Alessio [00:29:00]: I mean, OpenAI has a great history of just making the right bet. I feel like the GPT models are kind of similar, right? Where it started with games and RL, and then it's like, maybe we can just scale these language models instead. And I'm just impressed by the leadership and obviously the research team that keeps coming up with these insights.

Noam [00:29:19]: Looking back on it today, it might seem obvious that like, oh, of course, like these models get better with scale. So you should just scale them up a ton and it'll get better, but it really is, the best research is obvious in retrospect. And at the time it's not as obvious as it might seem today.

swyx [00:29:35]: Follow-up questions on data efficiency. This is a pet topic of mine. It seems that our current methods of learning are so inefficient still, right? Like compared to the existence proof of humans, we take five samples and we learn something. Machines, 200, maybe, you know, per whatever data point you might need. Anyone doing anything interesting in data efficiency? Or do you think there's just a fundamental inefficiency that machine learning has that will just always be there compared to humans?

Noam [00:30:05]: I think it's a good point that if you look at the amount of data these models are trained on and you compare it to the amount of data that a human observes to get the same performance. I guess with pre-training, it's a little hard to make an apples-to-apples comparison because, I don't know, how many tokens does a baby actually absorb when they're developing? But I think it's a fair statement to say that these models are less data efficient than humans. And I think that that's an unsolved research question and probably one of the most important unsolved research questions.

swyx [00:30:32]: Maybe more important than algorithmic improvements, because you can just, we can increase the supply of data out of the existing set of the world and humans.

Noam [00:30:42]: I guess that's good. So a couple of thoughts on that. One is that the answer might be an algorithmic improvement. Like maybe algorithmic improvements do lead to greater efficiency. And the second thing is that it's not like humans learn from just reading the internet. So I think it's certainly easiest to learn from just data that's on the internet, but I don't think that's the limit of what data you could collect.

swyx [00:31:05]: The last follow up before we change topics to coding, any other just anecdotes or insights from Ilya, just in general? Because like you've worked with him, so there's not that many people that we can talk to that have worked with him.

Noam [00:31:17]: I think I've just been very impressed with his vision. Especially when I joined and I saw the internal documents at OpenAI of what he had been thinking about back in 2021, 2022, even earlier, I was very impressed that he had a clear vision of where this was all going and what was needed.

swyx [00:31:36]: Some of his emails from 2016, 17, when they were founding OpenAI, were published. And even then he was talking about how one big experiment is much more valuable than 100 small ones. That was like a core insight that differentiated them from Brain, for example. It just seems very insightful that he just sees things much more clearly than others. And I just wonder what his production function is. Like, how do you make a human like that? And how do you improve your own thinking to better model it?

Noam [00:32:04]: I mean, I think it is true that one of OpenAI's big successes was betting on the scaling paradigm. It is just kind of odd because, you know, they were not the biggest lab. It was difficult for them to scale. Back then, it was much more common to do a lot of small experiments, more academic style. People were trying to figure out these various algorithmic improvements, and OpenAI bet pretty early on large scale.

swyx [00:32:27]: We had David Luan on, who I think was VP Eng at the time of GPT-1 and 2. And he talked about how the difference between Brain and OpenAI was basically the cause of Google's inability to come out with a scaled model. Like just structurally, everyone had allocated compute and you had to pool resources together to make bets and you just couldn't.

Noam [00:32:47]: I think that's true. I think OpenAI was structured differently and I think that really helped them. OpenAI functions a lot like a startup, and other places tended to function more like universities or, you know, research labs as they traditionally existed. The way that OpenAI operates, more like a startup with this mission of building AGI and superintelligence, helped them organize, collaborate, pool resources together, and make hard choices about how to allocate resources. And I think a lot of the other labs have now seen that it's very effective and are trying to adopt setups more like that.

Alessio [00:33:22]: Let's talk about maybe the killer use case, at least in my mind, of these models, which is coding. You released Codex recently, but I would love to talk through the Noam Brown coding stack. What models you use, how you interact with them. Cursor, Windsurf.

Noam [00:33:35]: Lately, I've been using Windsurf and Codex. Actually a lot of Codex. I've been having a lot of fun. You just give it a task and it just goes off and does it and comes back five minutes later with, you know, a pull request.

swyx [00:33:47]: And is it core research tasks? Or like side stuff that you don't do usually?

Noam [00:33:49]: I wouldn't say it's like side stuff. I would say basically anything that I would normally try to code up, I try to do it with Codex first.

swyx [00:34:02]: Well, for you, it's free, but yeah, for everybody, it's free right now.

Noam [00:34:04]: And I think that's partly because it's the most effective way for me to do it. And also, it's good for me to get experience working with this technology and then also seeing the shortcomings of it. It just helps me better understand, okay, this is the limits of these models and what we need to push on next.

swyx [00:34:20]: Have you felt the AGI?

Noam [00:34:21]: I've felt the AGI multiple times, yes.

swyx [00:34:25]: How should people push Codex in ways that you've done? And I think you see it before others because obviously you were closer to it.

Noam [00:34:33]: I think anybody can use Codex and feel the AGI. It's kind of funny how you feel the AGI and then you get used to it very quickly.

swyx [00:34:43]: So it's really like... I'm dissatisfied with where it's lacking.

Noam [00:34:46]: Yeah, I know. It's magical. I was actually looking back at the old Sora videos when they were announced. Because remember when Sora came out, it was just magical. You look at that and you're like, it's really here. This is AGI. But if you look at it now, it's kind of like, oh, the people don't move very organically and there's a lack of consistency in some ways. And you see all these flaws in it now that you just didn't really notice when it first came out. And yeah, you get used to this technology very quickly. But I think what's cool about it is that because it's developing so quickly, you get those feel the AGI moments like every few months. So something else is going to come out and just like, it's magical to you. And then you get used to it very quickly.

swyx [00:35:28]: What are your Windsurf pro tips now that you've immersed in it?

Noam [00:35:33]: I think one thing I'm surprised by is how few people... I mean, maybe your audience is going to be more comfortable with reasoning models and use reasoning models more. But I'm surprised at how many people don't even know that o3 exists. Like, I've been using it day to day. It's basically replaced Google search for me. Like, I just use it all the time. And also for things like coding, I tend to just use the reasoning models. My suggestion is for people who have not tried the reasoning models yet, because honestly, people love them. People that use them love them. Obviously, a lot more people use GPT-4o and just the default on ChatGPT and that kind of stuff. I think it's worth trying the reasoning models. I think people would be surprised what they can do.

swyx [00:36:15]: I use Windsurf daily and they still haven't actually enabled it as like a default in Windsurf. Like, I always have to dig up, like, type in o3 and then it's like, oh, yeah, that exists. It's weird. I would say, like, my struggle with it has been that it takes so long to reason that I actually break out of flow.

Noam [00:36:34]: I think that is true. Yes. And I think this is one of the advantages of Codex that, like, OK, you can give it a task that's kind of self-contained and like it can go off and do its thing and come back 10 minutes later. And I can see that if you're using this thing more like a pair programmer kind of thing, then, yeah, you want to use GPT-4.1 or something like that.

Alessio [00:36:51]: What do you think is the most broken part of the development cycle with AI? Like, in my mind, it's like pull request review. Like for me, like I use Codex all the time and then I get all these pull requests and it's kind of hard to, like, go through all of them. What other thing would you like people to build to make this even more scalable?

Noam [00:37:09]: I think it's really on us to build a lot more stuff. These models are very limited in some ways. I think I find it frustrating that, you know, you ask them to do something and they spend 10 minutes doing it and then you ask them to do something pretty similar and then they go spend 10 minutes doing it. And like, you know, I think I describe them as like they're geniuses, but it's their first day on the job, you know, and that's like kind of annoying. Like even the smartest person on earth, when it's their first day on the job, you know, they're not going to be like as useful as you would like them to be. So I think being able to get more experience and like act like somebody that's actually been on the job for like six months instead of one day, I think would make them a lot more useful. But that's really on us to build that capability.

Alessio [00:37:51]: Do you think a lot of it is like GPU constraint for you? Like if I think about Codex, why is it asking me to set up the environment myself when like the model, if I ask o3 to like create an environment setup script for a repo, I'm sure it'll be able to do it. But today in the product, I have to do it. So I'm wondering in your mind, could these be a lot more if we just, again, put more test time compute on them? Or do you think there's like a fundamental model capability limitation today that we still need a lot of like human harnesses around it?

Noam [00:38:19]: I think that we're in an awkward state right now where like progress is very fast and there's things that are like, clearly we could do this in the models better. We're going to get to it. It's just, you're limited by how many hours there are in the day, you know, so progress can only proceed so quickly. We're trying to get to everything as fast as we can. And I think that o3 is not where the technology will be in six months.

swyx [00:38:41]: I like that question overall. And like, there's a software development lifecycle, not just generation of the code, like from issue to PR basically is like the typical commentary of that. And then there's the Windsurf side, which is inside your IDE, like what else, right? Pull request review is like something that people don't really... there are startups that are built around it. It's not something that Codex does and it could. And so like, then there's like, what else is there, you know, that is sort of rate limiting the amount of software you could be iterating on. It's an open question. I don't, I don't, I don't know if there's an answer. Anything else on it? Yeah. As we, in general, like, where do you think this goes just in form factors or what will we be looking at this time next year in terms of how things are, how, what models are able to do that they're not able to today?

Noam [00:39:29]: I don't think it's gonna be limited to SWE. You know, I think, I don't think it's going to be limited to software engineering. I think it's going to be able to do a lot of remote work kind of tasks. Yeah.

swyx [00:39:37]: Like freelancer type of work. Yeah.

Noam [00:39:40]: Or just like even things that are not necessarily software engineering. Okay, so the way that I think about it is like anybody that's doing a remote work kind of job, I think it's valuable to become familiar with the technology and like kind of get a sense of like what it can do, what it can't do, what it's good at, what it's not good at, because I think the breadth of things that it's going to be able to do is going to expand over time as well.

swyx [00:39:59]: I feel like virtual assistants might be the next thing after SWE then, because they're the most easily, like, you know, virtual assistants, like hire someone in the Philippines, someone who just looks through your email and all that, because that is entirely, you can intercept all the inputs and all the outputs and train on that. And maybe OpenAI just buys a virtual assistant company.

Noam [00:40:20]: Yeah. I think what I'm looking forward to is that for things like virtual assistants, the models, like if they're aligned well, they could end up being like really preferable for that kind of work. You know, there's always this like principal-agent problem where if you delegate a task to somebody, then like, are they really aligned with like doing it as you would want it to be done?

swyx [00:40:40]: And just as cheaply, as quickly as they can. Yeah, yeah.

Noam [00:40:44]: And so if you have an AI model that's like actually really aligned to you and your preferences, then that could end up doing a way better job than a human could. Well, not that it's doing a better job than a human could, but like it's doing a better job than a human would.

swyx [00:40:56]: That word alignment, by the way, I think there's like an interesting overriding or homomorphism between safety alignment and instruction following alignment. And I wonder where they diverge.

Noam [00:41:09]: Okay, so I think where it diverges is like, what do you want to align the models to? Like, that's, I think, a difficult question, you know? Like, you could say like you want to align it to the user. Okay, well, what happens if the user wants to build a novel virus that's going to wipe out half of humanity? That's safety alignment, yeah. So there's a question of like, I think alignment, I think they're related, you know? And I think the big question is like, what are you aligning towards?

swyx [00:41:30]: Yeah, there's like humanity goals, and then there's your personal goals, and everything in between.

Alessio [00:41:35]: So that's kind of, I guess, the individual agent. And you announced that you're leading the multi-agent team at OpenAI. I haven't really seen many announcements, maybe I missed them, on what you've been working on. But what can you share about interesting research directions or anything from there?

Noam [00:41:51]: Yeah, there haven't really been announcements on this. I think we're working on cool stuff, and I think we'll get to announce some cool stuff at some point. I think the team name, in many ways, is actually a misnomer, because we're working on more than just multi-agent. Multi-agent is one of the things we're working on. Some other things we're working on is just like being able to scale up test time compute by a ton. So how, you know, we get these models thinking for 15 minutes now. How do we get them to think for hours? How do we get them to think for days, even longer? And be able to solve incredibly difficult problems. So that's one direction that we're pursuing. Multi-agent is another direction. And here, I think there's a few different motivations. We're interested in both the collaborative and the competitive aspect of multi-agent. I think the way that I describe it is people often say in AI circles that humans occupy this very narrow band of intelligence. And AIs are just going to, like, quickly catch up and then surpass, like, this band of intelligence. And I actually don't think that the band of human intelligence is that narrow. I think it's actually quite broad. Because if you compare anatomically identical humans from, you know, caveman times, they didn't get that far in terms of, like, you know, what we would consider intelligence today, right? Like, they're not putting a man on the moon, you know, they're not, like, building semiconductors or nuclear reactors or anything like that. And we have those today, even though we as humans are not anatomically different. And so, what's the difference? Well, I think the difference is that you have thousands of years, a lot of humans, billions of humans, cooperating and competing with each other, building up civilization over time. The technology that we're seeing is the product of this civilization. And I think similarly, the AIs that we have today are kind of like the cavemen of AI.
And I think that if you're able to have them cooperate and compete with billions of AIs over a long period of time and build up a civilization, essentially, the things that they would be able to produce and answer would be far beyond what is possible today with the AIs that we have today.

Alessio [00:43:56]: Do you see that being similar to maybe, like, Jim Fan's Voyager skill library idea, resaving these things? Or is it just the models then being retrained on this new knowledge? Because the humans then have a lot of it in the brain as they grow.

Noam [00:44:10]: I think I'm going to be evasive here and say that, like... We're not going to... Yeah, we're not going to... Until we have something to announce, which I think that we will in the not-too-distant future. I think I'm going to be a bit vague about, like, exactly what we're doing. But I will say that the way that we are approaching multi-agent in the details and the way we're actually going about it is, I think, very different from how it's been done historically and how it's being done today by other places. I've been in the multi-agent field for a long time. I've kind of felt like the multi-agent field has... It's been a bit misguided in some ways in the approaches that the field has taken and, like, the way that it's been approached. And so I think we're trying to take a very principled approach to multi-agent.

swyx [00:44:52]: Sorry, I got to add, like... So you can't talk about what you're doing, but you can say what's misguided. What's misguided?

Noam [00:44:57]: I think that a lot of the approaches that have been taken have been very heuristic and haven't really been following, like, the bitter lesson approach to scaling and research.

Alessio [00:45:07]: Okay. I think maybe this might be a good spot. So, obviously, you've done a lot of amazing work in poker, and I think as the reasoning models got better, I was talking to one of my friends who used to be a hardcore poker grinder, and I told them I was going to interview you. And their question was, at the table, you can get a lot of information from a small sample size about how a person plays. But today, GTO is, like, so prevalent that sometimes people forget that you can play exploitatively. What do you think is the state? As you think about multi-agent and kind of, like, competition, is it always going to be trying to find the optimal thing? Or is a lot of it trying to think more in the moment, like, how to exploit somebody?