It's Time to Science

Why the time is right to start the world's first dedicated AI for Science podcast, and why AI Engineers should care

Can you believe there are no dedicated AI for Science podcasts in the world?[1] Today, we are fixing that by launching our first Science pod with new hosts!

The gap between the intense interest from insiders and the relative disinterest/ignorance from the masses is the widest I have observed since I called out the Rise of the AI Engineer in 2023. Here are just the most obvious points of what we’re seeing:

Biohub’s bet is that biology advances fastest when it looks more like a software team than a grant pipeline, with software engineers, AI researchers, and biologists co-building models, tools, and datasets rather than throwing results over the wall in silos. This obviously comes from the top, with their co-CEOs being Mark Zuckerberg (engineer) and Priscilla Chan (doctor/scientist). As Mark says, this pairing of “frontier AI lab” and “frontier biology lab” will unlock a lot more advances in fundamental biology than would otherwise be possible, enabling us to model humans in silico and treat diseases as “rare as one”.

“2026 in AI for Science will look a lot like 2025 in AI for Software Engineering” — Kevin Weil, OpenAI’s new VP for Science, said yesterday. Both OpenAI and Anthropic have spun up dedicated AI for Science divisions in the past 4 months, pursuing both product and research. DeepMind of course has the longest history in Science among the frontier labs.

In the last 6 months alone, Periodic Labs ($300M seed, upcoming guest!) is building an autonomous, AI-driven “science factory” for materials-led discovery. Lila Sciences ($350M A) is combining frontier models with robotic labs that generate experimental data across biology, chemistry, and materials science. CuspAI ($100M A, upcoming guest!) is building foundation models that search for novel compounds and industrial materials. Excelsior Sciences ($95M) is building AI-guided experimentation that drastically shortens drug-optimization cycles. Chai Discovery (~$70M) is working on antibody and drug discovery, while Harmonic ($120M C), Axiom ($64M seed) and Math Inc are building math-native AI systems focused on formal reasoning and verifiable proofs.

Sam Altman and Jakub Pachocki have publicly committed to delivering an “Automated AI Research Intern” by September THIS YEAR (“A system that can meaningfully accelerate human researchers, not just chat or code.” OpenAI Prism is the starting point), and a “meaningful, Fully Automated AI Researcher” by 2028 (“A system capable of autonomously delivering on larger research projects, meaning it could formulate hypotheses, plan experiments, run them, analyze results, and draw conclusions without human hand-holding.”).

As a rule, we try to stay grounded at Latent Space, and veer away from discussing AGI timelines and Superintelligence. But it is hard to deny that by 2100, we will likely look back and see applying AI engineering to the hard sciences as one of the most important missions to pursue this century. The stakes for humanity are high across chemistry, materials science, high performance computing/chip design, the biology/pharmaceuticals complex, and even physics, mathematics, and climate science, not to mention AI research accelerating itself. And of course it will be both financially rewarding and impactful.

None of this is new. Pushing scientific frontiers has always been important, and yet, to paraphrase Jeff Hammerbacher, the best human minds and LLMs of our generation continue to be directed towards astroturfing Reddit, promoting a fork of a fork of a VSCode extension, and generally serving slop.

That’s fine, and slop is a natural part of a well-rounded diet, but we see very rewarding opportunities to raise the aspirations of AI Engineers and show them why the general skillset of AI and ML Engineering can actually transfer across to the hard sciences, even if the sum total of your biology knowledge is “the Mitochondria is the powerhouse of the cell”:

Standard Knowledge Worker Benchmarks are saturated. We’re tired of every AI lab taking turns incrementing SWE-Bench Verified or MMLU by 0.1% and claiming SOTA. We know that GPT 5.2 Pro can do ~70% of expert-level white collar work. The remaining “final frontier” to hillclimb is on hard sciences like FrontierMath and HLE, our last gasp before discovering new science altogether.

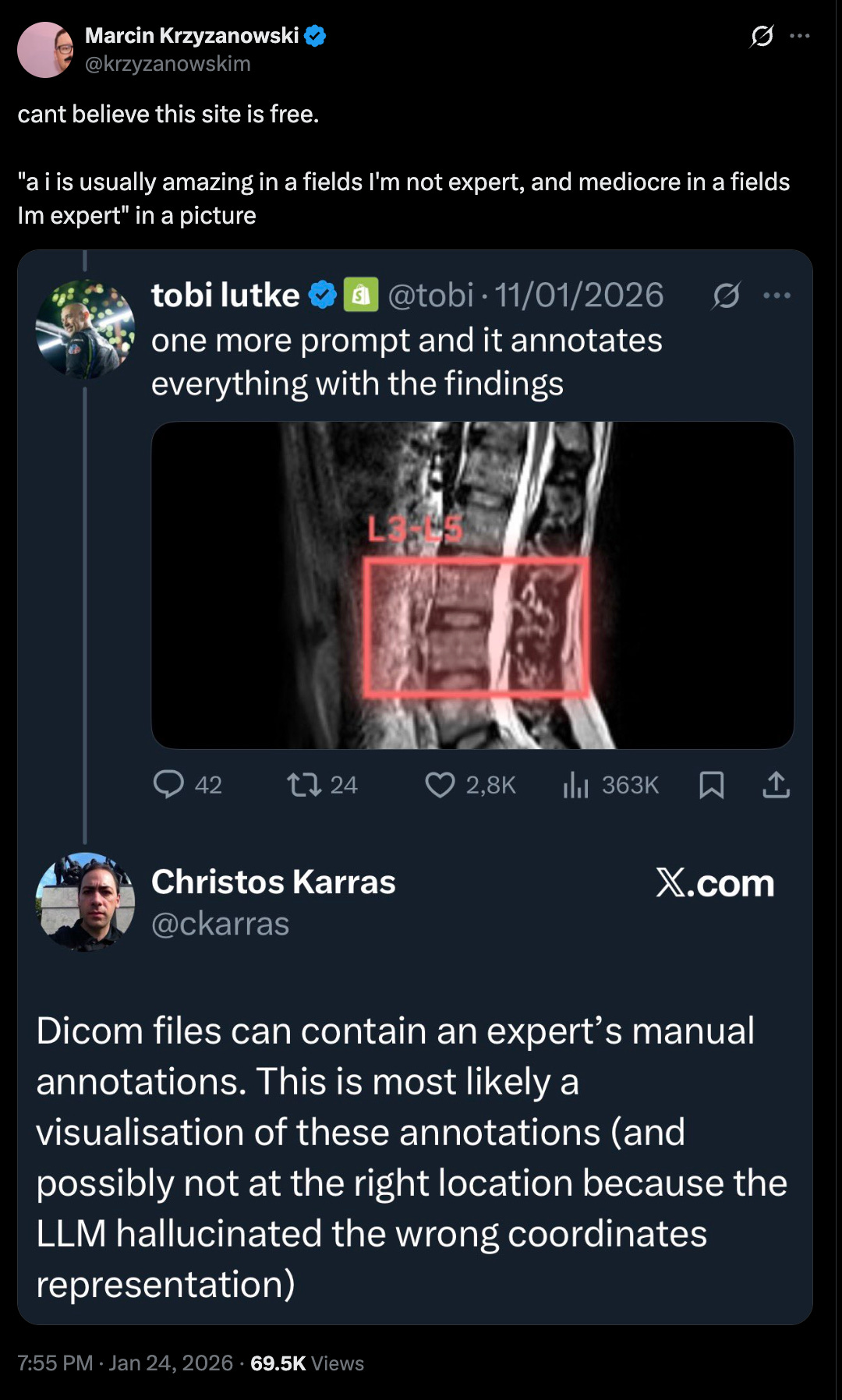

Hard Domains are getting Bitter Lessoned: Everything is converging on transformers and multimodal foundation models. Even non-mathematicians can get IMO Gold medals because generalist ML > domain expertise.

AI solves JIT Self Education: Bloom’s 2 Sigma Problem means that LLMs can help you quickly ramp up on at least baseline common knowledge[2] and teach yourself what you need to know to interface with domain experts and enable them to do with your skillset what they can’t do on their own.

What a time to be alive: Even if you are perfectly content in your B2B SaaS or Koding Agent job, it is still an incredible privilege to be alive while all this other progress is ongoing in adjacent fields. We’ll tell these stories, our way.

Pursuant to the last point, we think general consumer AI media, or even technical AI media, is woefully underequipped to do good science communication around “AI for Science for Engineers”.

Today, we’re embarking on that journey with Latent Space’s new AI for Science podcast. We’re nervous about it too, but we think this mission is too important, and the gap between the obvious potential and the lack of supply too wide, to wait any longer. We’d love your support and feedback as we start this new adventure.

As a final word, thank you to RJ Honicky (Miraomics) and Brandon Anderson (Atomic) for stepping up to host the new pod. Alessio and I are devtools guys; we could not start AI for Science coverage without actual PhDs and Science founders!

[1] Proof: there’s an “ML4Sci” podcast and an “ai4.science” event series, a domain we wanted to buy, but no podcast that proudly self-identifies as simply “the AI for Science podcast”.

[2] But beware GeLLMann Amnesia at the edges of expertise!
