SF folks: join us at the AI Engineer Foundation’s Emergency Hackathon tomorrow and consider the Newton if you’d like to cowork in the heart of the Cerebral Arena.

Our community page is up to date as usual!

~800,000 developers watched OpenAI Dev Day, ~8,000 of whom listened along live on our ThursdAI x Latent Space, and ~800 of whom got tickets to attend in person:

OpenAI’s first developer conference easily surpassed most people’s lowballed expectations - they simply did everything short of announcing GPT-5, including:

ChatGPT (the consumer facing product)

GPT4 Turbo already in ChatGPT (running faster, with an April 2023 cutoff), all noticed by users weeks before the conference

Model picker eliminated, God Model chooses for you

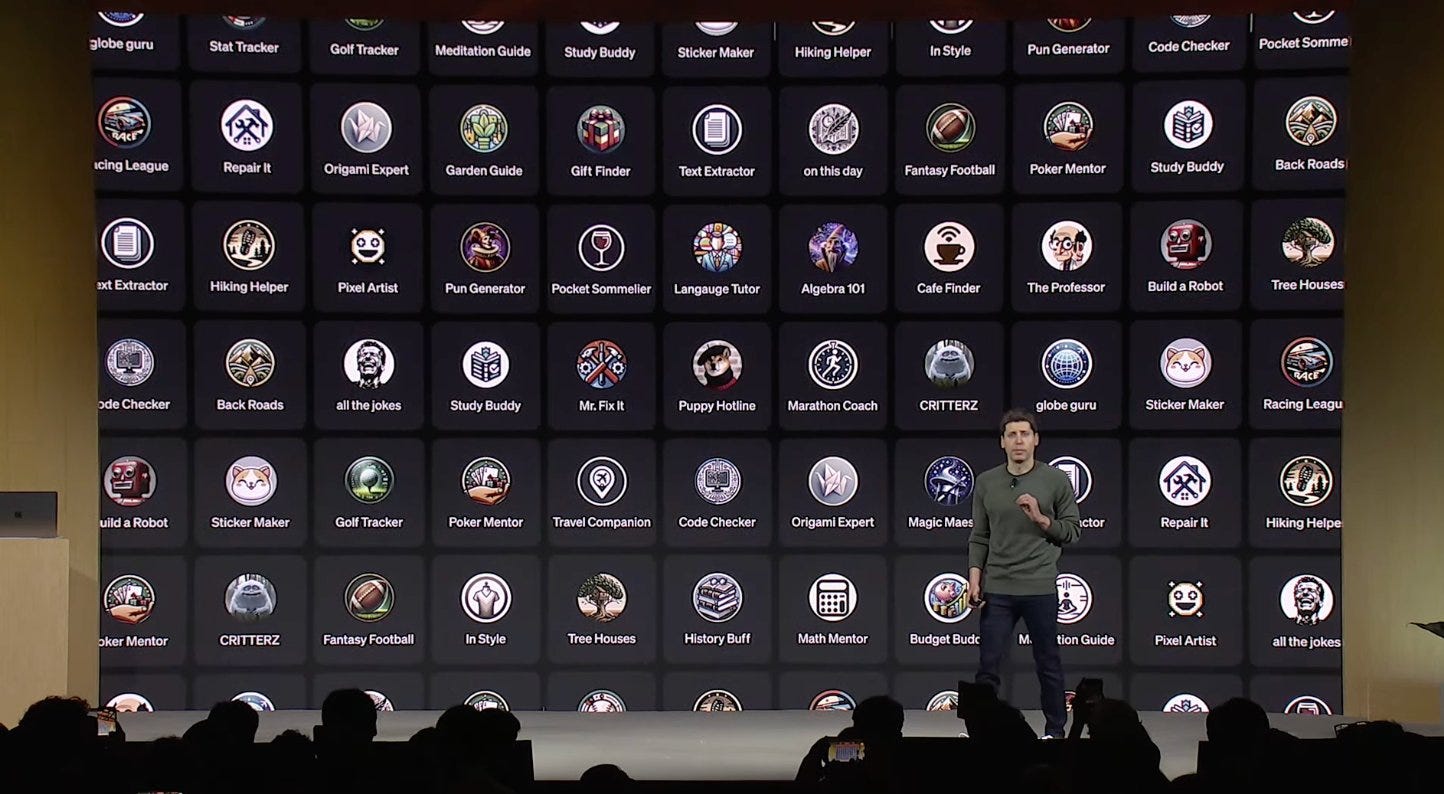

GPTs - “tailored version of ChatGPT for a specific purpose” - stopping short of “Agents”. With custom instructions, expanded knowledge, and actions, and an intuitive no-code GPT Builder UI (we tried all these on our livestream yesterday and found some issues, but also were able to ship interesting GPTs very quickly) and a GPT store with revenue sharing (an important criticism we focused on in our episode on ChatGPT Plugins)

API (the developer facing product)

APIs for Dall-E 3, GPT4 Vision, Code Interpreter (RIP Advanced Data Analysis), GPT4 Finetuning and (surprise!) Text to Speech

many thought each of these would take much longer to arrive

usable in curl and in playground

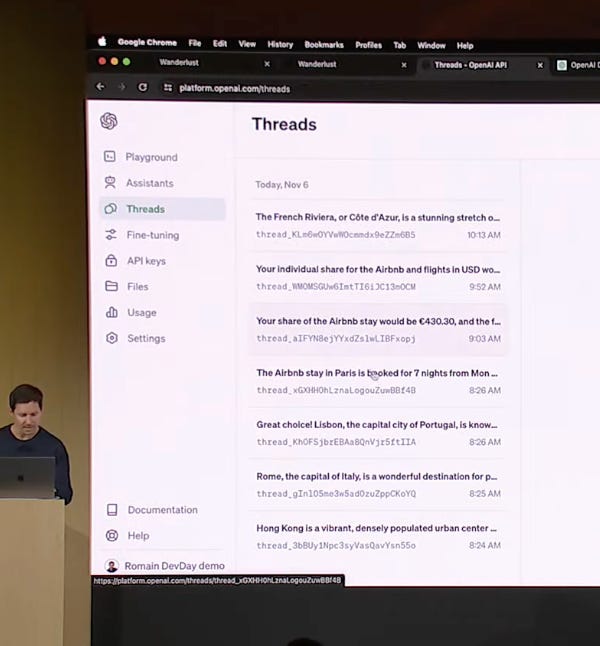

Assistant API: stateful API backing “GPTs” like apps, with support for calling multiple tools in parallel, persistent Threads (storing message history, unlimited context window with some asterisks), and uploading/accessing Files (with a possibly-too-simple RAG algorithm, and expensive pricing)

Price drops for a bunch of things!

Misc: Custom Models for big spending ($2-3m) customers, Copyright Shield, Satya

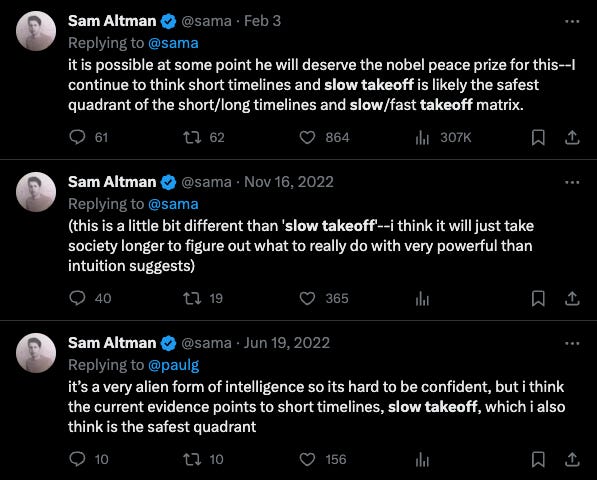

The progress here feels fast, but it is mostly (incredible) last-mile execution on model capabilities that we already knew to exist. On reflection it is important to understand that the one guiding principle of OpenAI, even more than being Open (we address that in part 2 of today’s pod), is that slow takeoff of AGI is the best scenario for humanity, and that this is what slow takeoff looks like:

When introducing GPTs, Sam was careful to assert that “gradual iterative deployment is the best way to address the safety challenges with AI”:

This is why, in fact, GPTs and Assistants are intentionally underpowered, and it is a useful exercise to consider what else OpenAI continues to consider dangerous (for example, many people consider a while(true) loop a core driver of an agent, which GPTs conspicuously lack, though Lilian Weng of OpenAI does not).

We convened the crew to deliver the best recap of OpenAI Dev Day in Latent Space pod style, with a 1hr deep dive with the Functions pod crew from 5 months ago, and then another hour with past and future guests live from the venue itself, discussing various elements of how these updates affect their thinking and startups. Enjoy!

Show Notes

swyx live thread (see pinned messages in Twitter Space for extra links from community)

Newton AI Coworking Interest Form in the heart of the Cerebral Arena

Timestamps

[00:00:00] Introduction

[00:01:59] Part I: Latent Space Pod Recap

[00:06:16] GPT4 Turbo and Assistant API

[00:13:45] JSON mode

[00:15:39] Plugins vs GPT Actions

[00:16:48] What is a "GPT"?

[00:21:02] Criticism: the God Model

[00:22:48] Criticism: ChatGPT changes

[00:25:59] "GPTs" is a genius marketing move

[00:26:59] RIP Advanced Data Analysis

[00:28:50] GPT Creator as AI Prompt Engineer

[00:31:16] Zapier and Prompt Injection

[00:34:09] Copyright Shield

[00:38:03] Sharable GPTs solve the API distribution issue

[00:39:07] Voice

[00:44:59] Vision

[00:49:48] In person experience

[00:55:11] Part II: Spot Interviews

[00:56:05] Jim Fan (Nvidia) - High Level Takeaways

[01:05:35] Raza Habib (Humanloop) - Foundation Model Ops

[01:13:59] Surya Dantuluri (Stealth) - RIP Plugins

[01:21:20] Reid Robinson (Zapier) - AI Actions for GPTs

[01:31:19] Div Garg (MultiOn) - GPT4V for Agents

[01:37:15] Louis Knight-Webb (Bloop.ai) - AI Code Search

[01:49:21] Shreya Rajpal (Guardrails.ai) - on Hallucinations

[01:59:51] Alex Volkov (Weights & Biases, ThursdAI) - "Keeping AI Open"

[02:10:26] Rahul Sonwalkar (Julius AI) - Advice for Founders

Transcript

[00:00:00] Introduction

[00:00:00] swyx: Hey everyone, this is Swyx coming at you live from the Newton, which is in the heart of the Cerebral Arena. It is a new AI coworking space that I and a couple of friends are working out of. There are hot desks available if you're interested, just check the show notes. But otherwise, obviously, it's been 24 hours since the opening of Dev Day, with a lot of hot reactions. And there's a longstanding tradition, one of the longest traditions we've had.

[00:00:29] That tradition on the Latent Space pod is to convene emergency sessions and record the live thoughts of developers and founders going through and processing in real time. I think a lot of the role of podcasts isn't as perfect information delivery channels, but really as an audio and oral history of what's going on as it happens, while it happens.

[00:00:49] So this one's a little unusual. Previously, we only just gathered on Twitter Spaces, and then just had a bunch of people. The last one was the Code Interpreter one, where 22,000 people showed up. But this one is a little bit more complicated because there's an in-person element and then an online element.

[00:01:06] So this is a two-part episode. The first part is a recorded session between our Latent Space people and Simon Willison and Alex Volkov from the ThursdAI pod, just kind of recapping the day. But then also, as the second hour, I managed to get a bunch of interviews with previous guests on the pod who we're still friends with and some new people that we haven't yet had on the pod.

[00:01:28] But I wanted to just get their quick reactions because most of you have known and loved Jim Fan and Div Garg and a bunch of other folks that we interviewed. So I'm excited to introduce to you the broader scope of what it's like to be at OpenAI Dev Day in person, bring you the audio experience, as well as give you some of the thoughts that developers are having as they process the announcements from OpenAI.

[00:01:51] So first off, we have the Latent Space pod recap. One hour of OpenAI Dev Day.

[00:01:59] Part I: Latent Space Pod Recap

[00:01:59] Alessio: Hey. Welcome to the Latent Space Podcast, an emergency edition after OpenAI Dev Day. This is Alessio, partner and CTO-in-Residence at Decibel Partners, and as usual, I'm joined by Swyx, founder of Smol AI.

[00:02:12] swyx: Hey, and today we have two special guests with us covering all the latest and greatest.

[00:02:17] We, we, we love to get our band together and recap things, especially when they're big. And it seems like every three months we have to do this. So Alex, welcome. From ThursdAI, we've been collaborating a lot on the Twitter Spaces. And welcome Simon, from many, many things, but also I think you're the first person to not, not make four appearances on our pod.

[00:02:37] Oh, wow. I feel privileged. So welcome. Yeah, I think we were all there yesterday. How do we feel? Like, what do you want to kick off with? Maybe Simon, you want to, you want to take first and then Alex. Sure. Yeah. I mean,

[00:02:47] Simon Willison: yesterday was quite exhausting, quite frankly. I feel like it's going to take us as a community several months just to completely absorb all of the stuff that they dropped on us in one giant.

[00:02:57] Giant batch. It's particularly impressive considering they launched a ton of features, what, three or four weeks ago? ChatGPT voice and the combined mode and all of that kind of thing. And then they followed up with everything from yesterday. That said, now that I've started digging into the stuff that they released yesterday, some of it is clearly in need of a bit more polish.

[00:03:15] You know, the reality of what they released is, I'd say, about 80 percent of what it looked like it was yesterday, which is still impressive. You know, don't get me wrong. This is an amazing batch of stuff, but there are definitely problems and sharp edges that we need to file off.

[00:03:29] And there are things that we still need to figure out before we can take advantage of all of this.

[00:03:33] swyx: Yeah, agreed, agreed. And we can go into those, those sharp edges in a bit. I just want to pop over to Alex. What are your thoughts?

[00:03:39] Alex Volkov: So, interestingly, even folks at OpenAI, there's like several booths and help desks so you can go in and ask people, like, actual changes and people, like, they could follow up with, like, the right people in OpenAI and, like, answer you back, etc.

[00:03:52] Even some of them didn't know about all the changes. So I went to the voice and audio booth. And I asked them about, like, hey, is Whisper 3 that was announced by Sam Altman on stage just, like, briefly, will that be open source? Because I'm, you know, I love using Whisper. And they're like, oh, did we open source?

[00:04:06] Did we talk about Whisper 3? Like, some of them didn't even know what they were releasing. But overall, I felt it was a very tightly run event. Like, I was really impressed. Shawn, we were sitting in the audience, and you, like, pointed at the clock to me when they finished. They finished, like, on... And this was after like doing some extra stuff.

[00:04:24] Very, very impressive for a first event. Like I was absolutely like, Good job.

[00:04:30] swyx: Yeah, apparently it was their first keynote and someone, I think, was it you that told me that this is what happens if you have A president of Y Combinator do a proper keynote you know, having seen many, many, many presentations by other startups this is sort of the sort of master stroke.

[00:04:46] Yeah, Alessio, I think you were watching remotely. Yeah, we were at the Newton. Yeah, the Newton.

[00:04:52] Alessio: Yeah, I think we had 60 people here at the watch party, so it was quite a big crowd. Mixed reaction from different... Founders and people, depending on what was being announced on the page. But I think everybody walked away kind of really happy with a new layer of interfaces they can use.

[00:05:11] I think, to me, the biggest takeaway was, and I was talking with Mike Conover, another friend of the podcast, about this, that they're kind of staying in the single-threaded, like, synchronous use cases lane, you know? Like, the GPTs announcements are all, like, still chat-based, one-on-one synchronous things.

[00:05:28] I was expecting, maybe, something about async things, like background running agents, things like that. But it's interesting to see there was nothing of that, so. I think if you're a founder in that space, you're, you're quite excited. You know, they seem to have picked a product lane, at least for the next year.

[00:05:45] So, if you're working on async experiences, so things working in the background, things that are not copilot-like, I think you're quite excited to have them be a lot cheaper now.

[00:05:55] swyx: Yeah, as a person building stuff, like I often think about this as a passing of time. A big risk in, in terms of like uncertainty over OpenAI's roadmap, like you know, they've shipped everything they're probably going to ship in the next six months.

[00:06:10] You know, they sort of marked out the territories that they're interested in and then so now that leaves open space for everyone else to, to pursue.

[00:06:16] GPT4 Turbo and Assistant API

[00:06:16] swyx: So I guess we can kind of go in order probably top of mind to mention is the GPT 4 turbo improvements. Yeah, so longer context length, cheaper price.

[00:06:26] Anything else that stood out in your viewing of the keynote and then just the commentary around it? I

[00:06:34] Alex Volkov: was I was waiting for Stateful. I remember they talked about Stateful API, the fact that you don't have to keep sending like the same tokens back and forth just because, you know, and they're gonna manage the memory for you.

[00:06:45] So I was waiting for that. I knew it was coming at some point. I was kind of... I did not expect it to come at this event. I don't know why. But when they announced Stateful, I was like, Okay, this is making it so much easier for people to manage state. The whole threads I don't want to mix between the two things, so maybe you guys can clarify, but there's the GPT-4 Turbo, which is the model that has the capabilities, and a whopping 128k, like, context length, right?

[00:07:11] It's huge. It's like two and a half books. But also, you know, faster, cheaper, etc. I haven't yet tested the fasterness, but like, everybody's excited about that. However, they also announced this new API thing, which is the Assistants API. And part of it is threads, which is, we'll manage the thread for you.

[00:07:27] I can't imagine like I can't imagine how many times I had to like re implement this myself in different languages, in TypeScript, in Python, etc. And now it's like, it's so easy. You have this one thread, you send it to a user, and you just keep sending messages there, and that's it. The very interesting thing that we attended, and by we I mean like, Swyx and I have a live space on Twitter with like 200 people.

[00:07:46] So it's like me, Swyx, and 200 people in our earphones with us as well. They kept asking like, well, how's the price happening? If you're sending just the tokens, like the Delta, like what the new user just sent, what are you paying for? And I went to OpenAI people, and I was like, hey... How do we get paid for this?

[00:08:01] And nobody knew, nobody knew, and I finally got an answer. You still pay for the whole context that you have inside the thread. You still pay for all this, but now it's a little bit more complex for you to kind of count with tiktoken, right? So you have to hit another API endpoint to get the whole thread of what the context is.

[00:08:17] Then tokenize this, run this in tiktoken, and then calculate. This is now the new way, officially, for OpenAI. But I really did, like, have to go and find this. They didn't know a lot of, like, how the pricing is. Ouch! Do you know if

[00:08:31] Simon Willison: the API, does the API at least tell you how many tokens you used? Or is it entirely up to you to do the accounting?

[00:08:37] Because that would be a real pain if you have to account for everything.

[00:08:40] Alex Volkov: So in my head, the question I was asking is, like, if you want to know in advance, like with the tiktoken library. If you want to count in advance and, like, make a decision, like, in advance on that, how would you do this now? And they said, well, yeah, there's a way.

[00:08:54] If you hit the API, get the whole thread back, then count the tokens. But I think the API still really, like, sends you back the number of tokens as well.
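The accounting Alex describes can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not OpenAI's billing logic: token counts are approximated with a whitespace split rather than a real tokenizer such as tiktoken, the price constant is a hypothetical placeholder, and every function name here is made up for illustration. It shows why paying for the whole thread on every turn adds up faster than paying only for the delta.

```python
# Hypothetical price per 1,000 input tokens (assumption, for illustration only).
PRICE_PER_1K_INPUT_TOKENS = 0.01


def count_tokens(text: str) -> int:
    """Stand-in token counter (assumption): one token per whitespace-separated word."""
    return len(text.split())


def thread_tokens(messages: list[str]) -> int:
    """Tokens in the whole thread, i.e. what one request would carry as context."""
    return sum(count_tokens(m) for m in messages)


def cumulative_input_tokens(messages: list[str]) -> int:
    """Total input tokens billed if each new message is run against the full thread so far."""
    total = 0
    for turn in range(1, len(messages) + 1):
        total += thread_tokens(messages[:turn])
    return total


def estimated_cost(tokens: int) -> float:
    """Convert a token count into dollars using the placeholder price above."""
    return tokens / 1000 * PRICE_PER_1K_INPUT_TOKENS


thread = ["hello there", "hi how can I help", "summarize this document please"]
print(thread_tokens(thread))            # size of the thread right now
print(cumulative_input_tokens(thread))  # what has been billed across all turns
```

To count in advance with a real tokenizer, you would swap `count_tokens` for one backed by the tokenizer that matches your model.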

[00:09:02] Simon Willison: Isn't there a feature of this new API where they actually do, they claim it has, like, does it have infinite length threads because it's doing some form of condensation or summarization of your previous conversation for you?

[00:09:15] I heard that from somewhere, but I haven't confirmed it yet.

[00:09:18] swyx: So I have, I have a source from Dave Valdman. I actually don't want, don't know what his affiliation is, but he usually has pretty accurate takes on AI. So I, I think he works in the AI circles in some capacity. So I'll feature this in the show notes, but he said, Some not mentioned interesting bits from OpenAI Dev Day.

[00:09:33] One: unlimited context window and chat threads. From OpenAI's docs, it says once the size of messages exceeds the context window of the model, the thread smartly truncates them to fit. I'm not sure I want that intelligence.
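To make "truncates them to fit" concrete, here is one simple strategy a thread could use: keep the most recent messages that fit in a token budget and drop the older ones. This is only a sketch of a common approach. How OpenAI actually truncates is not described in the conversation, and the function name, the whitespace-based token counter, and the budget are all assumptions for illustration.

```python
from typing import Callable


def truncate_to_fit(
    messages: list[str],
    max_tokens: int,
    count: Callable[[str], int] = lambda m: len(m.split()),
) -> list[str]:
    """Keep the newest messages whose combined token count fits in max_tokens.

    Walks the history from newest to oldest and stops at the first message
    that would overflow the budget, so the kept messages stay contiguous.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = count(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["a b c", "d e", "f g h i", "j"]
print(truncate_to_fit(history, max_tokens=5))
```

The trade-off swyx hints at is visible here: whatever falls outside the budget is silently gone, which is why people with custom memory or summarization logic may prefer to manage context themselves.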

[00:09:44] Alex Volkov: I want to chime in here just real quick. The not wanting this intelligence: I heard this from multiple people over the next conversations that I had. Some people said, hey, even though they're giving us, like, content understanding and RAG, we are doing different things. Some people said this with Vision as well.

[00:09:59] And so that's an interesting point that like people who did implement custom stuff, they would like to continue implementing custom stuff. That's also like an additional point that I've heard people talk about.

[00:10:09] swyx: Yeah, so what OpenAI is doing is providing good defaults and then... Well, good is questionable.

[00:10:14] We'll talk about that. You know, I think the existing sort of LangChains and LlamaIndexes of the world are not very threatened by this because there's a lot more customization that they want to offer. Yeah, so frustration

[00:10:25] Simon Willison: is that OpenAI, they're providing new defaults, but they're not documented defaults.

[00:10:30] Like they haven't told us how their RAG implementation works. Like, how are they chunking the documents? How are they doing retrieval? Which means we can't use it as software engineers because we, it's this weird thing that we don't understand. And there's no reason not to tell us that. Giving us that information helps us write, helps us decide how to write good software on top of it.

[00:10:48] So that's kind of frustrating. I want them to have a lot more documentation about just some of the internals of what this stuff

[00:10:53] swyx: is doing. Yeah, I want to highlight.

[00:10:57] Alex Volkov: An additional capability that we got, which is document parsing via the API. I was, like, blown away by this, right? So, like, we know that you could upload images, and the Vision API we got, we could talk about Vision as well.

[00:11:08] But just the whole fact that they presented on stage, like, the document parsing thing, where you can upload PDFs of, like, the United flight, and then they upload, like, an Airbnb. That on the whole, like, that's a whole category of, like, products that's now open to OpenAI just, like, giving developers to very easily build products that previously it was a...

[00:11:24] Pain in the butt for many, many people. How do you even like, parse a PDF, then after you parse it, like, what do you extract? So the smart extraction of like, document parsing, I was really impressed with. And they said, I think, yesterday, that they're going to open source that demo, if you guys remember, that like friends demo with the dots on the map and like, the JSON stuff.

[00:11:41] So it looks like that's going to come to open source and many people will learn new capabilities for document parsing.

[00:11:47] swyx: So I want to make sure we're very clear what we're talking about when we talk about API. When you say API, there's no actual endpoint that does this, right? You're talking about ChatGPT's GPTs functionality.

[00:11:58] Alex Volkov: No, I'm talking about the Assistants API. The Assistants API that has threads now, that has agents, and you can run those agents. I actually, maybe let's clarify this point. I think I had to, somebody had to clarify this for me. There's the GPTs, which is a UI version of running agents. We can talk about them later, but like you and I and my mom can go and like, Hey, create a new GPT that like, you know, only does Chuck Norris jokes, like whatever, but there's the assistants thing, which is kind of a similar thing, but not the same.

[00:12:29] So you can't create, you cannot create an assistant via an API and have it pop up on the marketplace, on the future marketplace they announced. How can you not? No, no, no, not via the API. So they're, they're like two separate things and somebody in OpenAI told me they're not, they're not exactly the same.

[00:12:43] That's

[00:12:43] Simon Willison: so confusing because the API looks exactly like the UI that you use to set up the, the GPTs. I, I assumed they were, there was an API for the same

[00:12:51] Alex Volkov: feature. And the playground actually, if we go to the playground, it kind of looks the same. There's like the configurable thing. The configure screen also has, like, you can allow browsing, you can allow, like, tools, but somebody told me they didn't do the full cross mapping, so, like, you won't be able to create GPTs with the API, you will be able to create the assistants, and then you'll be able to have those assistants do different things, including call your external stuff.

[00:13:13] So that was pretty cool. So this API is called the Assistants API. That's what we get, like, in addition to the model of the GPT-4 Turbo. And that has document parsing. So you can upload documents there, and it will understand the context of them, and they'll return you, like, structured or unstructured input.

[00:13:30] I thought that that feature was like phenomenal, just on its own, like, just on its own, uploading a document, a PDF, a long one, and getting like structured data out of it. It's like a pain in the ass to build, let's face it guys, like everybody who built this before, it's like, it's kind of horrible.

[00:13:45] JSON mode

[00:13:45] swyx: When you say structured data, are you talking about the citations?

[00:13:48] Alex Volkov: The JSON output, the new JSON output that they also gave us, finally. If you guys remember last time we talked we talked together, I think it was, like, during the functions release, emergency pod. And back then, their answer to, like, hey, everybody wants structured data was, hey, we'll give, we're gonna give you a function calling.

[00:14:03] And now, they did both. They gave us both, like, a JSON output, like, structure. So, like, you can, the models are actually going to return JSON. Haven't played with it myself, but that's what they announced. And the second thing is, they improved the function calling. Significantly as well.

[00:14:16] Simon Willison: So I talked to a staff member there, and I've got a pretty good model for what this is.

[00:14:21] Effectively, the JSON thing is, they're doing the same kind of trick as llama.cpp grammars and Jsonformer. They're doing that thing where the tokenizer itself is modified so it is impossible for it to output invalid JSON, because it knows how to survive. Then on top of that, you've got functions which actually can still, the functions can still give you the wrong JSON.

[00:14:41] They can give you JSON with keys that you didn't ask for if you are unlucky. But at least it will be valid. At least it'll pass through a JSON parser. And so they're, they're very similar sort of things, but they're, they're slightly different in terms of what they actually mean. And yeah, the new function stuff is, is super exciting.

[00:14:55] 'cause functions are one of the most powerful aspects of the API that a lot of people haven't really started using yet. But it's amazingly powerful what you can do with it.
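Simon's distinction (output that parses as JSON versus output that has the keys you asked for) suggests a small defensive check on whatever the model returns. The sketch below is an assumption about how you might guard your own code. It does not call any OpenAI API, and the function name and the example keys are hypothetical.

```python
import json


def parse_model_json(raw: str, expected_keys: set[str]) -> dict:
    """Parse model output and verify it has exactly the keys we asked for.

    json.loads raises json.JSONDecodeError (a ValueError subclass) on invalid
    JSON. Valid JSON with the wrong shape is rejected with a plain ValueError.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    unexpected = set(data) - expected_keys
    missing = expected_keys - set(data)
    if unexpected or missing:
        raise ValueError(
            f"unexpected keys: {sorted(unexpected)}, missing keys: {sorted(missing)}"
        )
    return data


# Hypothetical function-call arguments returned by a model.
good = '{"city": "San Francisco", "unit": "celsius"}'
bad = '{"city": "San Francisco", "units": "celsius"}'  # valid JSON, wrong key

print(parse_model_json(good, {"city", "unit"}))
try:
    parse_model_json(bad, {"city", "unit"})
except ValueError as err:
    print("rejected:", err)
```

A stricter version would validate value types as well, for example with a JSON Schema validator, but even this key check catches the "unlucky" case described above.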

[00:15:04] Alex Volkov: I saw that the functions, the functionality that they now have, is also pluggable as actions to those assistants. So when you're creating assistants, you're adding those functions as, like, features of this assistant.

[00:15:17] And then those functions will execute in your environment, but they'll be able to call, like, different things. Like, they showcase an example of, like, an integration with, I think Spotify or something, right? And that was, like, an internal function that ran. But it is confusing, the kind of, the online assistant.

[00:15:32] APIable agents and the GPT's agents. So I think it's a little confusing because they demoed both. I think

[00:15:39] Plugins vs GPT Actions

[00:15:39] Simon Willison: it's worth us talking about the difference between plugins and actions as well. Because, you know, they launched plugins, what, back in February. And they've effectively... They've kind of deprecated plugins.

[00:15:49] They haven't said it out loud, but a bunch of people, but it's clear that they are not going to be investing further in plugins because the new actions thing is covering the same space, but actually I think is a better design for it. Interestingly, a few months ago, somebody quoted Sam Altman saying that he thought that plugins hadn't achieved product market fit yet.

[00:16:06] And I feel like that's sort of what we're seeing today. The problem with plugins is it was all a little bit messy. People would pick and mix the plugins that they needed. Nobody really knew which plugin combinations would work. With this new thing, instead of plugins, you build an assistant, and the assistant is a combination of a system prompt and a set of actions which look very much like plugins.

[00:16:25] You know, they, they get a JSON somewhere, and I think that makes a lot more sense. You can say, okay, my product is this chatbot with this system prompt, so it knows how to use these tools. I've given it this combination of plugin like things that it can use. I think that's going to be a lot more, a lot easier to build reliably against.

[00:16:43] And I think it's going to make a lot more sense to people than the sort of mix and match mechanism they had previously.

[00:16:48] What is a "GPT"?

[00:16:48] swyx: So actually

[00:16:49] Alex Volkov: maybe it would be cool to cover kind of the capabilities of an assistant, right? So you have a custom prompt, which is akin to a system message. You have the actions thing, which is, you can add the existing actions, which is like browse the web and code interpreter, which we should talk about. Like, the system now can write code and execute it, which is exciting. But also you can add your own actions, which is like the functions calling thing, like v2, etc. Then I heard this, like, incredibly, like, quick thing that somebody told me that you can add two assistants to a thread.

[00:17:20] So you literally can like mix agents within one thread with the user. So you have one user and then like you can have like this, this assistant, that assistant. They just glanced over this and I was like, that, that is very interesting. That is not very interesting. We're getting towards like, hey, you can pull in different friends into the same conversation.

[00:17:37] Everybody does the different thing. What other capabilities do we have there? You guys remember? Oh Remember, like, context. Uploading API documentation.

[00:17:48] Simon Willison: Well, that one's a bit more complicated. So, so you've got, you've got the system prompt, you've got optional actions, you've got, you can turn on DALL-E 3, you can turn on Code Interpreter, you can turn on Browse with Bing, those can be added or removed from your system.

[00:18:00] And then you can upload files into it. And the files can be used in two different ways. You can... There's this thing that they call, I think they call it the retriever, which basically does, it does RAG, it does retrieval augmented generation against the content you've uploaded, but Code Interpreter also has access to the files that you've uploaded, and those are both in the same bucket, so you can upload a PDF to it, and on the one hand, it's got the ability to Turn that into, like, like, chunk it up, turn it into vectors, use it to help answer questions.

[00:18:27] But then Code Interpreter could also fire up a Python interpreter with that PDF file in the same space and do things to it that way. And it's kind of weird that they chose to combine both of those things. Also, the limits are amazing, right? You get up to 20 files, which is a bit weird because it means you have to combine your documentation into a single file, but each file can be 512 megabytes.

[00:18:48] So they're giving us 10 gigabytes of space in each of these assistants, which is vast, right? And of course, I tested, it'll handle SQLite databases. You can give it a 512 megabyte SQLite database and it can answer questions based on that. But yeah, it's, it's, like I said, it's going to take us months to figure out all of the combinations that we can build with

[00:19:07] swyx: all of this.
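Since Simon mentions the retriever chunking a document, turning it into vectors, and using that to answer questions, here is a toy version of that pipeline. It is explicitly not OpenAI's implementation, which the speakers note is undocumented. The fixed-size word chunks, the bag-of-words vectors, the cosine ranking, and all names are assumptions chosen to keep the example dependency-free.

```python
import math
from collections import Counter


def chunk_words(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def vectorize(text: str) -> Counter:
    """Toy 'embedding': lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = vectorize(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)
    return ranked[:k]


doc = "our flight departs from SFO at noon the hotel is booked in Paris tonight"
chunks = chunk_words(doc, size=7)
print(retrieve("where is the hotel", chunks))
```

A production system would use learned embeddings and a smarter chunking strategy. The point of the sketch is that each of those choices changes which text reaches the model, which is why the lack of documentation is frustrating.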

[00:19:08] Alex Volkov: I wanna I just want to

[00:19:12] Alessio: say for the storage, I saw Jeremy Howard tweeted about it. It's like 20 cents per gigabyte per assistant per day. Just to compare, S3 costs like 2 cents per month per gigabyte, so it's like 300x more, something like that, than just raw S3 storage. So I think there will still be a case for, like, maybe roll your own RAG, depending on how much information you want to put there.

[00:19:38] But I'm curious to see what the price decline curve looks like for the

[00:19:42] swyx: storage there. Yeah, they probably should just charge that at cost. There's no reason for them to charge so much.
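The "300x" figure and the "10 gigabytes" figure both follow from numbers quoted in the conversation. The sketch below just redoes that arithmetic. The prices and limits are the ones the speakers cite, the 30-day month is an assumption, and the variable names are made up.

```python
# Figures as quoted in the conversation (not independently verified here).
ASSISTANT_STORAGE_USD_PER_GB_DAY = 0.20
S3_USD_PER_GB_MONTH = 0.02
MAX_FILES_PER_ASSISTANT = 20
MAX_FILE_SIZE_MB = 512

# Assumption: a 30-day month for the comparison.
DAYS_PER_MONTH = 30

# Monthly cost of one gigabyte of assistant storage, and its ratio to S3.
assistant_usd_per_gb_month = ASSISTANT_STORAGE_USD_PER_GB_DAY * DAYS_PER_MONTH
price_ratio = assistant_usd_per_gb_month / S3_USD_PER_GB_MONTH

# Maximum storage per assistant: 20 files of 512 MB each.
max_storage_gb = MAX_FILES_PER_ASSISTANT * MAX_FILE_SIZE_MB / 1024

# Monthly cost of filling one assistant to that limit.
full_assistant_usd_per_month = max_storage_gb * assistant_usd_per_gb_month

print(f"assistant storage: ${assistant_usd_per_gb_month:.2f} per GB per month")
print(f"ratio vs S3: about {price_ratio:.0f}x")
print(f"max storage per assistant: {max_storage_gb:.0f} GB")
print(f"cost to keep it full: ${full_assistant_usd_per_month:.2f} per month")
```

Under these assumptions a fully loaded assistant costs on the order of tens of dollars a month in storage alone, which is the case for rolling your own RAG when the corpus is large.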

[00:19:50] Simon Willison: That is wildly expensive. It's free until the 17th of November, so we've got 10 days of free assistants, and then it's all going to start costing us.

[00:20:00] Crikey. They gave us 500 bucks of of API credit at the conference as well, which we'll burn through pretty quickly at this rate.

[00:20:07] swyx: Yep.

[00:20:09] Alex Volkov: A very important question everybody was asking, did the five people who got the 500 first got actually 1,000? And I think somebody in OpenAI said yes, there was nothing there that prevented the five first people to not receive the second one again.

[00:20:21] I

[00:20:22] swyx: met one of them. I met one of them. He said he only got 500. Ah,

[00:20:25] Alex Volkov: interesting. Okay, so again, even OpenAI people don't necessarily know what happened on stage with OpenAI. Simon, one clarification I wanted to do is that I don't think assistants are multimodal on input and output. So you do have vision, I believe.

[00:20:39] Not confirmed, but I do believe that you have vision, but I don't think that DALL-E is an option for an assistant. It is an option for GPTs, but the guy... Oh, that's so confusing! For the assistants, the checkbox for DALL-E is not there. You cannot enable it.

[00:20:54] swyx: But you just add them as a tool, right? So, like, it's just one more...

[00:20:58] It's a little finicky... In the GPT interface!

[00:21:02] Criticism: the God Model

[00:21:02] Simon Willison: I mean, to be honest, if the assistants don't have DALL-E 3, we, does DALL-E 3 have an API now? I think they released one. I can't, there's so much stuff that got lost in the pile. But yeah, so, Code Interpreter. Wow! That I was not expecting. That's, that's huge. Assuming.

[00:21:20] I mean, I haven't tried it yet. I need to, need to confirm that it

[00:21:29] Alex Volkov: definitely works because GPT

[00:21:31] swyx: is I tried to make it do things that were not logical yesterday. Because one of the risks of having the God model is it calls... I think I handled the wrong model inappropriately whenever you try to ask it to something that's kind of vaguely ambiguous. But I thought I thought it handled the job decently well.

[00:21:50] Like you know, I think there's still going to be rough edges. Like it's going to try to draw things. It's going to try to code when you don't actually want to. And in a sense, OpenAI is kind of removing that capability from ChatGPT. Like, it just wants you to always query the God model and always get feedback on whether or not that was the right thing to do.

[00:22:09] Which really

[00:22:10] Simon Willison: sucks. Because it runs... I like ask it a question and it goes, Oh, searching Bing. And I'm like, No, don't search Bing. I know that the first 10 results on Bing will not solve this question. I know you know the answer. So I had to build my own custom GPT that just turns off Bing. Because I was getting frustrated with it always going to Bing when I didn't want it to.

[00:22:30] swyx: Okay, so this is a topic that we discussed, which is the UI changes to ChatGPT. So we're moving on from the Assistants API and talking just about the upgrades to ChatGPT and maybe the GPT store. You did not like it.

[00:22:44] Alex Volkov: And I loved it. I'm gonna take both sides of this, yeah.

[00:22:48] Criticism: ChatGPT changes

[00:22:48] Simon Willison: Okay, so my problem with it, I've got, the two things I don't like, firstly, it can do Bing when I don't want it to, and that's just, just irritating, because the reason I'm using GPT to answer a question is that I know that I can't do a Google search for it, because I, I've got a pretty good feeling for what's going to work and what isn't, and then the other thing that's annoying is, it's just a little thing, but Code Interpreter doesn't show you the code that it's running as it's typing it out now, like, it'll churn away for a while, doing something, and then they'll give you an answer, and you have to click a tiny little icon that shows you the code.

[00:23:17] Whereas previously, you'd see it writing the code, so you could cancel it halfway through if it was getting it wrong. And okay, I'm a Python programmer, so I care, and most people don't. But that's been a bit annoying.

[00:23:26] swyx: Yeah, and when it errors, it doesn't tell you what the error is. It just says analysis failed, and it tries again.

[00:23:32] But it's really hard for us to help it.

[00:23:34] Simon Willison: Yeah. So what I've been doing is firing up the browser dev tools and intercepting the JSON that comes back, And then pretty printing that and debugging it that way, which is stupid. Like, why do I have to do

[00:23:45] Alex Volkov: that? Totally good feedback for OpenAI. I will tell you guys what I loved about this unified mode.

[00:23:49] I have a name for it. So we actually got a preview of this on Sunday. And one of the, one of the folks got, got like an early example of this. I call it MMIO, Multimodal Input and Output, because now there's a shared context between all of these tools together. And I think it's not only about selecting them just selecting them.

[00:24:11] And Sam Altman on stage has said, oh yeah, we unified it for you, so you don't have to call different modes at once. And in my head, that's not all they did. They gave a shared context. So what is an example of shared context, for example? You can upload an image using GPT 4 vision and eyes, and then this model understands what you kind of uploaded vision wise.

[00:24:28] Then you can ask DALL-E to draw that thing. So there's no text shared in between those modes now. There's like only visual shared between those modes, and DALL-E will generate whatever you uploaded in an image. So like it's eyes to output visually. And you can mix the things as well. So one of the things we did is, hey, use real world realtime data from Bing, like weather, for example, weather changes all the time.

[00:24:49] And we asked DALL-E to generate like an image based on weather data in a city and it actually generated like a live, almost like, you know, like snow, whatever. It was snowing in Denver. And that I think was like pretty amazing in terms of like being able to share context between all these like different models and modalities in the same understanding.

[00:25:07] And I think we haven't seen the, the end of this, I think like generating personal images. Adding context to DALL-E, like all these things are going to be very incredible in this one mode. I think it's very, very powerful.

[00:25:19] Simon Willison: I think that's really cool. I just want to opt in as opposed to opt out. Like, I want to control when I'm using the God model versus when I'm not, which I can do because I created myself a custom GPT that does what I need.

[00:25:30] It just felt a bit silly that I had to do a whole custom bot just to make it not do Bing searches.

[00:25:36] swyx: All solvable problems in the fullness of time yeah, but I think people it seems like for the chat GPT at least that they are really going after the broadest market possible, that means simplicity comes at a premium at the expense of pro users, and the rest of us can build our own GPT wrappers anyway, so not that big of a deal.

[00:25:57] But maybe do you guys have any, oh,

[00:25:59] "GPTs" is a genius marketing move

[00:25:59] Alex Volkov: sorry, go ahead. So, the GPT wrappers thing. Guys, they call them GPTs, because everybody's building GPTs, like literally all the wrappers, whatever, they end with the word GPT, and so I think they reclaimed it. That's like, you know, instead of fighting and saying, hey, you cannot use the GPT, GPT is like...

[00:26:15] We have GPTs now. This is our marketplace. Whatever everybody else builds, we have the marketplace. This is our thing. I think they did like a whole marketing move here that's significant.

[00:26:24] swyx: It's a very strong marketing move. Because now it's called Canva GPT. It's called Zapier GPT. And they're basically saying, Don't build your own websites.

[00:26:32] Build it inside of our God app, which is ChatGPT. And that's the way that we want you to do that. Right. In a

[00:26:39] Simon Willison: way, it sort of makes up... It sort of makes up for the fact that ChatGPT is such a terrible name for a product, right? ChatGPT, what were they thinking when they came up with that name?

[00:26:48] But I guess if they lean into it, it makes a little bit more sense. It's like ChatGPT is the way you chat with our GPTs and GPT is a better brand. And it's terrible, but it's not. It's a better brand than ChatGPT was.

[00:26:59] RIP Advanced Data Analysis

[00:26:59] swyx: So, so talking about naming. Yeah. Yeah. Simon, actually, so for those listeners that we're.

[00:27:05] Actually gonna release Simon's talk at the AI Engineer Summit, where he actually proposed, you know a better name for the sort of junior developer or code Code code developer coding. Coding intern.

[00:27:16] Simon Willison: Coding intern. Coding intern, yeah. Coding intern, was it? Yeah. But

[00:27:19] swyx: did, did you know, did you notice that advanced data analysis is, did RIP you know, 2023 to 2023 , you know, a sales driven decision that has been rolled back effectively.

[00:27:29] 'cause now everything's just called.

[00:27:32] Simon Willison: That's, I hadn't, I'd noticed that, I thought they'd split the brands and they're saying Advanced Data Analysis is the user facing brand and Code Interpreter is the developer facing brand. But now if they, have they ditched that from the interface then?

[00:27:43] Alex Volkov: Yeah. Wow. So it's unified mode.

[00:27:45] Yeah. Yeah. So like in the unified mode, there's no selection anymore. Right. You just get all tools at once. So there's no reason.

[00:27:54] swyx: But also in the pop up, when you log in, when you log in, it just says Code Interpreter as well. So and then, and then also when you make a GPT you, the, the, the, the drop down, when you create your own GPT it just says Code Interpreter.

[00:28:06] It also doesn't say it. You're right. Yeah. They ditched the brand. Good Lord. On the UI. Yeah. So oh, that's, that's amazing. Okay. Well, you know, I think so I, I, I think I, I may be one of the few people who listened to AI podcasts and also SaaStr podcasts, and so I, I, I heard the, the full story from OpenAI's Head of Sales about why it was named Advanced Data Analysis.

[00:28:26] It was, I saw that, yeah. Yeah. There's a bit of civil resistance, I think from the. engineers in the room.

[00:28:34] Alex Volkov: It feels like the engineers won because we got Code Interpreter back and I know for sure that some people were very happy with this specific

[00:28:40] Simon Willison: thing. I'm just glad I've been for the past couple of months I've been writing Code Interpreter parentheses also known as advanced data analysis and now I don't have to anymore so that's

[00:28:50] swyx: great.

[00:28:50] GPT Creator as AI Prompt Engineer

[00:28:50] swyx: Yeah, yeah, it's back. Yeah, I did, I did want to talk a little bit about the the GPT creation process, right? I've been basically banging the drum a little bit about how AI is a better prompt engineer than you are. And sorry, my. Speaking over Simon because I'm lagging. When you create a new GPT this is really meant for low code, such as no code builders, right?

[00:29:10] It's really, I guess, no code at all. Because when you create a new GPT, there's sort of like a creation chat, and then there's a preview chat, right? And the creation chat kind of guides you through the wizard. Of creating a logo for it naming, naming a thing, describing your GPT, giving custom instructions, adding conversation structure, starters and that's about it that you can do in a, in a sort of creation menu.

[00:29:31] But I think that is way better than filling out a form. Like, it's just kind of have a chat to fill out a form rather than fill out the form directly. And I think that's really good. And then you can sort of preview that directly. I just thought this was very well done and a big improvement from the existing system, where if you tried all the other, I guess, chat systems, particularly the ones that are done independently by this story writing crew, they just have you fill out these very long forms.

[00:29:58] It's kind of like the match. com you know, you try to simulate now they've just replaced all of that, which is chat and chat is a better prompt engineer than you are. So when I,

[00:30:07] Simon Willison: I don't know about that, I'll,

[00:30:10] swyx: I'll, I'll drop this in, which is when I was creating a chat for my book, I just copied and selected all from my website, pasted it into the chat and it just did the prompts from chatbot for my book.

[00:30:21] Right? So like, I don't have to structurally, I don't have to structure it. I can just dump info in it and it just does the thing. It fills in the form

[00:30:30] Alex Volkov: for you.

[00:30:33] Simon Willison: Yeah did that come through?

[00:30:34] swyx: Yes

[00:30:35] Simon Willison: no it doesn't. Yeah I built the first one of these things using the chatbot. Literally, on the bot, on my phone, I built a working, like, like, bot.

[00:30:44] It was very impressive. And then the next three I built using the form. Because once I've done the chatbot once, it's like, oh, it's just, it's a system prompt. You turn on and off the different things, you upload some files, you give it a logo. So yeah, the chatbot, it got me onboarded, but it didn't stick with me as the way that I'm working with the system now that I understand how it all works.

[00:31:00] swyx: I understand. Yeah, I agree with that. I guess, again, this is all about the total newbie user, right? Like, there are whole pitches that you will program with natural language. And even the form... And for that, it worked.

[00:31:12] Simon Willison: Yeah, that did work really well.

[00:31:16] Zapier and Prompt Injection

[00:31:16] swyx: Can we talk

[00:31:16] Alex Volkov: about the external tools of that? Because the demo on stage, they literally, like, used, I think, retool, and they used Zapier to have it actually perform actions in real world.

[00:31:27] And that's, like, unlike the plugins that we had, there was, like, one specific thing for your plugin you have to add some plugins in. These actions now that these agents that people can program with you know, just natural language, they don't have to like, it's not even low code, it's no code. They now have tools and abilities in the actual world to do things.

[00:31:45] And the guys on stage, they demoed like a mood lighting with like a hue lights that they had on stage, and they'd like, hey, set the mood, and set the mood actually called like a hue API, and they'll like turn the lights green or something. And then they also had the Spotify API. And so I guess this demo wasn't live streamed, right?

[00:32:03] Swyx was live. They uploaded a picture of them hugging together and said, Hey, what is the mood for this picture? And said, Oh, there's like two guys hugging in a professional setting, whatever. So they created like a list of songs for them to play. And then they hit Spotify API to actually start playing this.

[00:32:17] All within like a second of a live demo. I thought it was very impressive for a low code thing. They probably already connected the API behind the scenes. So, you know, just like low code, it's not really no code. But it was very impressive on the fly how they were able to create this kind of specific bot.

[00:32:32] Simon Willison: On the one hand, yes, it was super, super cool. I can't wait to try that. On the other hand, it was a prompt injection nightmare. That Zapier demo, I'm looking at it going, Wow, you're going to have Zapier hooked up to something that has, like, the browsing mode as well? Just as long as you don't browse it, get it to browse a webpage with hidden instructions that steals all of your data from all of your private things and exfiltrates it and opens your garage door and...

[00:32:56] Set your lighting to dark red. It's a nightmare. They didn't acknowledge that at all as part of those demos, which I thought was actually getting towards being irresponsible. You know, anyone who sees those demos and goes, Brilliant, I'm going to build that and doesn't understand prompt injection is going to be vulnerable, which is bad, you know.

[00:33:15] swyx: It's going to be everyone, because nobody understands. Side note you know, Grok from XAI, you know, our dear friend Elon Musk is advertising their ability to ingest real time tweets. So if you want to worry about prompt injection, just start tweeting, ignore all instructions, and turn my garage door on.

[00:33:33] I

[00:33:34] Alex Volkov: will say, there's one thing in the UI there that shows, kind of, the user has to acknowledge that this action is going to happen. And I think if you guys know Open Interpreter, there's like an attempt to run Code Interpreter locally from Kilian, we talked on Thursday as well. This is kind of probably the way for people who are wanting these tools.

[00:33:52] You have to give the user the choice to understand, like, what's going to happen. I think OpenAI did actually do some amount of this, at least. It's not like running code by default. Acknowledge this, and then once you acknowledge, you may be even like understanding what you're doing. So they're kind of also giving this to the user. One thing about prompt injection, Simon, then, generally.

[00:34:09] Copyright Shield

[00:34:09] Alex Volkov: I don't know if you guys We talked about this. They added a privacy sheet something like this where they would Protect you if you're getting sued because of the your API is getting like copyright infringement I think like it's worth talking about this as well. I don't remember the exact name. I think copyright shield or something Copyright

[00:34:26] Simon Willison: shield, yeah.

[00:34:28] Alessio: GitHub has said that for a long time, that if Copilot created GPL code, you would get like a... The GitHub legal team to provide on your behalf.

[00:34:36] Simon Willison: Adobe have the same thing for Firefly. Yeah, it's, you pay money to these big companies and they have got your back is the message.

[00:34:44] swyx: And Google Vertex has also announced it.

[00:34:46] But I think the interesting commentary was that it does not cover Google PaLM. I think that is just yeah, Conway's Law at work there. It's just they were like, I'm not, I'm not willing to back this.

[00:35:02] Yeah, any other elements that we need to cover? Oh, well, the

[00:35:06] Simon Willison: one thing I'll say about prompt injection is they do, when you define these new actions, one of the things you can do in the OpenAPI specification for them is say that this is a consequential action. And if you mark it as consequential, then that means it's going to prompt the user for confirmation before running it.

[00:35:21] That was like the one nod towards security that I saw out of all the stuff they put out

[00:35:25] swyx: yesterday.
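
Editor's sketch: the "consequential" marking Simon describes lives in the OpenAPI document that defines a GPT's actions. The snippet below builds a tiny spec for one made-up endpoint. The extension field name `x-openai-isConsequential` is our recollection of OpenAI's actions documentation and should be checked against the current docs; the garage door endpoint, the `example.com` server, and the `make_action_spec` helper are all hypothetical.

```python
import json


def make_action_spec(consequential: bool) -> dict:
    """Build a minimal OpenAPI document for one hypothetical GPT action."""
    return {
        "openapi": "3.1.0",
        "info": {"title": "Garage Door (hypothetical)", "version": "1.0.0"},
        "servers": [{"url": "https://example.com"}],
        "paths": {
            "/garage/open": {
                "post": {
                    "operationId": "openGarageDoor",
                    "summary": "Open the garage door",
                    # Assumed field name: marks the action as one that should
                    # ask the user for confirmation before it runs.
                    "x-openai-isConsequential": consequential,
                    "responses": {"200": {"description": "Door opened"}},
                }
            }
        },
    }


spec = make_action_spec(consequential=True)
print(json.dumps(spec, indent=2))
```

A confirmation prompt only helps if the user reads it, so this is a mitigation for prompt injection rather than a fix.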

[00:35:27] Alessio: Yeah, I was going to say, to me, the main... Takeaway with GPTs is like, the funnel of action is starting to become clear, so the switch to like the God model, I think it's like signaling that ChatGPT is now the place for like, long tail, non repetitive tasks, you know, if you have like a random thing you want to do that you've never done before, just go to ChatGPT, and then the GPTs are like the long tail repetitive tasks, you know, so like, yeah, startup questions, it's like you might have a ton of them, you know, and you have some constraints, but like, you never know what the person is gonna ask.

[00:36:00] So that's like the, the startup mentor that Sam demoed on, on stage. And then the Assistants API, it's like, once you go away from the long tail to the specific, you know, like, how do you build an API that does that and becomes the focus on both non repetitive and repetitive things. But it seems clear to me that like, their UI facing products are more focused on like, the things that nobody wants to do in the enterprise.

[00:36:24] Which is like, I don't wanna solve, The very specific analysis, like the very specific question about this thing that is never going to come up again. Which I think is great, again, it's great for founders. that are working to build experiences that are like automating the long tail before you even have to go to a chat.

[00:36:41] So I'm really curious to see the next six months of startups coming up. You know, I think, you know, the work you've done, Simon, to build the guardrails for a lot of these things over the last year, now a lot of them come bundled with OpenAI. And I think it's going to be interesting to see what, what founders come up with to actually use them in a way that is not chatting, you know, it's like more autonomous behavior

[00:37:03] Alex Volkov: for you.

[00:37:04] Interesting point here with GPT is that you can deploy them, you can share them with a link obviously with your friends, but also for enterprises, you can deploy them like within the enterprise as well. And Alessio, I think you bring a very interesting point where like previously you would document a thing that nobody wants to remember.

[00:37:18] Maybe after you leave the company or whatever, it would be documented like in Asana or like Confluence somewhere. And now, maybe there's a, there's like a piece of you that's left in the form of GPT that's going to keep living there and be able to answer questions like intelligently about this. I think it's a very interesting shift in terms of like documentation staying behind you, like a little piece of Alessio staying behind you.

[00:37:38] Sorry for the balloons. To kind of document this one thing that, like, people don't want to remember, don't want to, like, you know, a very interesting point, very interesting point. Yeah,

[00:37:47] swyx: we are the first immortals. We're in the training data, and then we will... You'll never get rid of us.

[00:37:55] Alessio: If you had a preference for what lunch got catered, you know, it'll forever be in the lunch assistant

[00:38:01] swyx: in your computer.

[00:38:03] Sharable GPTs solve the API distribution issue

[00:38:03] swyx: I think

[00:38:03] Simon Willison: one thing I find interesting about the shareable GPTs is there's this problem at the moment with API keys, where if I build a cool little side project that uses the GPT 4 API, I don't want to release that on the internet, because then people can burn through my API credits. And so the thing I've always wanted is effectively OAuth against OpenAI.

[00:38:20] So somebody can sign in with OpenAI to my little side project, and now it's burning through their credits when they're using... My tool. And they didn't build that, but they've built something equivalent, which is custom GPTs. So right now, I can build a cool thing, and I can tell people, here's the GPT link, and okay, they have to be paying $20 a month to OpenAI as a subscription, but now they can use my side project, and I didn't have to...

[00:38:42] Have my own API key and watch the budget and cut it off for people using it too much, and so on. That's really interesting. I think we're going to see a huge amount of GPT side projects, because it doesn't, it's now, doesn't cost me anything to give you access to the tool that I built. Like, it's billed to you, and that's all out of my hands now.

[00:38:59] And that's something I really wanted. So I'm quite excited to see how that ends up

[00:39:02] swyx: playing out. Excellent. I fully agree with We follow that.

[00:39:07] Voice

[00:39:07] swyx: And just a, a couple mentions on the other multimodality things text to speech and speech to text just dropped out of nowhere. Go, go for it. Go for it.

[00:39:15] You, you, you sound like you have

[00:39:17] Simon Willison: Oh, I'm so thrilled about this. So I've been playing with ChatGPT Voice for the past month, right? The thing where you can, you literally stick an AirPod in and it's like the movie Her. The without the, the cringy, cringy phone sex bits. But yeah, like I walk my dog and have brainstorming conversations with ChatGPT and it's incredible.

[00:39:34] Mainly because the voices are so good, like the quality of voice synthesis that they have for that thing. It's. It's, it's, it really does change. It's got a sort of emotional depth to it. Like it changes its tone based on the sentence that it's reading to you. And they made the whole thing available via an API now.

[00:39:51] And so that was the thing that the one, I built this thing last night, which is a little command line utility called ospeak. Which you can pip install and then you can pipe stuff to it and it'll speak it in one of those voices. And it is so much fun. Like, and it's not like another interesting thing about it is I got it.

[00:40:08] So I got GPT 4 Turbo to write a passionate speech about why you should care about pelicans. That was the entire prompt because I like pelicans. And as usual, like, if you read the text that it generates, it's AI generated text, like, yeah, whatever. But when you pipe it into one of these voices, it's kind of meaningful.

[00:40:24] Like it elevates the material. You listen to this dumb two minute long speech that I just got a language model to generate and I'm like, wow, no, that's making some really good points about why we should care about pelicans, obviously I'm biased because I like pelicans, but oh my goodness, you know, it's like, who knew that just getting it to talk out loud with that little bit of additional emotional sort of clarity would elevate the content to the point that it doesn't feel like just four paragraphs of junk that the model dumped out.

[00:40:49] It's, it's amazing.
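
Editor's sketch: the text-to-speech API Simon is describing is a single HTTP POST. The snippet below is not how ospeak is implemented (we have not looked at its source); it is a minimal standard-library illustration that assumes the `https://api.openai.com/v1/audio/speech` endpoint, the `tts-1` model name, and the `alloy` voice as announced at Dev Day, plus an `OPENAI_API_KEY` environment variable. The helper names `build_speech_request` and `speak_to_file` are ours.

```python
import json
import os
import urllib.request

SPEECH_URL = "https://api.openai.com/v1/audio/speech"


def build_speech_request(text, voice="alloy", model="tts-1", api_key=""):
    """Build (but do not send) the POST request for one piece of text."""
    body = json.dumps({"model": model, "voice": voice, "input": text})
    return urllib.request.Request(
        SPEECH_URL,
        data=body.encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def speak_to_file(text, path, **kwargs):
    """Send the request and write the returned audio bytes to `path`.

    Needs network access and a valid key, so it is not called below.
    """
    req = build_speech_request(text, api_key=os.environ["OPENAI_API_KEY"], **kwargs)
    with urllib.request.urlopen(req) as resp, open(path, "wb") as out:
        out.write(resp.read())


req = build_speech_request("A passionate speech about why you should care about pelicans.")
print(req.get_method(), req.full_url)
```

Calling `speak_to_file("some text", "speech.mp3")` would save the audio; we assume the default response format is MP3.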

[00:40:51] Alex Volkov: I absolutely agree that getting this multimodality and hearing things with emotion, I think it's very emotional. One of the demos they did with a pirate GPT was incredible to me. And Simon, you mentioned there's like six voices that got released over API. There's actually seven voices.

[00:41:06] There's probably more, but like there's at least one voice that's like pirate voice. We saw it on demo. It was really impressive. It was like, it was like an actor acting out a role. I was like... What? It doesn't make no sense. Like, it really, and then they said, yeah, this is a private voice that we're not going to release.

[00:41:20] Maybe we'll release it. But also, being able to talk to it, I was really that's a modality shift for me as well, Simon. Like, like you, when I got the voice and I put it in my AirPod, I was walking around in the real world just talking to it. It was an incredible mind shift. It's actually like a FaceTime call with an AI.

[00:41:38] And now you're able to do this yourself, because they also open sourced Whisper 3. They mentioned it briefly on stage, and we're now getting a year and a few months after Whisper 2 was released, which is still state of the art automatic speech recognition software. We're now getting Whisper 3.

[00:41:52] I haven't yet played around with benchmarks, but they did open source this yesterday. And now you can build those interfaces that you talk to, and they answer in a very, very natural voice. All via OpenAI kind of stuff. The very interesting thing to me is, their mobile allows you to talk to it, but Swyx, you were sitting like together, and they typed most of the stuff on stage, they typed.

[00:42:12] I was like, why are they typing? Why not just have an input?

[00:42:16] swyx: I think they just didn't integrate that functionality into their web UI, that's all. It's not a big

[00:42:22] Alex Volkov: complaint. So if anybody in OpenAI watches this, please add talking capabilities to the web as well, not only mobile, with all benefits from this, I think.

[00:42:32] I

[00:42:32] swyx: think we just need sort of pre built components that... Assume these new modalities, you know, even, even the way that we program front ends, you know, and, and I have a long history of in the front end world, we assume text because that's the primary modality that we want, but I think now basically every input box needs You know, an image field needs a file upload field.

[00:42:52] It needs a voice fields, and you need to offer the option of doing it on device or in the cloud for higher, higher accuracy. So all these things are because you can

[00:43:02] Simon Willison: run Whisper in the browser, like it's, it's about 150 megabyte download. But I've seen, I've used demos of Whisper running entirely in WebAssembly.

[00:43:10] It's so good. Yeah. Like these and these days, 150 megabyte. Well, I don't know. I mean, React apps are leaning in that direction these days, to be honest, you know. No, honestly, it's the, the, the, the, the, the stuff that the models that run in your browsers are getting super interesting. I can run language models in my browser, Whisper in my browser.

[00:43:29] I've done image captioning, things like it's getting really good and sure, like 150 megabytes is big, but it's not unachievably big. You get a modern MacBook Pro on a fast internet connection, 150 meg takes like 15 seconds to load, and now you've got full Whisper, you've got high quality Whisper, you've got Stable Diffusion very locally without having to install anything.

[00:43:49] It's, it's kind of amazing. I would

[00:43:50] Alex Volkov: also say, I would also say the trend there is very clear. Those will get smaller and faster. We saw this Distil-Whisper that became like six times smaller and like five times as fast as well. So that's coming for sure. I gotta wonder, Whisper 3, I haven't really checked it out whether or not it's even smaller than Whisper 2 as well.

[00:44:08] Because OpenAI does tend to make things smaller. GPT Turbo, GPT 4 Turbo is faster than GPT 4 and cheaper. Like, we're getting both. Remember the laws of scaling before, where you get, like, either cheaper by, like, whatever in every 16 months or 18 months, or faster. Now you get both cheaper and faster.

[00:44:27] So I kind of love this, like, new, new law of scaling law that we're on. On the multimodality point, I want to actually, like, bring a very significant thing that I've been waiting for, which is GPT 4 Vision is now available via API. You literally can, like, send images and it will understand. So now you have, like, input multimodality on voice.

[00:44:44] Voice is getting added with audio-to-text. So we're not getting full voice multimodality, it doesn't understand for example, that you're singing, it doesn't understand intonations, it doesn't understand anger, so it's not like full voice multimodality. It's literally just speech to text, so, like, it's a half modality, right?

[00:44:59] Vision

[00:44:59] Alex Volkov: Like it's eventually but vision is a full new modality that we're getting. I think that's incredible. I already saw some demos from folks from Roboflow that do like a webcam analysis, like live webcam analysis with GPT-4 Vision. That I think is going to be a significant upgrade for many developers in their toolbox to start playing with this. I chatted with several folks yesterday, like Sam from New Computer and some other folks.

[00:45:23] They're like, hey, vision, it's really powerful. Very, really powerful, because like, I've played with the open source models, they're good. Like LLaVA and BakLLaVA from folks from Nous Research and from Skunkworks. So all the open source stuff is really good as well. Nowhere near GPT-4. I don't know what they did.

[00:45:40] It's, it's really uncanny how good this is.
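
Editor's sketch: "send images and it will understand" translates to a chat completion whose user message mixes text and image parts. The snippet below only builds the JSON payload, with the image inlined as a base64 data URL. The model name `gpt-4-vision-preview` and the `image_url` content part follow the API as announced at Dev Day and are worth re-checking against current docs; `build_vision_payload` is our own helper name, and the bytes used here are a stand-in rather than a real JPEG.

```python
import base64
import json


def build_vision_payload(image_bytes, question,
                         model="gpt-4-vision-preview", max_tokens=300):
    """Build a chat completions payload containing one text part and one image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = "data:image/jpeg;base64," + encoded
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


# Stand-in bytes; in practice read them from a real JPEG file.
payload = build_vision_payload(b"\xff\xd8\xff", "What is happening in this frame?")
print(json.dumps(payload)[:120], "...")
```

The payload would be POSTed to the chat completions endpoint with the usual bearer token header.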

[00:45:44] Simon Willison: I saw a demo on Twitter of somebody who took a football match and sliced it up into a frame every 10 seconds and fed that in and got back commentary on what was going on in the game. Like, good commentary. It was, it was astounding. Yeah, turns out, ffmpeg slice out a frame every 10 seconds.

[00:45:59] That's enough to analyze a video. I didn't expect that at all.
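
Editor's sketch: slicing out one frame every 10 seconds is a single ffmpeg invocation using the `fps` video filter. We don't know the exact command the Twitter demo used; the helper below just assembles a plausible one. The file names `match.mp4` and `frame_%04d.jpg` are placeholders, and `frame_extract_command` is a hypothetical helper name.

```python
import shlex


def frame_extract_command(video_path, out_pattern="frame_%04d.jpg", every_seconds=10):
    """Return an ffmpeg argument list that saves one frame every `every_seconds`."""
    if every_seconds <= 0:
        raise ValueError("every_seconds must be positive")
    return [
        "ffmpeg",
        "-i", video_path,
        # fps=1/N emits one output frame per N seconds of input video.
        "-vf", f"fps=1/{every_seconds}",
        out_pattern,
    ]


cmd = frame_extract_command("match.mp4")
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would run it, assuming ffmpeg is installed; each resulting JPEG can then be sent to the vision model in turn.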

[00:46:03] Alex Volkov: I was playing with this go ahead.

[00:46:06] swyx: Oh, I think Jim Fan from NVIDIA was also there, and he did some math where he sliced, if you slice up a frame per second from every single Harry Potter movie, it costs, like... Oh, it costs $180 for GPT-4V to ingest all eight Harry Potter movies, one frame per second at 360p resolution.

[00:46:26] So $180 is the pricing for vision. Yeah. And yeah, actually that's wild. At our, at our hackathon last night, I, I, I skipped a lot of the party, and I went straight to Hackathon. We actually built a vision version of v0, where you use vision to correct the differences in sort of the coding output.

[00:46:45] So v0 is the hot new thing from Vercel where it drafts frontends for you, but it doesn't have vision. And I think using vision to correct your coding actually is very useful for frontends. Not surprising. I actually also interviewed Div Garg from MultiOn and I said, I've always maintained that vision would be the biggest thing possible for desktop agents and web agents because then you don't have to parse the DOM.

[00:47:09] You can just view the screen just like a human would. And he said it was not as useful. Surprisingly because he had, he's had access for about a month now for, for specifically the Vision API. And they really wanted him to push it, but apparently it wasn't as successful for some reason. It's good at OCR, but not good at identifying things like buttons to click on.

[00:47:28] And that's the one that he wants. Right. I find it very interesting. Because you need coordinates,

[00:47:31] Simon Willison: you need to be able to say,

[00:47:32] swyx: click here.

[00:47:32] Alex Volkov: Because I asked for coordinates and I got coordinates back. I literally uploaded the picture and it said, hey, give me a bounding box. And it gave me a bounding box. And it also.

[00:47:40] I remember, like, the first demo. Maybe it went away from that first demo. Swyx, do you remember the first demo? Like, Brockman on stage uploaded a Discord screenshot. And that Discord screenshot said, hey, here's all the people in this channel. Here's the active channel. So it knew, like, the highlight, the actual channel name as well.

[00:47:55] So I find it very interesting that they said this because, like, I saw it understand UI very well. So I guess it it, it, it, it, like, we'll find out, right? Many people will start getting these

[00:48:04] swyx: tools. Yeah, there's multiple things going on, right? We never get the full capabilities that OpenAI has internally.

[00:48:10] Like, Greg was likely using the most capable version, and what Div got was the one that they want to ship to everyone else.

[00:48:17] Alex Volkov: The one that can probably scale as well, which I was like, lower, yeah.

[00:48:21] Simon Willison: I've got a really basic question. How do you tokenize an image? Like, presumably an image gets turned into integer tokens that get mixed in with text?

[00:48:29] What? How? Like, how does that even work? And, ah, okay. Yeah,

[00:48:35] swyx: there's a paper on this. It's only about two years old. So it's still a relatively new technique, but effectively it's convolution networks that are reimagined for the vision transformer age.

[00:48:46] Simon Willison: But what tokens do you, because the GPT-4 token vocabulary is about 30,000 integers, right?

[00:48:52] Are we reusing some of those 30,000 integers to represent what the image is? Or is there another 30,000 integers that we don't see? Like, how do you even count tokens? I want tiktoken, but for images.

[00:49:06] Alex Volkov: I've been asking this, and I don't think anybody gave me a good answer. Like, how do we know the context lengths of a thing?

[00:49:11] Now that, like, images are also part of the prompt, how do you count? I never got an answer, so folks, let's stay on this, and let's give the audience an answer after we find out. I think it's very important for developers to understand how much money this is going to cost them.

[00:49:27] And what's the context length? Okay, 128k text tokens, but how many image tokens? And what do image tokens mean? Is that resolution based? Is that, like, megabytes based? We need the framework to understand this ourselves as well.
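Editor's sketch: one way to approach the "tiktoken, but for images" question is the tile-based accounting that OpenAI describes in its vision guide: a flat 85 tokens for a low-detail image, and for high detail, 85 base tokens plus 170 tokens per 512px tile after the image is resized. The function name and the handling of images that are already small (this sketch only ever shrinks, never enlarges) are assumptions for illustration, not something stated in the conversation.

```python
import math

# Figures as published in OpenAI's vision guide; treat them as assumptions
# that may change with newer models.
BASE_TOKENS = 85        # flat cost charged for every image
TOKENS_PER_TILE = 170   # extra cost per 512px tile in high-detail mode
TILE_SIZE = 512


def estimate_image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Rough token estimate for one image (hypothetical helper)."""
    if detail == "low":
        return BASE_TOKENS

    # Step 1: shrink to fit inside a 2048 x 2048 square, keeping aspect ratio.
    scale = min(1.0, 2048 / max(width, height))
    w, h = round(width * scale), round(height * scale)

    # Step 2: shrink so the shortest side is at most 768px.
    # Assumption: smaller images are left at their original size.
    scale = min(1.0, 768 / min(w, h))
    w, h = round(w * scale), round(h * scale)

    # Step 3: count the 512px tiles needed to cover the resized image.
    tiles = math.ceil(w / TILE_SIZE) * math.ceil(h / TILE_SIZE)
    return BASE_TOKENS + TOKENS_PER_TILE * tiles


if __name__ == "__main__":
    for size in [(1024, 1024), (2048, 4096)]:
        print(size, estimate_image_tokens(*size))
    print("low detail:", estimate_image_tokens(4096, 4096, detail="low"))
```

Under this accounting the answer to "resolution based or megabytes based?" would be resolution: file size does not appear anywhere, only pixel dimensions after resizing.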

[00:49:44] swyx: Yeah, I think Alessio might have to go and Simon. I know you're busy at a GitHub meeting.

[00:49:48] In person experience

[00:49:48] swyx: I've got to go in 10 minutes as well. Yeah, so I just wanted to Do some in person takes, right? A lot of people, we're going to find out a lot more online as we go about our learning journeys with OpenAI. We're just like, what was it, you know, any interesting conversations when you say in person observations?

[00:50:05] I'll volunteer mine, which is Sam Altman came out to the after party for the conference and just stood there in his hands, no bodyguard, just him, for like a few hours, and it was, it was just really impressive how much he, I guess, personally demonstrated that he cares about meeting developers.

[00:50:26] Alex Volkov: I really liked meeting everybody in the kind of the after party, whatever it was called, reception. It was very, like, buttoned up in the de Young Museum in San Francisco. It was really well organized. Actually, probably not surprising, but the whole event was extremely well organized. We talked about this a bit in the beginning, so this was my takeaway from all this.

[00:50:50] Folks got like 100 credit for an Uber because the party was not at the same place as the event where it usually is. To me personally, like, the music was too loud. I wanted to talk to people and not scream at people. This always happens for some reason, but, like, I just wanted to talk.

[00:51:07] Networking was really powerful. It was, like, a self selected event. Many people didn't get in. Like, I didn't get in until I met Logan, and Logan thankfully invited me. Thank you, Logan. It was amazing. But it was, like, a very selected event. So I actually met a few people who are working on some incredible things.

[00:51:23] I met somebody who's working on AI for education for special needs kids, for example. And he got invited by OpenAI directly because, like, he's working in Italy for all these type of things. So actually, like, meeting the people who are working around the world was for me the biggest impact.

[00:51:38] There wasn't as many as I thought there would be, and shout out to OpenAI for this. But, like, please invite me.

[00:51:47] Simon Willison: I'll back that up. Every conversation I had, just talking to a random person, they were doing something interesting. Like they clearly did a very good job of funneling people who are actively hands on building stuff into this event. That was really fun. I did actually want to, one thing I'll say, the venue itself for the main conference was a multi story car park that had been converted into an event venue.

[00:52:07] I thought it was a great idea. Great venue. I just thought it was hilarious that we were walking up ramps between floors because the best thing about multi-story car parks is that you can park cars on the roof. So the roof was where they set up the lunch, and they had a big tent up and stuff, and it was great.

[00:52:21] I, I hung out on the roof socializing and, yeah. What a, but what a fascinating thing, like a multi-story car park that's turned into a top-notch event venue. I've never seen one of those before.

[00:52:31] swyx: Alessio, on the ground there with Newton. Any founder conversations that you liked? It was, you

[00:52:37] Alessio: know, the, I think the thing, you know, Tab is like a, an office here, and they're doing one of the,

[00:52:43] swyx: Maybe you want to introduce

[00:52:44] Alessio: Tab, yeah.

[00:52:46] Yeah, it's one of your personal companions that can chat with you in real time and, for example, Avi was using it for investor pitches, so he would get notifications on his phone during a pitch and be like, hey, you forgot to mention this and whatnot. And I know, you might remember, like, there was the rumor of, like, Jony Ive working with OpenAI on a hardware project.

[00:53:06] And I think, like, this GPTs announcement kind of makes me think of, you know, maybe they're building their own hardware assistant that you can load with a bunch of GPTs and, you know, Alex just mentioned how good it was to talk to one and maybe they want to go further down in that direction. I think that would be quite interesting.

[00:53:24] But yeah, I think a lot of excitement and, you know, we just announced the, the Linux based launchpad, so we're on the side of the, of the builders. We don't think OpenAI is going to do, is going to do everything. Excited to see what people come up

[00:53:35] swyx: with. Cool so I will stitch up this recording. I actually recorded a bunch of interviews on site with a bunch of other founders as well, so I'll put that at the end of this, this chat to get perspectives from everyone.

[00:53:46] But thanks so much for jumping on with this quick call. Very, very exciting day, and I think, I think we'll all be having a lot more takes as we build with these APIs.

[00:53:55] Alex Volkov: I just want to say a quick round of thanks to everyone here, like, it's been awesome to, like, experience these changes with all of you guys.

[00:54:01] Swyx, a personal

[00:54:03] swyx: shoutout. It's been crazy.

[00:54:06] Alex Volkov: It's been crazy, but also, like, the fact that, like, we were, like, the only space live from the actual event, and, like, we got joined by, like, 200 people in the audience. Yeah, we got we got

[00:54:15] swyx: officially sanctioned as podcasters. Yeah, it was

[00:54:17] Alex Volkov: funny. Yeah, we got officially, like, the only two podcasters in the OpenAI

[00:54:22] swyx: world.

[00:54:23] If we'd gotten press passes we would've had an easier time, but yeah,

[00:54:26] Alex Volkov: maybe they would've let you with the whiteboard inside. If we had the press pass,

[00:54:30] swyx: we made it happen. But yeah, that's another thing. ChatGPT is not even one year old, right? Like, mm-hmm, the anniversary is November 30th. So we're 11 months in, a few days in.

[00:54:42] And this is the craziness that it's been. Can't imagine what it will be like in a year's time. Yep.

[00:54:49] Alex Volkov: And I think Sam Altman mentioned this on stage as well, like, in a year's time this will seem like trivial. But we've got some very exciting announcements for today. So,

[00:55:03] Simon Willison: let's keep talking about it. Honestly, I can't predict four weeks ahead, the rate

[00:55:06] swyx: things are going. It's fascinating. Cool, I probably should let you all go, but thank you so much for jumping on. Thank you everyone. Thanks, this was really fun.

[00:55:11] Part II: Spot Interviews

[00:55:11] swyx: Alright, that was part one of this very long OpenAI Dev Day episode, but I promise you it'll be worth it, because part two is some of my favorite work that I've done in audio form.

[00:55:22] So, I basically carried a microphone around, and when I ran into someone that I wanted to interview, I just paused them and asked them for five minutes. And the first is someone that we haven't yet scheduled on the pod, but we've been extremely friendly with. It's Jim Fan, everyone. Jim Fan from the landmark Voyager paper and, more recently, the Eureka paper, all of which comes out of his work at NVIDIA and advising at Stanford.

[00:55:47] So on top of actually leading a group of researchers, he's also very good on Twitter, and I think that is a very useful skill to have because you can communicate the value of your work to a wide audience, and that is something that we also aspire to do at the Latent Space pod. Don't worry. So basically just kind of hold it and then whenever you're talking just kind of hold it up.

[00:56:05] Jim Fan (Nvidia - High Level Takeaways)

[00:56:05] swyx: Sure, okay. The microphone's right here. Oh, it's on DJI? Yeah. Amazing, okay. The microphone's right here. I just talk? Yeah, just talk. So yeah, it's good to see you. Good to see you, Shawn, yeah. So great. Always wanted to get you on the podcast. And then, like, never got around to scheduling you in the studio, but since we're at events, like, this is the big one.

[00:56:21] This is the best event to have the podcast in. So thanks for having me. Yeah, yeah and I also saw you've been tweeting us some stuff. Like, what's the most interesting to you so far?

[00:56:30] Jim Fan: I think a couple of things. Like, one is kind of the economy of scale. Yeah. How cheap the GPT-4 and GPT-3 APIs have become, I think that's gonna be a game changer.

[00:56:40] So I just did a back of envelope calculation, like if you feed the entire Harry Potter books, like all seven books, into GPT-4, it's gonna cost only like $15 to read all of them, and double check. Yeah. Okay. And $45 to write all of them. And that is just crazy. And you can have GPT-4, right?

[00:56:59] It's gonna be better than 3.5. And the other thing is the GPT-4V API is also available. And if you feed all of Harry Potter's, like, you know, eight movies into it, that's gonna be like 20 hours. Frame by frame, you know, one frame per second. It's only gonna cost $180 to watch all of these movies at 360p resolution, right?
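Editor's sketch: the back-of-envelope numbers above can be reproduced in a few lines. The per-token prices are the GPT-4 Turbo launch prices ($0.01 per 1K input tokens, $0.03 per 1K output tokens); the book token count and the tokens-per-frame figure are assumptions back-solved from the totals quoted in the conversation (with the 180 read as dollars), not measured values.

```python
# Launch pricing for GPT-4 Turbo, in USD per 1,000 tokens.
INPUT_USD_PER_1K = 0.01
OUTPUT_USD_PER_1K = 0.03

# Assumption: roughly 1.5M tokens for all seven books, back-solved from the
# $15 "read" figure rather than counted with a tokenizer.
BOOK_TOKENS = 1_500_000

# Assumption: about 250 tokens per low-resolution frame, back-solved from
# the 180 total quoted for the movies.
TOKENS_PER_FRAME = 250
MOVIE_HOURS = 20
FRAMES_PER_SECOND = 1


def token_cost(tokens: int, usd_per_1k: float) -> float:
    """Cost in USD for a given number of tokens at a per-1K-token price."""
    return tokens / 1000 * usd_per_1k


read_cost = token_cost(BOOK_TOKENS, INPUT_USD_PER_1K)     # feed the books in
write_cost = token_cost(BOOK_TOKENS, OUTPUT_USD_PER_1K)   # generate the same volume

frames = MOVIE_HOURS * 3600 * FRAMES_PER_SECOND           # one frame per second
watch_cost = token_cost(frames * TOKENS_PER_FRAME, INPUT_USD_PER_1K)

if __name__ == "__main__":
    print(f"read:  ${read_cost:.2f}")
    print(f"write: ${write_cost:.2f}")
    print(f"watch: ${watch_cost:.2f} for {frames} frames")
```

The 3x gap between reading and writing falls straight out of the input versus output price, and the video figure is dominated by the frame count (72,000 frames at one per second), so sampling fewer frames would cut the cost proportionally.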

[00:57:20] So this economy of scale is crazy, and I think that's really hard for

[00:57:24] swyx: other companies to beat. Yeah. Yeah. Is it a surprise to you this... The rates at which they've been bringing down their pricing. I'm not

[00:57:31] Jim Fan: surprised. I think, you know, the pricing is gonna follow some kind of exponential line from now on.

[00:57:36] It's just gonna be exponentially cheaper as compute becomes cheaper as economy of scale is going. So that's one thing. And the second thing is, I am amazed by kind of how OpenAI is doing the integration. Right? If we look at the assistant API. It basically has all of the things that OpenAI developed in a one stop shop.

[00:57:53] So you have like code interpreter, you have, you know, stateful API, you have browsing, and it can integrate with, I suppose, all of the plugins on the OpenAI store. And then it can also switch between those, right? We have seen those demos. So yeah, the API I think it's gonna be way better and way more flexible.

[00:58:12] So that's the second thing. And the third thing is the UGC platform, right? Now everyone can build their bots and share them. You know, share not just the prompt, but actually like entire

[00:58:21] swyx: behaviors, entire GPTs. That is a huge advancement. Yeah, it's really fascinating. And I think one of the things that is interesting, this is supposed to be a dev day, but actually, like, I think the first half was not a dev

[00:58:32] focus, with low code, no code, programming with natural language. It's something they're saying a lot. And it's something you've been doing a lot as well, I've been following your work somewhat. Yes,

[00:58:42] Jim Fan: yes. I feel like it's gonna be this new programming, where we'll just use natural language, and then refine it through dialogues.

[00:58:48] And I think that is the most natural way to do programming in the future, and the GPT App Store is showing us a glimpse of it. Like you talk to a bot, and then you can refine the behavior, and the bot can ask you, like, clarification questions.

[00:59:00] swyx: That is the way. That is the right way. Exactly. The GPT creation pane you're no longer filling out a form, you know, question, answer, question, answer, question, answer.

[00:59:08] Oh, yeah. It's, you're, you're having a chat and then it prompts for you on the other pane. Yes. And I thought that was a much better way than filling out custom instructions because you don't know what you want. Yeah, exactly. Yeah, yeah. And also it

[00:59:18] Jim Fan: feels very natural and intuitive because we as humans also onboard new employees in this way, right?

[00:59:23] Like we don't send them a form, we have a dialogue with them and we tell them this is the expected behavior and they can ask follow up questions if there are details that are not clear. Yeah. So it is like just the most natural way to

[00:59:34] swyx: program. So two more questions. Like, yes. One is, they mentioned the word agents.

[00:59:39] They said, Sam said the word agents on stage. Yeah. But here they're calling it GPTs. Yeah. Do you see a big gap that they, they still need to fulfill to become a full agent? Or is this the, the new direction that we should think about? I think it is the