Scaling without Slop

We've been quiet — announcing our 2026 plans! The State of Latent Space is here.

First off, a few major announcements:

Yi Tay returns to the podcast today! If you enjoyed his insights on being a 10,000x Yolo Researcher, then you’ll love our catchup on Gemini’s IMO Gold and more.

Welcome to the 80,000 AINews subscribers! We are doubling down on Substack, making Latent Space the one email subscription to keep up on a daily (AINews), weekly (LS Pod), and broader (LS Essays) basis. You can opt-in/out as needed.

Latent Space is also turning into a podcast network! Our first new show, focused on AI For Science, will launch next week, inspired by our Chan-Zuckerberg pod! We also have a new physical space/studio at Kernel in SF!

AI Engineer is growing! The first official AIE Europe is coming to London April 8-10, and the first deadline for both Super Early Bird tix and Speaker CFPs is Jan 31st.

We are hiring across all platforms: AIE Head of Operations, AIE Marketing & Community Manager, LS Researcher/Writer (or occasional Guest Writer!)

At every AIE conference I run, I give myself the challenge of doing the shortest keynote for a “State of the Nation” type address. For AIE Code, I declared War on Slop.

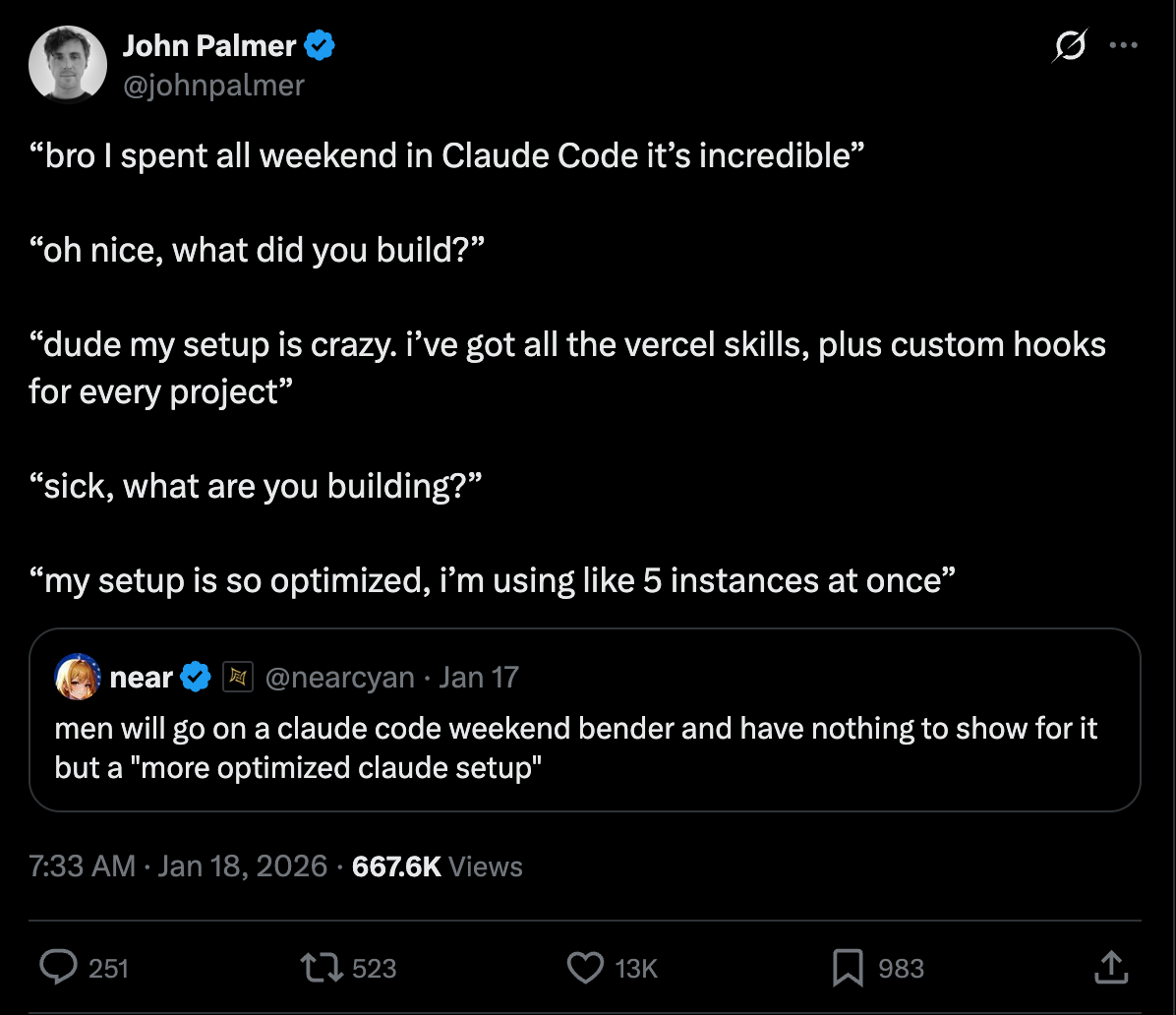

This was partially inspired by seeing the motivating factors behind products like Codemaps and Review, and partially a reaction to the “Canadian Girlfriend AI Coding” performative productivity porn that took over the timeline:

I didn’t expect Slop to then be picked up as word of the year by Merriam-Webster and the Economist and the American Dialect Society [1], but clearly the theme of fighting Slop is resonating as generative AI rips the authenticity from creation, piles up open source maintainer workload, fills up academic conference submissions, takes up 20% of YouTube videos (earning millions per year), and runs entire Instagram channels and the Apple App Store, slowly deadening the Internet.

There are a few gut reactions to Slop that are reasonable:

simply reject all AI-generated content, perhaps making exception for AI-assisted, perhaps requiring proof of human review

severely cut back on quantity to message and deliver quality

just eat the steak and learn to enjoy the slop machine

However, they all feel unsatisfying in their general defeatism and their pessimism about whether human taste can scale.

First off, I’ll reiterate the obvious point that plenty of Slop has been made by humans, both well-meaning and cynical, for centuries: AI does not have a monopoly on making Slop. However, AI does make it easy to scale thoughtless output and harder to signal intent, effort and quality, an emphasis of my recent writing retreat. If you creatively/skillfully wield AI as “a new brush”, then AI Kino is very possible.

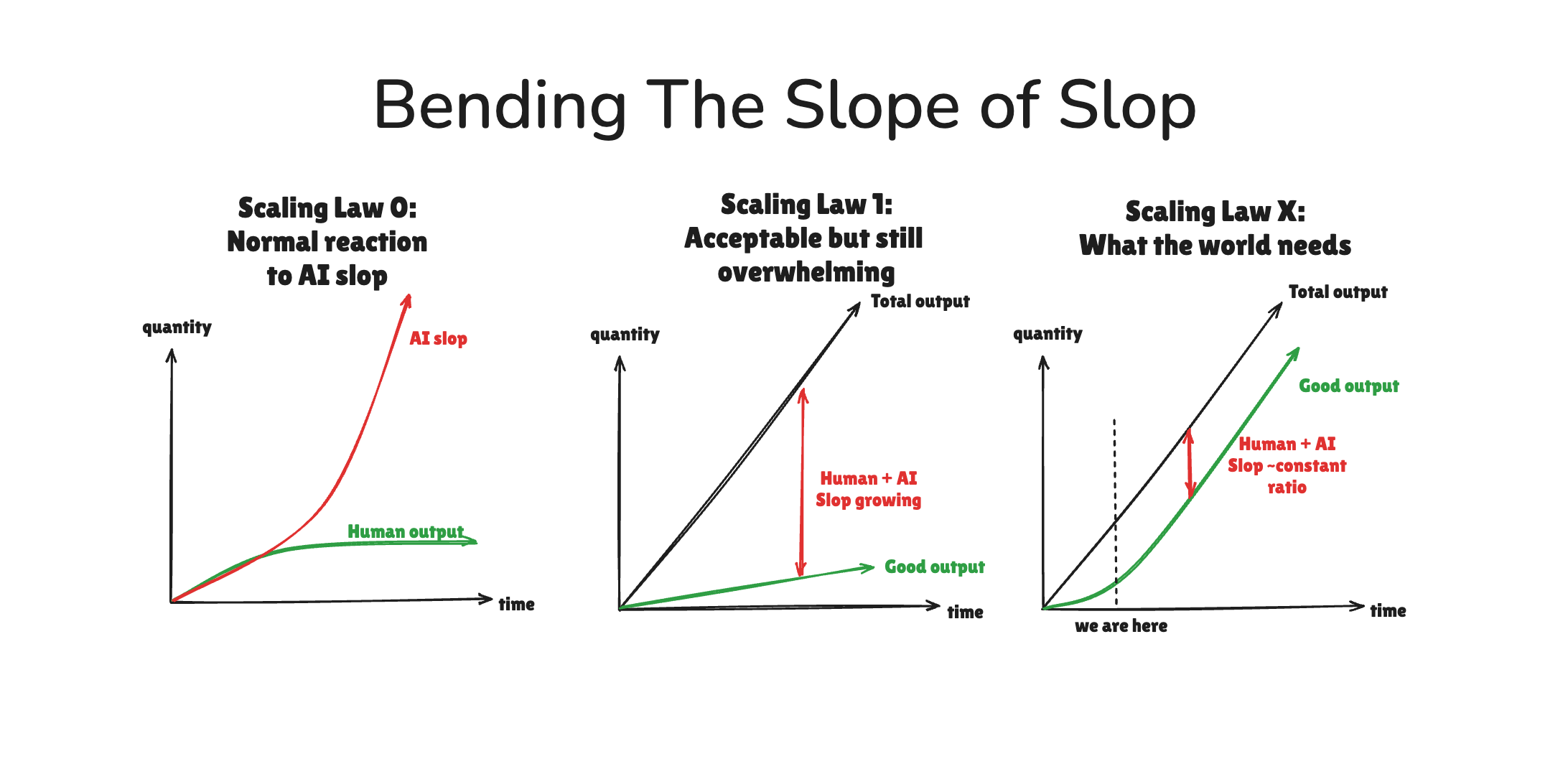

But more importantly, if your solution to AI slop basically means you cut back on your own human output, that doesn’t solve the fact that AI slop will continue to far outpace human output, and therefore simply overwhelm you and your community by sheer brute force and our own recsys’ inability to keep up with our tastes. Humanity slowly starves to death gorging on its own excrement spewed from artificial intestines.

The most important problem in media now is scaling without slop, period, whether AI-generated or entirely human-origin. Actually I lied, it’s not just a media problem: any act of creation and exercise of free will is subject to the quality-quantity tradeoff, whether you’re building a new product or texting your adult kids.

Any experienced creator will tell you that there can be very little relationship between effort and result - the thing you slaved on for a month ends up overdone and incoherent, whereas the throwaway tweet of frustration done in 3 seconds goes viral and is used as an example for decades to come. Sometimes -you- can’t tell what’s slop, sometimes one man’s slop is another man’s kino.

The central problem to solve is changing the slope of slop, not giving up on humans.

This is the problem I am working on now for both AI Engineer and Latent Space. The case studies of increasing quantity AND quality have been fascinating.

Scaling AI Engineer

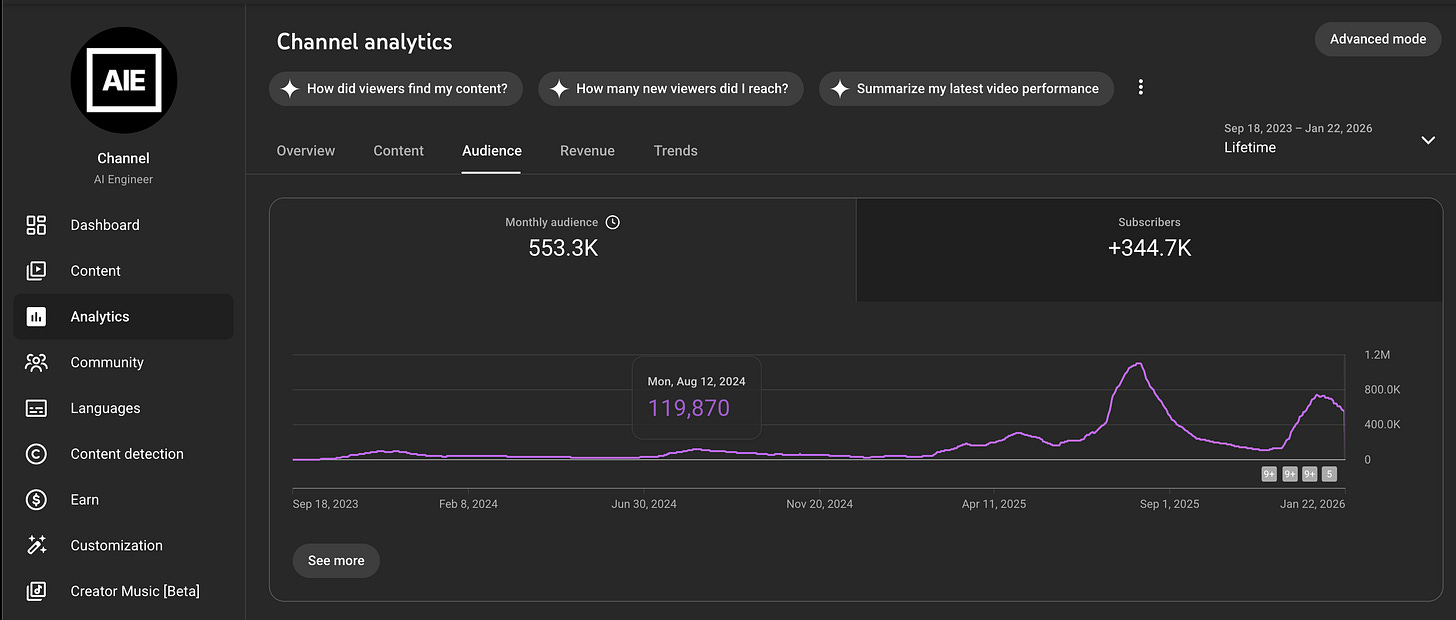

AI Engineer grew from one event a year in 2023 and 2024 to 4 (AIE NYC, AIE WF, AIE Paris, AIE CODE) in 2025, and there will be at least 7 AIEs around the world in 2026.

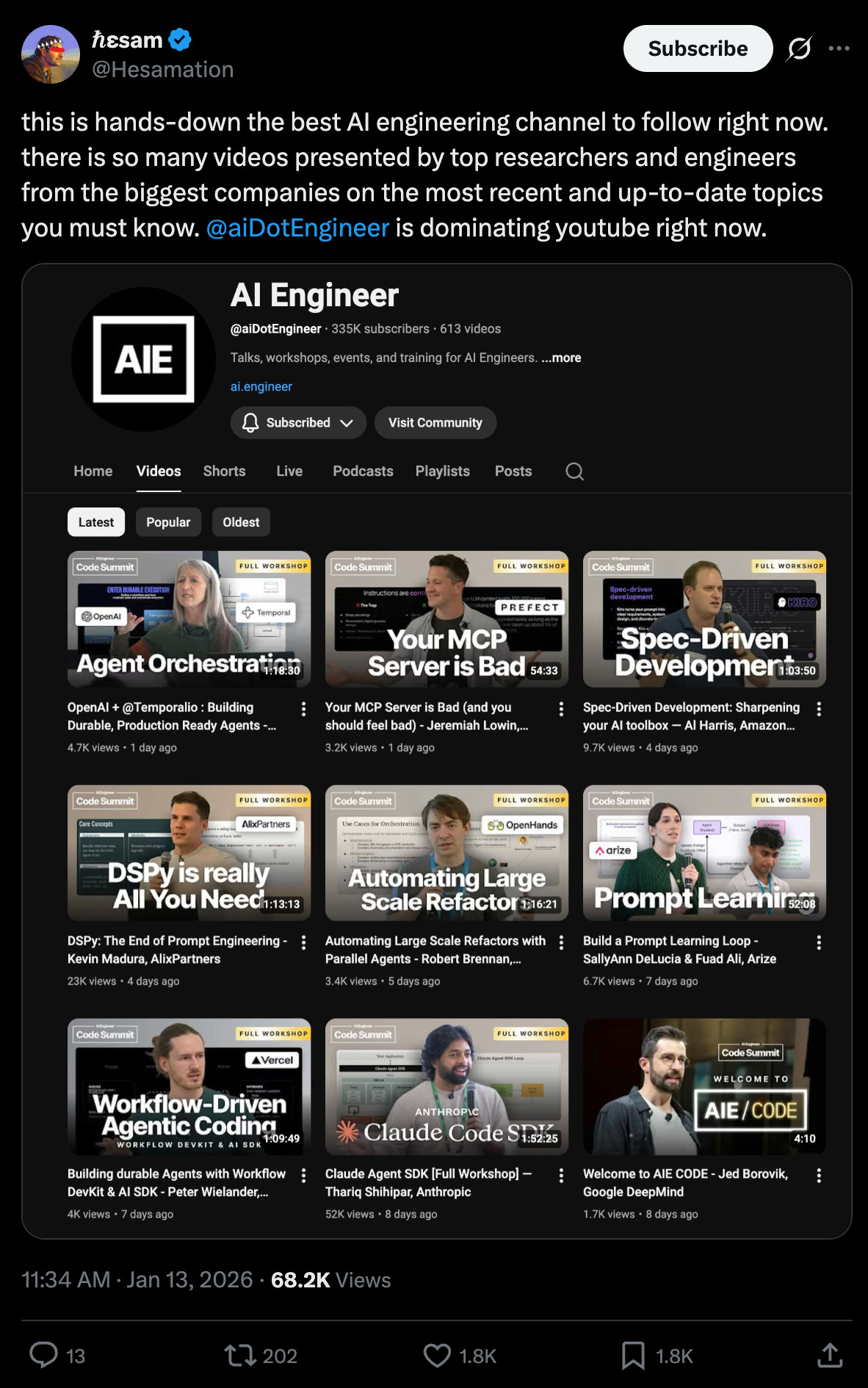

Viewership did not just 3x, but more than 10x’ed:

And anecdotally, quality has also improved:

I remember a 2024 speaker, known for speaking his mind and privately pretty cynical about AIE, coming back to me in 2025 to say that conversations and talks felt a lot more authentic and serious this time around.

I say this not as a brag, but because I think a lot of conscious and subconscious decisions contribute toward building a leading IRL and online community for serious, grounded AI discussion without resorting to fearmongering or hyperbole.

Slop has definitely crept in, but here we have kept closer to “Scaling Law X” than Scaling Law 0 and I’m happy about that.

Scaling Latent Space

Latent Space (now joined with AINews) has been a tougher beast. The value of media often fits Sonal Choksi’s McClusky Curve, flanked on the left by TBPN and on the right by Dwarkesh. We did AMAZING as a podcast - regularly ranked 30th-50th among overall US Tech podcasts in Apple, and considered by many to be a top 3 AI podcast, being featured on the GPT5 launch, and featuring conversations with Greg Brockman and Fei-Fei Li and Mark Zuckerberg and Noam Brown and Bret Taylor and Chris Lattner and the Claude Code team and more household names to come.

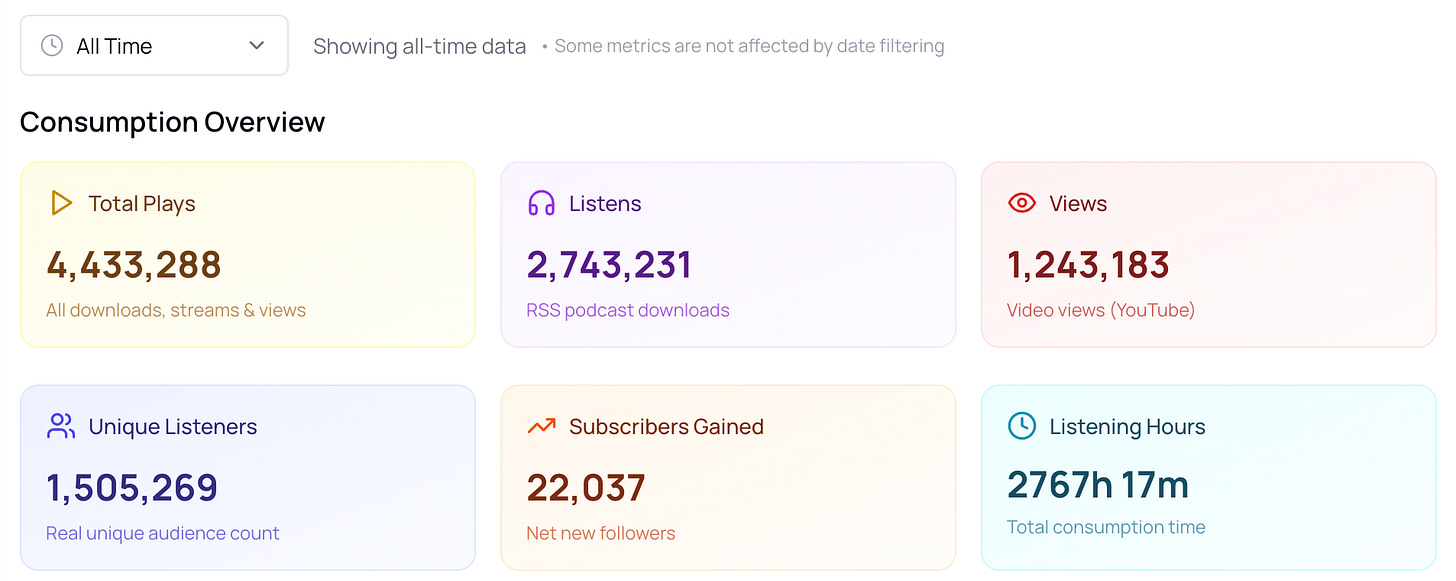

While our YouTube numbers are still wanting (pls like and subscribe! we started YouTube way, way too late in this game), audio subscribers are still incredibly strong, and Flightcast estimates us at about 1.5m all time unique listeners now.

But we fell behind on writing, evidenced by 2025’s Agent Labs Thesis far underperforming the AI Engineer Reading List (which we’ve continued to maintain, but slowly left behind as less and less frontier knowledge is published).

There’s only so much that one person can do. I am very inspired by creative networks that build a platform for multiple people to shine and contribute their talents, like Dropout TV and Morning Brew and SemiAnalysis. This is part of the movement that a16z has termed New Media, and perhaps unlike most on that list, I want to try to build a platform clearly bigger than me and hopefully bigger than the sum of its parts.

The game plan for 2026’s Latent Space is threefold:

More shows, more hosts: The 1-2 hour interview podcast format is cool but Alessio and I can only do so much. We’ll be doing more education (the booming area of AI for Science is our next focus, with new hosts), but also entertainment (AI can be fun! and AI people are REALLY FUN). Most of our new investments will go into more YouTube and video-native formats.

More formats: AINews joining Latent Space gives you a place for regular daily updates and commentary. TBA on where the future of AI News should be beyond just a newsletter… but ideas are welcome.

More writing: Writing is thinking, and good thinking powers everything great we have done with Latent Space. We will double down on this. If you’re keen to help, join us as a researcher or writer full time, or even apply to guest post (we have always done very well serving as editors for guest posts).

The Elements of Curation: Tuning High Taste

The common thread between AIE and LS is that we have to curate very well, and then scale one person’s curation to many, hopefully by crowdsourcing but also by taking audience/community feedback very seriously. The most visible output of this is “saying no a lot”, and it is a lonely job to say no to friends and to sponsors, but even that is too broad a brush. There is no “school for curators”, no Maven or Udemy course for curating. You have to have a point of view, you have to know your audience better than they know themselves, and you have to have the freedom to make mistakes and survive running experiments with a high failure rate. Fortunately, we have enough backing and support that we don’t have to couple this with any sort of business model: donations come partially from generous investors (who have been explicitly told we will never sell or sell out), but operating expenses actually come from Substack subscribers. Thank you!

We just want to Make Good Shit at Scale.

Welcome to 2026. It’s gonna be a fun ride.

[1] Where it was a runner-up last year, and coined 2 years ago, perhaps by @deepfates.
