Thanks to the almost 30k people1 who tuned in to the last episode!

Your podcast cohosts have been busy shipping:

Alessio open sourced smol-podcaster, which makes the show notes here!

swyx launched GodMode. Maybe someday the Cursor of browsers?

We’re also helping organize a Llama Finetuning Hackameetup this Saturday in anticipation of the CodeLlama release.

Lastly, more speakers were announced at AI Engineer Summit! 👀

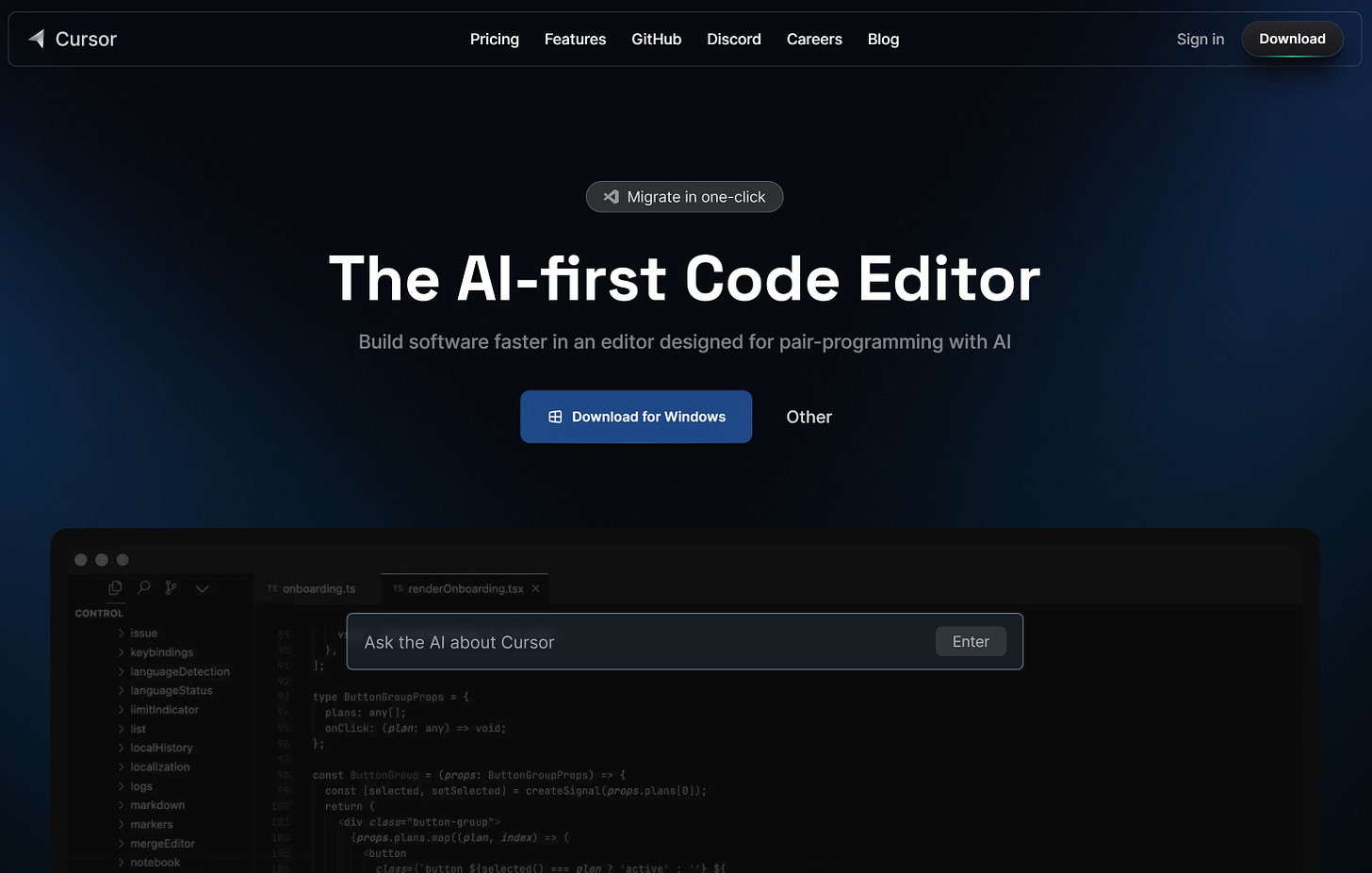

~46% of code typed through VS Code is written by Copilot2. How do we get closer to 90+%? Aman Sanger says we need a brand new AI-powered IDE to get there; and we’re excited to be the first podcast ever to tell the Cursor story.

If you haven’t heard of Cursor, you may have been living under a rock. Here are just some of the rave reviews going around in the past week alone:

“Cursor is the best product I've used in a while” - Alex MacCaw

“Someone finally put GPT into a code editor in a seamless way. It's so elegant and easy. No more copying and pasting.” - Andrew McCalip

“Coding with AI is getting insane.” - Mckay Wrigley

“This is mind blowing 🤯” - Linus Ekenstam

“Cursor + gpt4-32k = illegal levels of productivity” - Sully Omarr

“EL MEJOR EDITOR DE CÓDIGO con IA” - Carlos Santana

A decade ago, “platform risk” meant building apps on social media platforms was risky as you could get cut off from the social network3.

Today, the AI version of “platform risk” is building AI products within an existing product (like an AI extension for VS Code, or a Figma plugin). Since Copilot, a generation of VSCode plugins have launched (including Cody, Cosine, and previous guests Codeium and Codium4), only to be challenged by Copilot X itself.

A core AI Engineering thesis is that new capabilities in AI demands new innovation in AI UX (and that AI UX can actually be a viable moat). Take VS Code for example; when Github was first working on Copilot, there was actually no way to support the “ghost autocomplete” feature we all use today5. They eventually convinced the team to build it, and Copilot’s success speaks for itself.

If you’re a startup building on top of VSC today, you do not have the same access and influence on the roadmap. Your UX is limited to what they allow you to do, and often that caps your ability to successfully compete against them.

Since Cursor owns the whole IDE, they can do things you can’t (yet) do in VSCode:

Cursor’s Gameplan

Cursor is competing head to head against VS Code by forking Microsoft’s IDE and building their own AI-powered version. A few of Cursor’s unique features:

Native chat: Chat is a core piece of Cursor. Users can choose between GPT-3.5 and GPT-4 to ask questions and receive answers based on their code.

“Mentioning” files: you can easily add files into your request context by using “@”; this works both for code as well as documentation. If you want to do a change that includes multiple files, you can include them in your question to make sure the change is reflected in all of them.

Custom prompting engine: Cursor built Priompt, their custom prompting engine. As your chats go over the context window size, Priompt figures out which messages to keep in the history, which files to drop from the prompt, etc.

Moving beyond typing: while IDEs are familiar to folks as today’s interfaces, in the future Cursor hopes to have agents you can delegate tasks to. Instead of a back and forth on a new feature or bug fix, you can ask it to do the whole thing for you end to end.

After diving deep into Cursor we nerded out on model usage, training, quantization, and evaluation. There’s a ton of great content in this episode, we hope you’ll enjoy it!

As always, feedback welcome in the comments, and tag us on socials for future guest suggestions!

Show Notes

HungryHungryHippos, Hyena, etc (see our FlashAttention episode)

Aman Tweets

“Training will look like researchers/practitioners offloading large-scale training jobs to specialized “training” companies: a state of the world that resembles chip design & fabrication.” - Mosaic prediction

“The size of all code/history on Github public repos is 92TB. The size of Google's monorepo in 2015 was 86TB (of much higher quality code). If Google were willing to deploy code models trained on their own data, they'd have a noticable advantage over everyone else.” - May 2023

Timestamps

[00:00:00] Intros

[00:02:31] Developing CAD models vs coding models

[00:05:23] Deciding to build a new IDE optimized for large language models

[00:10:50] Getting early access to GPT-4 and realizing its potential for software development

[00:12:32] Rethinking the UI/UX for coding

[00:18:24] Cursor's features like system prompts and chat

[00:22:24] Tips for prompting GPT-3/4 for code generation and editing

[00:27:24] Cursor's documentation and context features

[00:29:30] The potential of coding agents like Code Interpreter

[00:38:23] Cursor's internal prompting tool Priompt

[00:40:47] The challenges of very long context lengths for models

[00:45:44] The compute costs for prompt tokens vs. completion tokens

[00:49:36] How quantization interacts with model utilization

[00:51:24] Issues with human eval for benchmarking code models

[00:53:12] Thoughts on training models vs. relying on foundation models from big providers

[00:55:34] The origin story of Cursor's parent company AnySphere

[00:56:00] Lightning Round

Transcript

Alessio: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Residence at Decibel Partners, and I'm joined by my co-host Swyx, writer and editor of Latent Space. [00:00:20]

Swyx: Hey, and today we're back in the studio again after a little break and we have Aman Sanger in the house. Hey Aman. Hey, thanks for coming. Thanks for having me. So I wanted to introduce our guests and then have you fill in the blanks. So you worked at Gamelon, Bridgewater, McKinsey, Google, and You.com, all on sort of kind of AI related things and some finance related things. You also ran your own consultancy, Abelian AI, and you graduated in CS and math from MIT recently. Worked on a few projects, including Instill, which I think we'll cover a little bit later, and most recently Cursor.so, which we'll cover for the vast majority of the podcast. But just on a personal side, what's one thing that people should know about you that, you know, might not be so obvious on LinkedIn? Oh, interesting. [00:01:01]

Aman: In a previous life, I played a lot of squash. [00:01:05]

Swyx: You were a top seed? [00:01:06]

Aman: Yeah. So in high school, I kind of competed in tournaments and most people probably don't really know what squash is. It's like tennis in many ways. It's like a racket sport, but it's indoors. You play against a wall. I guess now pickleball is all the rage with, with racket sports, but yeah, the story is I used to play tennis and then I moved to a building that had a squash court in it and then I picked it up. I loved it. And I've been playing ever since. So I competed a lot in high school, played a bunch at FIT, have not had the chance to play much here. In San Francisco, there aren't too many courts. [00:01:38]

Swyx: We can organize a squash tournament and then you'll crush it, of course. Is there anything about the athlete mentality that you take with you as a founder? [00:01:47]

Aman: Yeah, I think it can be at times a bit too much, but I'm very competitive. I really hate losing. Now I think I'll go on runs and if someone tries passing me, I won't let it happen. I'll just kick it into overdrive and maybe I'll turn the corner if I know they're going to beat me, but I can't let someone pass me when I'm running. And I think the same is true with starters, where the competitive nature, I think it in general helps motivate me and makes me, I guess, just work harder. [00:02:17]

Swyx: Yeah. Okay. Well, we'll have a bunch of competitive questions later, but we'll go over the timeline. [00:02:22]

Alessio: Let's jump into how you got to Cursor. So in August 2022, you launched something called Instill. Can you talk a little bit about that? [00:02:31]

Aman: Yeah, and maybe before I go into Instill, I should talk about what I was even doing before that, because Instill was actually a very brief foray from what I was doing with my original co-founder, Michael. So we had both actually gone to the same high school together, gone to MIT together. And then after graduating, we knew we wanted to start something. And in June, what we were working on was also called Cursor, but very different. We basically were very, very fanatical users of Copilot. We loved it. And we had a little bit of experience with computer-aided design or CAD software. A lot of our friends, in fact, were mechanical engineers. And we'd heard a lot about how tedious it was to just design these parts and software like SOLIDWORKS and whatnot. It was pretty obvious to us that if you could train a transformer on the task of predicting the next token, not just for code, but for CAD, then you could get a really useful product that could speed up mechanical engineering. So that's actually what we'd worked on up until Instill, even a little bit after Instill. And yeah, I can go into more detail about that. It was pretty interesting. That's probably how, despite these days doing less stuff with model training than in the past. For that, it was all just kind of rolling our own models from scratch, a lot of training, a lot of inference. [00:03:48]

Alessio: I'm always curious to hear about what made you interested in that. Obviously, you've been at the forefront of a lot of this AI work. Why was that the most interesting thing to you? Did you think there were not as many people going after that? Did you think you had a unique insight into it? Because we got a lot of people listening that want to be founders and want to figure out how to make that decision. [00:04:09]

Swyx: Yeah. [00:04:10]

Aman: First off, I've always been incredibly fascinated by AI. The first time I originally learned how to program, actually, because I'd seen the results from ImageNet and I'd heard deep learning, and that just sounded insanely cool to me. And so my first programming project was building and training a neural network in Java, because that was the only language I knew from my AP Computer Science class. But ever since then, everything I've done has been involving ML, AI. The reason I wanted to, I guess, found a company is, first off, I had been working with Michael on a couple of other things. We'd done an AI consultancy in the past. We worked really well together and really just enjoyed working on stuff on our own. With CAD, we were doing a little bit of ideation, and I think we were quite worried about competition in a lot of other areas. I think that worry has definitely subsided a little bit with what we're working on now, obviously. A lot of competition in the coding space. But it seemed like the kind of thing where not a lot of eyes were on this. It seemed very technically possible, at least at the time. And the market was pretty sizable, if you looked into it. So it was both a really interesting technical problem. And then if you just tried to analyze the space, it seemed like a good idea. [00:05:23]

Alessio: How do you decide to move off of it? That's another important answer as a founder. [00:05:28]

Aman: I think there are a few key things that we did not take into account when we were working on this. One was if you look at the original Codex paper, our assumption was this is the model that powers Copilot. It was trained on 100 billion tokens, or it was something like 50 billion tokens of Python. And one interesting insight from it was that you actually get no transfer benefits from the pre-trained model on text to code. So that means they took GBD3, and for the smaller models, for the models that weren't trained in all the Python data, there were some benefits where GBD3 transferred really well faster. But then for the final Codex model, it turns out that there were no transfer benefits, meaning you just took a model, you trained it from scratch on those 100 billion tokens of Python code, it would do just as well as the GBD3 12 billion model that was fine-tuned. The issue was that it was only true for GBD3 and 100 billion tokens of Python code. These days, I mean, the jury's still out on this, but it seems pretty clear that the benefits from learning language are quite helpful with code. I guess that kind of goes into the issues with CAD, where one, you're dealing with much less data than code. If you assume, first off, that 50 billion, 100 billion tokens is all you need, then maybe with like 10x less, you could get a pretty useful model. In reality, Copilot today is powered by probably trillions of tokens of code, as well as text. And when you're dealing with, at most, from scraping every single bit of CAD data, you can find 10 billion tokens. It's just not enough to train a useful model. We tried scaling, and no matter what kinds of regularization techniques we used, we just couldn't get it past a few billion parameters without overfitting. That was the big thing. And then the other is that there's no transfer. If you try to test these models today, and even with GBD4, there's a prompt that I like to use, which is good for testing like 3.5 versus 4 if you don't know which one's behind the scenes. But even 4 sometimes struggles with it. And the prompt is you kind of lay out, I think it's like a famous kind of Gary Marcus prompt as well, where you lay out a bunch of kind of cubes on a table, right? And you describe it, and as you increase the complexity, you know, 3.5 drops out, you increase the complexity more, 4 drops off. But it's clear that these models are not that good at spatial reasoning, and that's exactly what's needed for CAD. [00:07:52]

Swyx: Oh, yeah, that's right. [00:07:54]

Aman: What you want to do is if I were to design this table with CAD in front of you, I would first draw a rectangle, then I would do an extrusion operation, which would basically take the rectangle and then extend it orthogonal to the plane, such that it's like a volume, [00:08:14]

Swyx: right? [00:08:15]

Aman: And then the model has to realize that, okay, the shape now that exists is this structure here, this table. And then the really difficult thing is for other operations, it'll need to point to the constructed geometry that was built. And basically, the model effectively to work well, it needs to kind of, in its mind, imagine this 3D structure. And the models are not good at that. If you try fine tuning code models on this task, or language models, they're just not going to transfer well at all. [00:08:44]

Alessio: Do you think in like two, three years, there will be a good AI-powered CAD software? [00:08:47]

Aman: Yeah, my perspective now is that I think the best way here is probably redesigning the entire system. One other big pain point was we tried to build plugins with all the major pieces of CAD software, like SolidWorks, Onshape, and so on and so forth. And if you think it's hard to build a plugin for some of the older IDEs, you've not seen these pieces of software. And so I think even if you got a good model, it might be really hard to actually get distribution and create a good plugin that works. So it feels like with the advancements you have in kind of text to images, and there's some new companies kind of doing stuff with text to 3D, it feels like the reasonable approach is actually just scrap the way that people are doing CAD right now. And I suspect a company or some companies will come around and do this quite well. [00:09:37]

Swyx: That's really good insight. And we have more sort of general LLM products thoughts to ask you at the end. We wanted to get into Cursor, since that is your primary product right now. In January 2023, you announced it to the world. Maybe take us into the, I guess, idea maze leading up to Cursor. [00:09:54]

Aman: Yeah, I guess it still was one kind of brief, brief pivot period where we tried doing text to images. The reason we decided against it was that I don't think we're the founders for that kind of company. We learned this from CAD, and we strongly believe this now that it is much better to be a user of the product you're building. And we just weren't big users of any of the text to image tools. So it was around December where we managed to actually get early access to GPT-4. And before then, we had played around a little bit with using earlier versions of 3.5 on writing code. And we kind of given up. It just seemed like if you looked at Text DaVinci 2 or Code DaVinci 2, those older 3.5 variants, they just couldn't really do anything meaningful. But then we opened up the playground, started copy pasting code into there. And it was ridiculous. This is before everyone started using human. [00:10:50]

Swyx: Did you use the early version? [00:10:52]

Aman: Yes. So, okay. [00:10:54]

Swyx: So, it was an earlier version. The unhinged raw. [00:10:56]

Aman: Oh, it was still, no, it was still, it wasn't, yeah, it was still very safe. But before people started using, human eval was the thing, but before everyone started talking about it and knowing about it, we kind of pasted it in and it got 85%. And we were just like, wow, best open source model at the time got 30%. And Code DaVinci 2 got something like 47%. And yeah, GPT-4 today, it gets about the same score. And so we then started, you know, writing code in there, just copying and pasting random pieces of code from whatever kind of things we were testing and developing. We found that it was not just good at creating net new things, but refactoring code, editing code, helping you debug kind of every single aspect of software development felt so different with these models. And then we kind of, in our heads, just plotted out the future. And this is GPT-4, like what happens when you have 4.5, GPT-5? These models are just going to get better and better and better at programming. And the future is probably not going to be more and more things that you tab enter for autocomplete. I think that's a very useful tool. We use Copilot every day. We find it quite useful, but you can't have a world in which language models are able to produce 90%, 95% of the code, and it still follows that form factor. I think you have to redesign the entire way, the entire UX of writing software. And that was our take with Cursor, where you need to own the full IDE and completely redesign the flow of producing software and just doing software development in general. [00:12:32]

Swyx: Those are big statements that we need to dig into a little bit more. I want to backtrace a little bit. So you got early access to GPT-4. That actually means that you were backed by OpenAI, you joined OpenAI Fund before you were Cursor. [00:12:46]

Aman: Yeah, basically. Kind of. [00:12:48]

Swyx: Oh, okay. Because I'm trying to get the chronology and I assumed you were, they funded you because of Cursor. [00:12:52]

Aman: Yeah, so OpenAI is this program Converge. That was the program we participated in. And through that, the main thing was early access to on-release models that we got to play with. Obviously, none of this went to production. None of this could go into production. It was just kind of a sneak peek of GPT-4. And so, yeah, before we actually built out Cursor, we didn't take money from OpenAI, but we were a part of this program. [00:13:14]

Swyx: Got it. Yeah. And then you also mentioned one more thing, which was interesting. You still use Copilot, but you also use Cursor. Yes. You also mentioned that Copilot is probably trained on trillions of tokens, which means that's extensive training since the original Codex. That's my guess. [00:13:30]

Aman: I mean, if you look at the stack, for example, right? It's what? One to two trillion tokens? Something around that. I'm very skeptical that Copilot is training on less, especially with all the lawsuits you see with whether or not it's quote-unquote fair use. [00:13:43]

Swyx: So yeah, my guess is trillions of tokens. [00:13:44]

Aman: I don't really know. But yeah, I'm sure if you did the math on how much public code there is in GitHub, it's almost certainly the trillions. [00:13:52]

Swyx: One of the reasons I harp on this is one of our pet themes is tracking the dataset to parameter ratio. And Copilot cannot be that big because it returns relatively quickly. So it's going to be in the low billions, right? So how do you do trillions of tokens to the low billions? That's interesting. Yeah. [00:14:12]

Aman: I think I have some thoughts on this because there's the whole thing with chinchilla scaling and then people are now saying, oh, chinchilla scaling doesn't matter because of inference. But Copilot could be a mixture of experts. That's one other speculation. I don't know if that's true. I mean, it probably wasn't the case at least a year or two ago. My guess is it's probably a small model that's very over-trained. From what I've heard, there are also lots of tricks you can do with caching where even if the model is quite big, it doesn't take, it effectively takes no time to ingest the entire prompt. Yeah. [00:14:46]

Swyx: Semantic caching is what they are calling it, right? I guess if it roughly embeds to the same thing, just return the same thing. [00:14:50]

Aman: I think it's partially that, right? Where let's say the suffix or the code before where your cursor is has changed slightly. They might not actually go ahead and use a different... [00:15:02]

Swyx: That seems dangerous for code. [00:15:03]

Aman: It does seem a little dangerous, but it gives you this like incredibly snappy response. And the other thing is the KV cache, right? Where you can just, I don't think there's any open source framework that does this right now, but what you can do is if you've already computed something over the KV cache, then you can just... [00:15:19]

Swyx: This is the attention KV cache for people following. [00:15:21]

Aman: So this is the attention KV cache, right? And if you've already computed all the keys and values, you can just store that in memory and then load that back up in the GPU and you don't need to process the prompt again. And I speculate they're doing something like that behind the scenes. [00:15:35]

Swyx: That's a lot of memory. That's a lot of story. It is. [00:15:38]

Aman: Well, unless they're using something like multi-query attention or... [00:15:41]

Swyx: Yeah. We'll talk about that in your Llama 2 piece. And then the final big opinion that you drop in there was you must write your own IDE as opposed to write a VS Code extension, which there's plenty of them out there. SourceGraph is doing one and I've been working closely with Morph, which just put out Rift. So this is obviously a big undertaking. Maybe explain more a little bit about why build your own IDE. Yeah. [00:16:05]

Aman: The reason we decided to do this is I think in the future, today, what Cursor can provide and what any of these tools can provide isn't that much different than, I guess, what you get in VS Code. But it was more of a long-term decision where in the long term, you're going to need to design just a very different UX that the extensions don't give you. One story we'd heard is that with Copilot, in order to actually get the multi-line ghost text implemented, it wasn't actually a part of the extension. I think the team at GitHub had to call up VS Code and have them make a change to the source in order for that extension API to be enabled. That's what allows for multi-line ghost text completion. And this is scary. If you look today, there are other things that VS Code in their source code has enabled as APIs that are just closed off to everyone but Copilot. So I think there's this fundamental platform risk where you're competing with the incumbent that owns the platform you're building on. And we thought it would just not really be tenable in that sense. And then the other thing is if you want to do other kind of fancy things. So one example of a feature we're kind of building right now is instead of just... So Copilot is great for completing the next line, completing the next few lines, but what if you wanted to do a kind of sort of edit, where instead of just completing this line, it changes the line above or delete something. There's no way that you can do something like that in VS Code, but we have the UI for this that we've kind of built out in Cursor. We're currently training models in order to get it to work well. But again, this is a feature that we think once we get it to work, will be quite useful. Could be on par with Copilot level in terms of usefulness. And it's just fundamentally impossible unless you own the IDE. There are a lot of other ones like those that we're kind of cooking up. And then there are small things. I do think like in terms of inline edits, which means inside the editor, you can press command K in Cursor and then ask for some kind of modification of the code or ask for a generation of the code. And I do think we have probably the best UX for that because if you look at what someone like Sourcegraph does, I mean, Sourcegraph code is a great product, but they basically have to use the GitHub pull request comment feature in order to do it. And I think like these paper cuts kind of add up over time. [00:18:24]

Swyx: They do. And it's very impressive how quickly you can try it out, you know, obviously encourage everyone listening to try out Cursor. And the download is really quick. The binary is super small. And then when you spin it up, it boots up really fast. And it's just a text file that guides you through the tutorial. It's really, it's really great. [00:18:39]

Alessio: I was using it today. Actually I will open right now. The first thing I like, you guys have like bring your own keys. So that's like one of the things that I don't see in enough products, like bring your own API key instead of like sign up for an account and do all of that. [00:18:54]

Swyx: Well, so like you have to trust them that they won't. [00:18:56]

Alessio: Look at this guy. [00:18:59]

Swyx: I just wonder if like OpenAI could do one more thing, which is just, you know, do a limit, the spend limit per key. So like that leaves space for like other companies to come in and do that. But I mean, OpenAI could just build it tomorrow. [00:19:10]

Alessio: I saw Logan tweeted about whether or not it would be interesting to have per key billing. [00:19:15]

Swyx: I mean, I think that would be. They're clearly thinking about it. [00:19:17]

Aman: Yeah. They have more important things like GPD 4.5. We can talk about that one. [00:19:20]

Swyx: Yes. [00:19:21]

Alessio: Let's talk a bit about what you do. So first of all, unlike some of the other tools, you guys have like a system prompt kind of thing, which are like rules for AI. What was like the decision behind that? Did you see people being frustrated with always having to repeat the same thing in the prompt? [00:19:38]

Aman: Yeah. The problem was for encoding some small rules that the model will tend to get wrong. So for example, we use Solid instead of react. [00:19:46]

Aman: Solid is just another reactive UI framework. It's a decent bit faster. And my co-founders know a lot more about the details in this than I do. But like the other really nice benefit is that with the VS code fork that we're using, you can kind of inject solid into multiple routes. While react is meant to be kind of like it takes over the very root of the entire DOM. Solid instead, you can inject it inside of like multiple HTML components. It's much more performant that way. And so yeah, we use solid because of that. And then the issue is every time you create a TSX file, write a component, GPD 4 by default will assume it's react, right? And so it'll get the code wrong. And so just encoding rules like that are pretty helpful on the side of that problem. For some of our users who are less familiar with English too, it's helpful to kind of add a prompt to say describe this in whatever language they're most comfortable with. [00:20:45]

Swyx: And so for those who don't know, you primarily, the main model for most people is GPT 3.5 and pro users can use GPT 4. You're prompting GPT 3.5 with these system prompts first. Any other tips apart from your company specific ones, apart from the English as a second language ones, how do you prompt GPT 3 or 4 for code? [00:21:05]

Aman: So this is interesting because I think in general, these models are good at just producing net new code or rewriting code from scratch. The thing that they're not great at is producing edits or modifications. So producing a diff is incredibly painful. And I'm sure you guys may have encountered this if doing stuff with agents, but they just get line numbers wrong pretty often. And when you're producing a diff, you know, it's fewer tokens of compute. And there's, there's some theories that like, you know, the more tokens of compute you kind of use up, the more the model is kind of expending on thinking, thinking, yeah, chain of thought. That's one thing we've kind of struggled with. And so that takes probably chaining to get it to work well, where one kind of technique we do is we have GPT 4 kind of propose a draft PR and then we have 3.5 go and kind of heal a draft diff and then we have 3.5 go and go and heal those changes. So you'll have to do things like this in order to get it work around those limitations with edits. In terms of general code writing, I think with 4, it's just, it's been super, super straightforward. 4 is fantastic. 3.5 would strongly recommend using the Azure model because there you get access to completions, meaning you can put kind of words in GPT 3.5's mouth and let it finish it. Kind of like what you can do with Claude. And that's really helpful. [00:22:24]

Swyx: I always assumed that was going away as a API because OpenAI is like clearly not interested in maintaining that. I mean, they're straight up deprecating it now. Yeah. [00:22:33]

Aman: It's a little frustrating because I think it's really useful for code, right? Because when you can do stuff in the middle of the line, it's impossible to do that with the chat format. But with the completion format, it becomes trivial. [00:22:46]

Swyx: So one thing I learned from working with Jesse on GPT 4 OpenAI, he always asked GPT to comment your code before writing the code. And that's the chain of thought for code, right? So when I ask you for code, give me a fully commented code with only a brief explanation on how it works, bias towards the most efficient solution and offer an alternative implementation if it fits. If it's unclear what environment or library versions I'm working with that might significantly change your answer, please ask me to clarify. That's my custom instructions right now for code. And I'm just like, hey, we should come together as a community and just share these custom instructions or system prompts. Yeah. [00:23:18]

Aman: When you get it to be more verbose, I do worry a bit in terms of UX because more tokens means it takes a lot longer to get to the answer. And then it's also just, I don't want to read a massive answer. I just often want the answer immediately, or I just want kind of a short block of code to answer that. That is a trade off you kind of will have to deal with. And the same thing with diffs, right? Where the diffs are going to be so much faster if you get them to work, but it's just going to result in lower quality edits. [00:23:47]

Alessio: One nice thing you do in the chat is actually remove some of the code you don't touch. So I was using it to make some changes to the code base and in each function, it would say like add a comment with existing code and then tell you just the stuff to change and the stuff to add to it, which kind of frustrates me with gbt4 sometimes. It just re-gives you the whole function definition instead of just that. I noticed that in the chat, you can now apply change and put it into the code if you don't start the conversation from the file itself. Why is that so hard? Like so many products have it. Is it actually hard or is it just like a UX decision to have you? [00:24:24]

Aman: So there are two ways of doing that, right? So when you say apply change, do you mean you select a region in the code, press the button and then it makes it just like, makes it in? [00:24:33]

Alessio: Right here, it told me to like add these three lines of Python and I'm like, I don't want to copy paste them. You know, I did it, but it would be good to just do. [00:24:40]

Aman: So if it just makes the change for you. Yeah, this is something we're going to be adding this week. So yeah, this is definitely like something a lot of users have asked for and it should be reasonably straightforward to do. I think the issues we want to use for sparingly because of how expensive it is and 3.5 actually kind of struggles with this. [00:24:59]

Swyx: Interesting. [00:25:00]

Alessio: And then I noticed, so you can chat either with or without context. So with context, you pass it parts of your code base without, you don't. Every time it loads the license file. So is there anything that you're working on to make sure that like you don't have like license infringement and stuff like that, or is it just like the model for some reason thinks the license file is really important? [00:25:22]

Aman: Yeah, right now it probably uses vanilla embeddings. We're working on a couple of interesting techniques for much better retrieval. One of them is basically fine tuning a model to kind of memorize a code base. So there was a paper that came out a little while ago from Google, which is called documents or it's called transformers as a differentiable search index. The idea here is you train a transformer on a code base or you train it on a corpus of documents in order to basically directly answer questions about which document is relevant given the question. So the mapping would be some query, some question, and in this case, a question about a piece of code. And then the model would directly output the not just file, but let's say the actual function or the class that solves it. It wouldn't output all the code for it, but it only just output like the symbol that corresponds to it. And we've seen some initially promising results with this direction. If you look at the original paper and then there are some follow on work, it actually does a lot better than very old school retrieval techniques like BM25 and even embedding based techniques. And so this is an approach we're experimenting with and we think it could prove quite helpful. The other direction is just improving embeddings. If you looked at the recent paper by, I think it was Alibaba. So there was a recent model. If you do the math, it costs them $1,000, less than $1,000 to train this thing. And it beats OpenAI on non-code related tasks, sadly non-code related. OpenAI still kind of holds the crown for code related embeddings. But we think there's some promise in potentially training our own embeddings and then fine tuning it on particular code bases so it performs better there. So these are both directions. We're kind of independently exploring to improve the performance of retrieval. But in the short term, we do have the ability to use kind of re-rankers and more kind of advanced tuning. So if you look in the chat, I think there may be a button you can click which lets you enable re-rankers, which should improve the performance a decent bit. [00:27:24]

Swyx: Awesome. [00:27:25]

Alessio: Anything else in the product that we're missing? We have inline generation and inline question asking to the model. You have the chat interface on the right. Yeah. [00:27:36]

Aman: So one thing that our users have found quite helpful is being able to add files or add documentation. So if you want to add Next.js docs, the most recent docs, you just do add Next.js in the chat or in command K and you'll be able to then basically get that information in your context. We have a lot of features that will be coming up quite soon. One that we're quite excited about is basically code interpreter style mode of using the chat. And so I don't mean that, I guess, in the traditional sense of code interpreter. But code interpreter is probably the one example of, as far as I know, the one example of an agent that works really well, that has some sort of kind of product market fit. And I think the reason it works super well is because when you try to get agents to do some massive task, I don't know, many people who like reviewing PRs or reviewing large diffs, it's much more fun to kind of be in flow. And I think the way that the code interpreter is able to deal with this is it breaks it down to these kind of small units that are very auditable and understandable. When you ask the model to produce a graph, you just see the graph and then you can kind of tell more or less it's wrong. And then you can go and see the code and the code's very understandable. So I think it's pretty important to kind of have the agent do these very small, discrete units and then show the output in a way that's very easy for the user to understand and then go in and fix. And so we're building a kind of flow like that in the chat that should be coming out in the next two weeks, which we're very excited by, because we've done a bunch of experimental stuff with agents. And the big thing has always been this problem where it just produces a bunch of code and it's just so hard to tell whether or not it's correct or not. It's less efficient because it'll end up having some bugs. And then it would have been better if the user just went and wrote all of it themselves. [00:29:30]

Swyx: There's one approach with a former guest of ours, Itamar, on Codium, whose essentially approach is you need to develop the spec, the tests, and the source code in harmony kind of together. Well, the spec is the prompt, and then the spec could generate a test or a spec could generate code. And the only way to validate the code is to run it with tests, is kind of his analysis of what the agent space, what the code agent space may look like. [00:29:54]

Aman: I think tests are pretty promising a direction. If you have a really, really rigorous set of tests where you can completely confirm whether or not the agent has done the right thing, I think that solves it. But I think it's only one part of the overall puzzle here. I do think you're going to want the model to... Like the issue is, it's really kind of painful to go and write this massive, massive prompt describing everything. I want to be able to kind of do it in flow and just see a change, then go step by step from there. I think that's just a more fun way of doing it. I think the more fun and more easy to use kind of product will win, assuming the capabilities are about equal. So that's kind of our bet here. Yeah, that's great. [00:30:34]

Swyx: Have you thought about like, so you said you can add docs, which is really cool. And I've thought about this before, but I always get hung up on versioning. You just choose to not care about it and just embed the most current docs? Yes, we embed the most current docs. [00:30:46]

Aman: You can add whatever docs you want, if you just have a URL. You can paste the URL for the docs in. [00:30:53]

Swyx: You give a crawler, yeah. [00:30:54]

Aman: We crawl it in the background and embed it. And so you can have a custom, basically a custom version or whatever version you use. It's stored locally for you. What kind of crawl diff? [00:31:05]

Swyx: Like if you've just written... Yeah, that means you've written a search engine, kind of. [00:31:10]

Aman: It's very, very basic. Docs are very, very easy to crawl relative to other things because it's like, they're all like this kind of sort of markdown-like format. [00:31:19]

Swyx: Yeah. [00:31:20]

Aman: Definitely have not written a crawler for the entire net. [00:31:23]

Swyx: The other thing on Code Interpreter, we've also done an episode on that. I'm very excited about it. I think it's GPT 4.5, you know, because it's GPT 4 that has been fine-tuned on more code. Yeah. Plus it has inference time capabilities that you cannot do in the traditional LLM setting. Anyway, the most important thing about GPT 4 is that it has the sandbox. So the main question for you is, are you going to run the sandbox in your environment or do you want to run it on our local machine since you have access to that too? Yeah, I think we want to be very careful with this. [00:31:52]

Aman: You don't want to do sudo rm-rm star or something. Our plan is to run it on the local machine, but always kind of prompting the user whether or not they want it. I think if we want to do things where the agent takes many... So for the Code Interpreter style thing, the great thing is because you're breaking it down to these units, you can kind of batch together a bunch of commands at each step, just kind of ask the user because they're always kind of watching. For agents that are running completely in the background, I think there you probably will need to have some kind of contained environment where it's safe for agents to execute arbitrary code. One pretty bad attack is if one team wanted to, let's say, prompt inject the model, they could just kind of in a piece of code, just like have a comment that said something like, When you're doing this kind of edit, you should do rm-rf or do something really, really dangerous. And then the issue is if an agent is kind of running in the background, and then it does that, and it grabs that piece of information, and then it gets actually successfully prompt injected, it'll just execute that thing. The same actually may be true with documentation, where someone malicious, if they had access to some piece of documentation that other people use, could try to prompt inject agents that are then going and running code and running terminal commands. [00:33:06]

Alessio: Today, people just hijack npm packages. [00:33:09]

Swyx: Yeah, there'll be more of that, I'm sure, shenanigans, as they call it. But yeah, I think probably the safest way is to have sandboxes in the cloud. And yeah, I've been calling this the sort of the agent cloud phenomenon. I think Fly, IO, Modal, and E2B are in that space already. And then I think Repl.it is exploring it. It'd be interesting for you guys to get in that game. I have trouble articulating what's different about an agent cloud versus a typical serverless sandbox thing that you can spin up. Basically, I think for people to, if agent cloud is a real category, we have to identify what kinds of feedback do we want to give the AI that's different to a human? That's the extent of my thoughts on what this would take. [00:33:52]

Aman: I think the key thing that not enough people are probably doing is giving the AI access to a lot more tools. So the classic example I like to bring up is, if you look at the old kind of alpha code model, which went and got 50% on some programming contest, a competition, 50th percentile of pretty good programmers, right? This was a base model that basically got, I think, something around 28% on human eval. And they use this interesting inference strategy of having the model generate a bunch of test cases, and then running the test cases, seeing which one passed. They use some other, there's some other details there where they do clustering and whatever. But the key thing is kind of letting the model generate tests, run the tests on all the outputs that it's generated. And that brings a 28% code forces model to 50th percentile. Gbd4, you just add a very basic prompt, please complete this Python function, and it gets 85%, 87% on human eval. Now who knows how tainted that benchmark is? But assuming it's reasonable, like what score do you think gbd4, the same kind of inference strategy as alpha code would get on that benchmark? It would do really well. And then gbd4 is at this level where they can actually not just run the test and like binary yes or no, use that answer, but it would see the results of the test and be able to modify the code or the test base in those. And so I think just like, that's just one tool. The other tools you could have access to would be language servers. So this is a great thing with VS Code, where VS Code kind of invented the language server or the language server protocol. And so as a result, when working with a VS Code fork, we kind of have access to every single part of the language server protocol, which means we can go to definition, get all the symbols in your entire workspace, kind of everything you do in a modern IDE. And what we've been working on is kind of giving these models access to those tools. And that like dramatically improves performance, right? Because the way that humans usually will search for something is they'll kind of click around, go to definition, read some code, do all that. But you use the tools in the IDE to search for things more efficiently. And if you're just trying to have a model, just do a brute force kind of semantic search and get the answer from that. I think it's not going to work nearly as well as kind of an agent that's able to use those tools. [00:36:10]

Swyx: Awesome. [00:36:11]

Alessio: And you guys are growing the team right now? [00:36:14]

Aman: Yes, we are. So we are currently five people based in SF and we're looking to hire engineers and designers. We think there's a lot of interesting work that we're doing that's left to be done. So some of it involves model training, kind of training some open source models for things like embeddings or areas where it perhaps is too expensive or not or too slow to use open AI. And then lots of interesting things with pushing these models to kind of the boundaries. So getting GPT-4 to work really well in this kind of agent loop in a way that's really in flow and intuitive for users to use. So yeah, I think lots of exciting work. [00:36:53]

Swyx: Cool. And then maybe to sketch out a little bit more about the company and then we'll zoom out to just general LLM observations. You're also working on a prompt tooling thing called Priompt? Yeah. [00:37:04]

Aman: So this is just an internal tool that we use. It's called Priompt. And we built this because we didn't really find a good way of solving for the problem of when you have a variable number of kind of inputs that you want to stuff into the prompt and you have like a fixed length prompt, right? You can only use 4096 tokens. How do you encode for rules and how to properly kind of order the inputs that go into it? And Priompt or priority prompting are intermediary solution for this, where you can kind of encode very custom rules into how you build up the prompt based on how, I guess, overflowing it is, right? So let's say I have a bunch of previous chat messages and then I also have the code from the current file. So maybe what you want to do is you want some rules where if everything can fit in, you put it all in. But then you start by like first removing like all the old chat messages. Then once it gets to a certain length, you don't want to remove any more chat messages and you want to start removing parts of the file. And then you want to remove parts of the file in this particular truncation strategy, which tends to work quite well. So this kind of thing where like as you kind of slide the window and how many tokens you're allotted, you can see like the prompt is like very, very differently constructed. So it's like optimal kind of at all sizings. And we found that quite helpful internally. [00:38:23]

Swyx: And you chose the JSX approach. I'm not going to ask you too much about like design choice. I mean, it's popular with React. Fixies also put out AI JSX. Do you find that like helpful? Do you think that like some kind of DSL might emerge for prompting? [00:38:36]

Aman: Yeah, I think it's still pretty early. And it's not clear what the best way to do. I think for very lightweight, easy prompting, like you should just use strings. When you're doing kind of prompt engineering, and really like rigorous prompt construction where you can have a bunch of different possible inputs in the prompt. We think JSX makes a lot of sense. It's because it's kind of like website development where you'll have different kind of screen sizes, different kinds of devices that can look at it. And in a similar way, you've different kinds of prompts, right? You've different prompt context lengths. And you basically want across all different context lengths to get a very, very good prompt for the model. And so yeah, that's why I think like JSX kind of makes sense. It's not clear if it is like the best way of doing it. I think the jury's still out on that. [00:39:27]

Swyx: One way to deal with the context length model issue is to train your own model that has a very long context length, like magic.dev, which announced a 5 million context length window. I don't know how credible that is. I haven't tried it. But your thoughts? [00:39:42]

Aman: Yeah, I think the issue with context length, long context length right now is that costs scale linearly, right? Costs technically scale quadratically in terms of attention. But the interesting thing is that for really, really large models in terms of flops or actual floating point operations that the models are doing, attention tends to be a pretty negligible part compared to the actual, I guess, feed forward part of the neural network. And so up to like 8K, it tends to look pretty linear. I guess when you're going to like higher and higher context lengths, it starts to get more and more tricky. And then there's some other optimizations or some other difficulties with memory bandwidth that we can get into. It just feels like the key issue is even if it is linear, it's still so expensive, right? Paying for 32,000 tokens at whatever the pricing is right now feels like exorbitantly high. My perspective is that there probably will be at some point in the future, or there might be at some point in the future, like a better approach for really, really long context. Something that looks more kind of recurrent. [00:40:47]

Swyx: It feels more elegant. [00:40:48]

Aman: I don't know if it'll happen because I think there are like interesting ways of hacking together or chaining together these language models, even with short prompts. But I'm not super bullish on kind of scaling up attention the way that we're doing right now in like 100, 200K context windows. [00:41:02]

Swyx: Like Cloud is doing. Yeah. Are you monitoring like RWBKV, which is one of the recurrent approaches? [00:41:07]

Aman: I've been meaning to read that paper. I have not been monitoring that. I looked into a few of the papers from state space, like the state space models. Those are pretty interesting. Can you give an intuition? [00:41:19]

Swyx: Because you seem to be explaining it really well. Why are they different? Why is that interesting? Yeah. [00:41:25]

Aman: I think the interesting thing with, at least with the original state space model, is that you get kind of two benefits. One for training, you get the paralyzability of a transformer and you can kind of run it, I believe in about N log N for some N length sequence. And then for inference, it's also like the way that it's formulated is also somewhat recurrent. So you can kind of store everything in this fixed state. And then because of that, you get, I believe, an O of one kind of cost towards inference. It could be slightly higher, but yeah, it's much less than the O of N cost per token for the transformer. And so that makes it really tractable to then do for very, very long sequences. There's some follow on work with Hungry Hungry Hippos and Hyena. And again, I think the key piece is that for like very, very long sequence lengths, it ends up being N log N rather than N squared. I did say that the cost of like, I guess even linear attention is pretty high, but that's because the 32K model is priced a decent bit higher than the original. It is surprising that Claude, or I'm actually not familiar with Claude's pricing. Is it higher for the 100K one than for the normal? [00:42:31]

Swyx: No, I believe it's the same. [00:42:33]

Aman: That is actually quite surprising. I'm not sure if they're doing attention under the hood because even with like a lot of tricks with 100K or even 200K, I would assume that cost will eventually start to build up. So they might be doing something fancy there. [00:42:46]

Swyx: Well, my guess was alibi, which is a trick, which is replacing proper attention with kind of like a exponentially declining forgetting curve is what I'm thinking. Someone has to put 100K to the test. I haven't done it. [00:43:00]

Aman: Yeah, they have this graph that looks promising for 200K, but I feel like anecdotally from everything that I've heard, it just seems like it forgets things like they don't actually pay attention to things. [00:43:11]

Alessio: I just open-sourced a small podcast there yesterday, which is what we use. [00:43:14]

Swyx: We use it to summarize this podcast. [00:43:16]

Alessio: Yeah, I'm looking at my logs, prompt length of all my recent ones is 55,400 tokens, and it works. [00:43:25]

Swyx: How much per call? [00:43:26]

Alessio: Free, because it's not commercial. I'm like, hopefully nobody from Entropic is listening. But yeah, it works. But I think that's kind of like the sweet spot. And then the completion length is like 1,800, you know, so it's not like it stays within the 60K band. But anyway, yeah, curious to see. And I think another thing from your Twitter model parades that I really like is actually differentiating between the type of workload. I feel like people talk about these models as like anything you do is like the same thing, but you posted about GPT 3.5 being cheaper than LLAMA 2 for completion-heavy workloads. What does that mean? [00:44:07]

Aman: Yeah, so there are different terms, I guess, based on like whatever community you're in. So I think in the research community, they probably call it pre-filling is handling prompt tokens. And then I believe maybe decoding is what they call generating completion tokens. We'll just use prompt tokens and completion tokens. But for prompt tokens, the work, it's entirely compute bound. And the reason why is the same reason why transformers are so good at being kind of being trained in parallel. And it's that you can parallelize the entire sequence or you can parallelize an input, not just along the batch dimension, but the sequence dimension. So that means let's look at the first layer of the transformer. Imagine like that entire layer could fit in memory. I just read that to memory. And then I basically apply the matrix multiplication of the entire sequence on this layer. If you're doing token generation, instead, you have to read the layer, then taking the first input, and then you have to read the next layer and then do that same input. You have to do it all the way to the end of the model. And then you generate the next token and that next token passes through all the layers again. So before what you were doing is you have all your input tokens in parallel, they're going through the first layer. So you read the first layer, then in parallel, they're going through the second layer. You read the second layer, so on and so forth to the end. But when you're doing it for one token at a time, you read the first layer, second layer, third layer, fourth, blah, blah, blah. Then you do it all over again for the next token. And so as a result, for your sequence length N, you end up using N times more memory bandwidth than compute. [00:45:44]

Swyx: And time as well, like wall clock time. Yeah. [00:45:47]

Aman: I mean, so with wall clock time, it's weird because transformers are far more efficient than... [00:45:53]

Swyx: I comment on that because in the RWKV interview that I did, same thing. They have a visual actually of this. So the thing you were trying to describe with words, they actually have a visual and animation But it's helpful because once you see it, you're like, oh, okay, that's why it's like a different graph. Yeah, exactly. Yeah. [00:46:10]

Aman: So when you're dealing with the prompt, it's completely compute bound. And because GPUs can handle some crazy number of floating point operations per second, it's like almost instant. That's why time to first token feels super instant. And then when you're generating one token at a time, it now becomes completely memory bound where for each token, you're bound by how fast you can read all the weights into memory. So that's like around like 200x slower in general. [00:46:34]

Swyx: Yeah. So your specific recommendations, which I pulled out from the post, people should read it. It's really good. I feel like the title undersells it a little bit. Yeah. You should not serve Llama 2 for completion heavy workloads. Llama is best for prompt dominated tasks like classification. And I feel like I can run with that. That makes a lot of sense. [00:46:51]

Aman: And re-ranking is one thing we find useful for it internally. [00:46:54]

Swyx: Do you use Llama 2 right now? [00:46:55]

Aman: We don't have it in production, but we've experimented with it for a few things. [00:46:59]

Swyx: You also had an interesting observation because I think we had talked a lot about quantization in the podcast just for running locally or more efficient running. You said quantization and imperfect utilization cancel each other out. Yes. That's a cool observation. Yeah. [00:47:12]

Aman: So this is like a little bit hand wavy, but the core thing is, yeah, we expect that when you don't have like complete utilization, right, you're never going to like saturate all your GPUs. There's going to be some idle time. Like from things that we've experimented with in the past, it ends up being, you know, 50% is a reasonable amount as a more liberal estimate of how much you can get. So the interesting thing about quantization is that there's a bunch of these kind of new quantization libraries that have cropped up and they're all very good at reducing costs for low batch inference when you're memory bound. But the key thing is when you increase the batch size, they actually end up resulting in no real speed ups over FP16. The reason why is because they only quantize the model weights, right? So that operation of kind of reading the model weights when they're now, you know, 4x smaller instead of FP16, they're, you know, 4 bits or something. [00:48:04]

Swyx: It's still the same number of weights. [00:48:06]

Aman: The operation of reading weights is like it ends up being 3, 4x faster. But the issue is when you increase your batch size enough and for large batch inference, the key thing is it now moves back from being memory to being compute bound again. And when you're compute bound, quantization of model weights basically does nothing. And so it ends up being effectively the same cost. And then the other interesting thing is it's even worse for small models because for, or at least the small LLAMA models, because I believe the smaller ones relative to model size have a much bigger KV cache. I'm not sure if the smaller ones use multi or group query attention. They might not. [00:48:42]

Swyx: They do not. Only the large ones use. Okay, exactly. [00:48:45]

Aman: Yeah. So then because they use normal multi-head attention, the thing is when your batch size increases enough, then the memory bottleneck is not your small quantized model weights. No, it's actually the KV cache. And so quantizing the model weights effectively will do nothing then. So the key insight there is like all these new techniques are fantastic when you're just kind of playing with these models, running them low batch sizes. But when you really try to increase the batch size and serve it in production, they're probably going to be lower or more expensive than FP16 because there are these optimizations with things like text generation inference, which uses VLM or like page attention, which are much, much faster. And so the best that I think you could probably do right now with open source is like full 8-bit quantization, which means not just quantizing the weights, but also like the actual activations and the KV cache so that none of those things end up being bottlenecks. [00:49:36]

Swyx: That's a great breakdown. The post goes into much more detail with a lot of math, actually, which I love. And you also spec out some rules of thumb, which I think people can use to figure out their limitations and pricing and all that good stuff. Yeah. [00:49:49]

Aman: One big caveat I'd say is that the other massive benefit of LLAMA too is that you can fine tune it. [00:49:54]

Swyx: Yeah. Right. Well, you'll be able to fine tune OpenAI soon enough. We'll see. So we'll just get your general takes on LLM topics, just kind of quick fire, and then we'll go to lightning round. So human eval, that is the predominant way to benchmark code models because OpenAI benchmarks code models that way. There's some issues with it. [00:50:13]

Aman: Yeah. With open source models and even probably with some closed source models, it's unclear how much of it has actually leaked into the train set, right? So there's a recent model, New Hope, which it looked like they had some leakage, which is why it had really, really good performance. But I think there was an interesting approach taken by Palm too, where I think this is actually possible for someone to do right now. I've been meaning to do it at some point, but there's this paper called Babel code and they have a library which I think literally translates human eval into all other languages. And I think that would be a really good test because the other issues, a lot of the models that perform really well on human eval are pure Python, right? And that doesn't really give you a sense of if it's a good coding model overall. So yeah, I think at some point it would be really helpful if just someone did the work and ran the Babel code engine and translated human eval into all these other languages and then was able to run it. I think that would probably be a better benchmark, but still. I think if the original human eval problems leaked, I suspect it would also be helpful for solving the problems translated into other languages. But the issue is it's just so easy to run and anything else is probably going to be quite painful. [00:51:24]

Swyx: Right? Well, it'd be better if there was a sandbox to run it. So agent cloud, hashtag. Hot take on training. Yeah. [00:51:32]

Alessio: Another one from your endless Twitter quality. Training will look like researchers offloading large scale training jobs to specialized training companies. A state of the word that resembles chip design and fabrication. Yeah. [00:51:44]

Swyx: How do you think about that? [00:51:45]

Alessio: And obviously Mosaic was on the podcast just got acquired. [00:51:47]

Swyx: So you tweeted that in May in 2022 and then one year later Mosaic gets acquired. Like I think that's a pretty fresh hint. Yeah. [00:51:54]

Aman: I was probably wrong about it in a lot of ways too, because I assumed the future would kind of look like a lot of startups would have their own models. And this is me kind of in the CAD frame of mind where I thought, okay, if you look at GPT-3 at that point, it was just like GPT-3, maybe a little bit 3.5. It wasn't like that good a generalist model. And I thought prompting is not the way to do things. It's just completely fine tuning or training your own models. And it was also a similar time that we kind of saw a lot of the open source earlier efforts in training models, which proved like not that great. I think Bloom and OPT were two models that came around about that time. And if you looked at the OPT logs, they manually tuned their learning rates several times. I think they switched the optimizer from Adam to something really weird where they switched the optimizer in the middle. And don't quote me on this because I could be wrong, but I remember it was like some really, really sketchy stuff down in the middle. And I just thought, wow, if it's this hard, it seems like there's a company to be built around it. The key difference is that there are just massive foundation model companies. And I think most AI product companies are not going to be mostly training their models or mostly using like custom models. It's more so going to look like them kind of using these APIs out of the box. And then maybe using, you know, the fine tuning endpoints there. [00:53:12]

Alessio: Oh, I mean, it's the same. [00:53:14]

Swyx: So you changed your mind a little bit. [00:53:15]

Aman: I did change my mind a little bit. I assumed like with the CAD thing, I thought, okay, you're gonna need a foundation model for CAD. You're going to need a foundation model. [00:53:22]

Swyx: No, that's old school thinking. [00:53:23]

Aman: Yeah. And now it's just like you have the one generalist model. The one God model. And the one God model transfers fantastically well with everything. Okay, quickly move along. [00:53:31]

Swyx: You had another one, which I loved. The size of all code history on GitHub public repos is 92 terabytes. The size of Google's monorepo is 86 terabytes of much higher quality code. If Google were willing to deploy code models trained on your own data, they would have a noticeable advantage over everyone else. Yeah. [00:53:46]

Aman: Again, this is one thing that I think is probably a little wrong. Because this is based on the big science paper. And the big science paper, like basically said they scraped all of GitHub and they got 92 terabytes. And I think if you look closely, which I did kind of after some people kind of pointed out some mistakes, I think GitHub is like a lot, a lot bigger than that. The big science paper said they get cloned. And so I was assuming, okay, get clone means you get the full working tree, right? But if you look a little deeper, I think GitHub is like a lot bigger than people think. My expectation is that GitHub probably has something like five to 10 trillion tokens of code, usable code. And so that's a lot more than what they ended up getting. But yeah, Google still has like a pretty meaningful fraction. [00:54:33]

Swyx: And they just put out IDX, which is somewhat of a competitor. Yeah, yeah. [00:54:37]

Aman: I think it's more like, it looks more like a replit kind of competitor where it's like an in-browser thing. But yeah, I think a lot of people can be viewed as competitors. [00:54:46]

Swyx: But you're very competitive as we established, you know. And then final question, why is the company called AnySphere? And you have this whole manifesto on your landing page on why humans should focus on bigger problems. [00:54:55]

Aman: It's an interesting story where Michael and I were in this program Converge, and two of our friends, Arvid and Swale, who we knew like reasonably well at MIT. And we knew them because they're like some of the best engineers at MIT. And so they were independently kind of working on their own company. It was called AnySphere. And we both independently from after playing with GPT-4 realized, oh, wow, like the IDE is the thing to build. After a few months of independently working on it, we realized, okay, like, why are we doing this separately? We should just kind of join forces. And that's kind of what we did. And so right now, the overall company is called AnySphere. But yeah, the product and the core thing is Cursor. It's lovely. [00:55:34]

Swyx: I recommend people actually check out AnySphere.co and read the manifesto because I think it's a broader message to builders out there. Yeah. [00:55:42]

Alessio: Yeah. Let's jump into lightning round. Okay. We got three questions for you. The first one is, what is something that already happened in AI that you thought would take much longer? [00:55:52]

Aman: I think code. Specifically, I think just being generalist at code, where before you had these specialized models, right, where codex was supposed to be kind of specialized for code. And then there's a general language model, but it's kind of unification of capabilities towards like this one model that's not just really good at text, but it's also fantastic at code. I was not expecting like the generalist model, I guess, to come super, super soon and be this good at code. [00:56:19]

Swyx: That's why you pivoted or you started your whole company. What do you think is the most interesting unsolved question in AI? [00:56:26]

Aman: I really think it's this kind of long-term memory piece where I think it's possible to get to maybe AGI superhuman level systems that still kind of hack around memory using like something that kind of resembles transformers. But it feels like the more elegant thing is how do you get models that really like continuously learn? Some kind of recurrent based system would be able to do this where there's like a state. But right now, like models can only really learn in context super efficiently. Fine-tuning is incredibly inefficient. It requires tons of data points to actually learn new things. So yeah, I'm really interested to see how we solve this lifelong learning efficiency problem. [00:57:06]

Swyx: Yeah. I'm interested in using knowledge graphs to do that because I think that's kind of like a forgotten piece of the puzzle. And if you could have models update their own knowledge graphs and query their own knowledge graphs, that might be it. I think Llama Index is basically working itself into what that is. Oh, interesting. [00:57:22]

Aman: Yeah. And then there's the techniques where the models directly kind of learn to like inside the weights or inside the architecture, you learn how to be able to read from databases and retrieval based like the retro based techniques. Like those seemed interesting, but it's surprising like you haven't really seen anything from that in a while after that initial paper. [00:57:42]

Alessio: And just to wrap the episode up, what's one message you want everyone to remember and think about as they keep building and exploring in AI? [00:57:49]

Swyx: Yeah. [00:57:50]

Aman: I mean, GPT-4 is now a few months old. At some point we're going to get much, much better models and I think it'll be pretty soon. And so what does the world look like then? And specifically for coding, like what does the world look like when you have another step that's just as large as it was from GPT-3 to GPT-4? I think it's just so incredibly different. I think it just completely changes how people write software. [00:58:14]

Swyx: In what direction though? So I've said my piece on like 4.5 being more inference time. I don't actually know if that's true. That's just my theory. [00:58:22]

Aman: I think the direction that we'll probably see is, I mean, the language models will just get better at doing intense reasoning, right? So they'll be able to tackle harder problems. They'll probably pick up in more nuances and how like software engineering is done. They'll probably have longer context windows. And so I expect, yeah, more agentic type things will end up being more prominent in the future. I don't know how far you can take the agent stuff with a four level model, but I think with like a 4.5 or a 5, I think agent models will work for almost any kind of coding task. At least almost any kind of reasonably well-scoped coding tasks. [00:59:00]

Swyx: Agents are the future. Well, thanks so much for coming in. Thanks Aman. Of course. [00:59:04]

Alessio: Thanks for having me. [00:59:04]

Our only more popular episodes so far have been Code Interpreter == GPT 4.5, George Hotz of tinycorp, and Reza Shabani of Replit!

As a proportion of Copilot users, not ALL code on GitHub. Still a big number! And, as some people think, could be insecure or copyright infringing.

Something that Twitter and Reddit seem intent on reminding developers even today.

Naming Coding AI Extension with something other than “Co” challenge [IMPOSSIBLE]

Still reading footnotes? We love the footnote gang here at Latent Space. Here’s a video of Nat Friedman telling the Copilot UX story captured by swyx at the Jasper conf in Feb.